https://daviddebot.github.io/

📃 openreview.net/pdf?id=rEUbD...

📺 www.youtube.com/watch?v=sOTX...

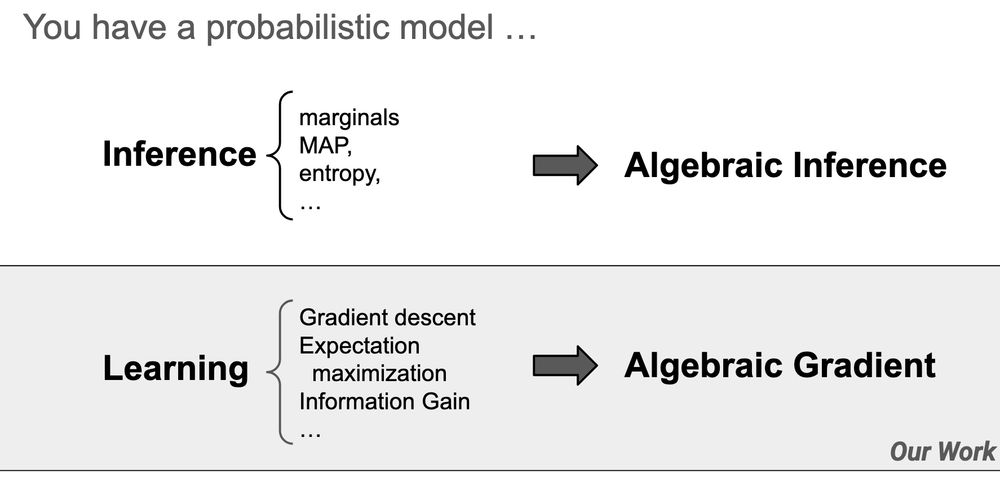

Join us at TPM @auai.org #UAI2025 and show how to build #neurosymbolic / #probabilistic AI that is both fast and trustworthy!

For those at ICLR 🇸🇬: you can get the juicy details tomorrow (poster #414 at 15:00). Hope to see you there!

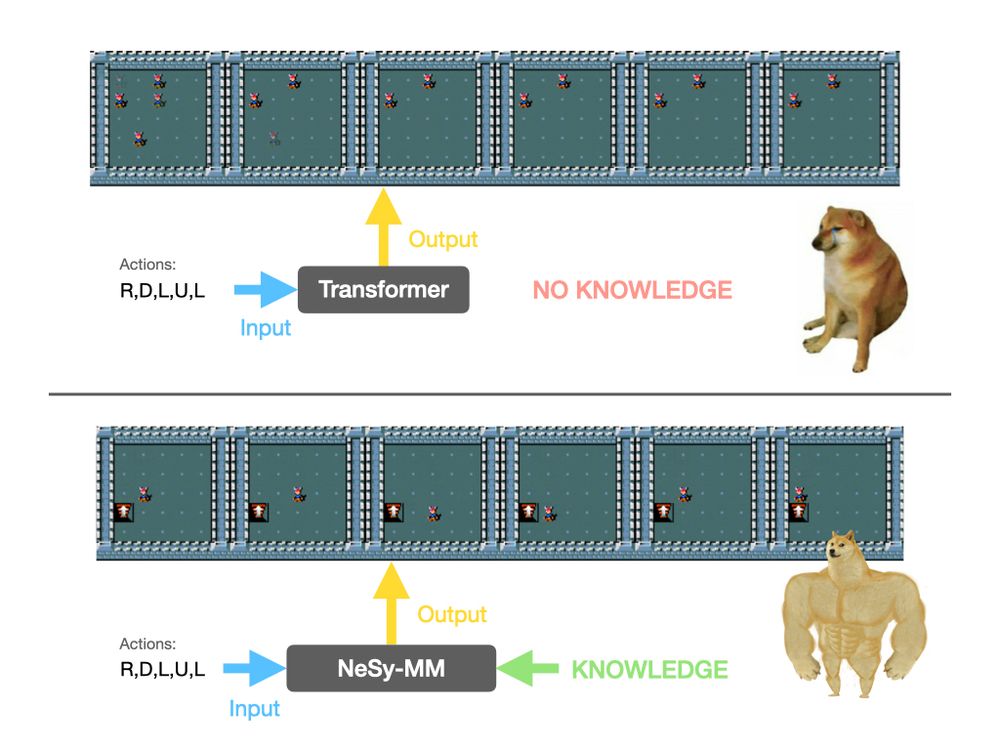

At #AAAI2025, we present our demo for neurosymbolic RL—combining deep learning with probabilistic logic shields for safer, interpretable AI in complex environments. 🏰🔥

🧵👇

(1/8)

Come to my #AAAI2025 oral tomorrow (11:45, Room 119B) to learn more.

📜 Paper: arxiv.org/pdf/2412.13023

💻 Code: github.com/ML-KULeuven/...

🧵⬇️

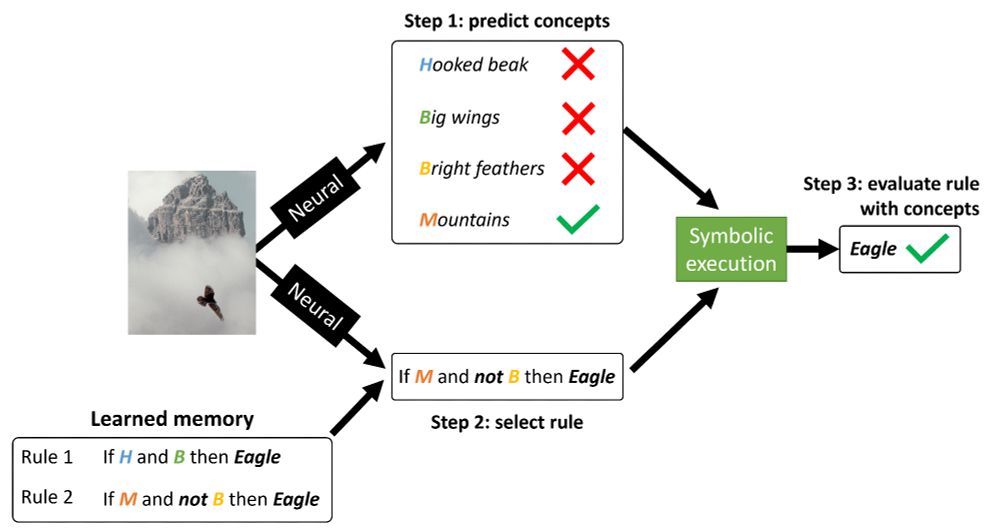

But not anymore, due to Concept-Based Memory Reasoner (CMR)! #NeurIPS2024 (1/7)