👩🏾💻 researching ar/mr/vr through hci and media psych

✨prev: research scientist intern at microsoft research and meta reality labs

🗽🇯🇲🇹🇹

cyanjd.com

✅ Completed my research scientist internship at Meta Reality Labs Research

✅ Co-presented at the #SIGGRAPH Spatial Storytelling demos

✅ Presented at the BIPOC Game Studies conference

www.meta.com/experiences/...

Stanford VHIL & C.U.T.E. blend theater & research via live digital puppetry drawing on Korean folklore. The performer creates, and is in turn shaped by, fantastical representations.

www.prnewswire.com/news-release...

📲 Learn more: www.olin.edu/articles/sto... #CS #AR

I had the joy of recording an episode on Arts & Digital Embodiment with Scarlett Kim, an immersive tech + live performance artist and creative producer.

It's now live! 🎙️ Link below

I feel incredibly lucky and honored that @michellelipinski.bsky.social reached out after encountering my dissertation—we immediately

www.nature.com/articles/s41...

@nathumbehav.nature.com

vhil.stanford.edu/publications...

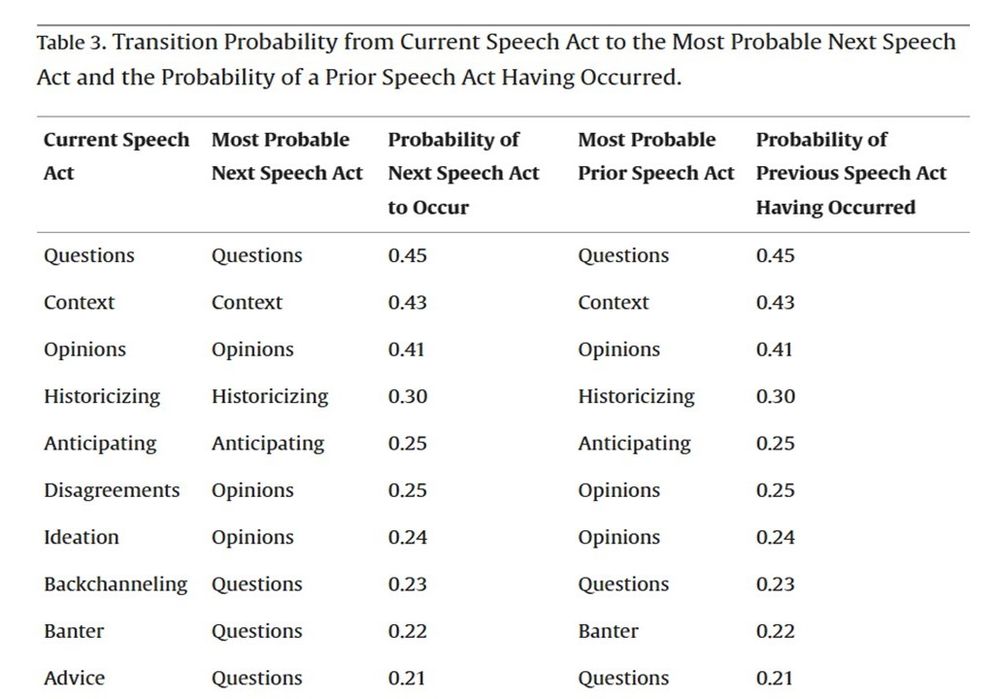

Monique Santoso coded 9,000 speech acts to develop the Virtual Reality Interaction Dynamics Scheme, which defines 10 speech act types (e.g., disagreements, context-dependent commentary). Prior speech acts and current nonverbal behavior predict group action.

vhil.stanford.edu/publications...

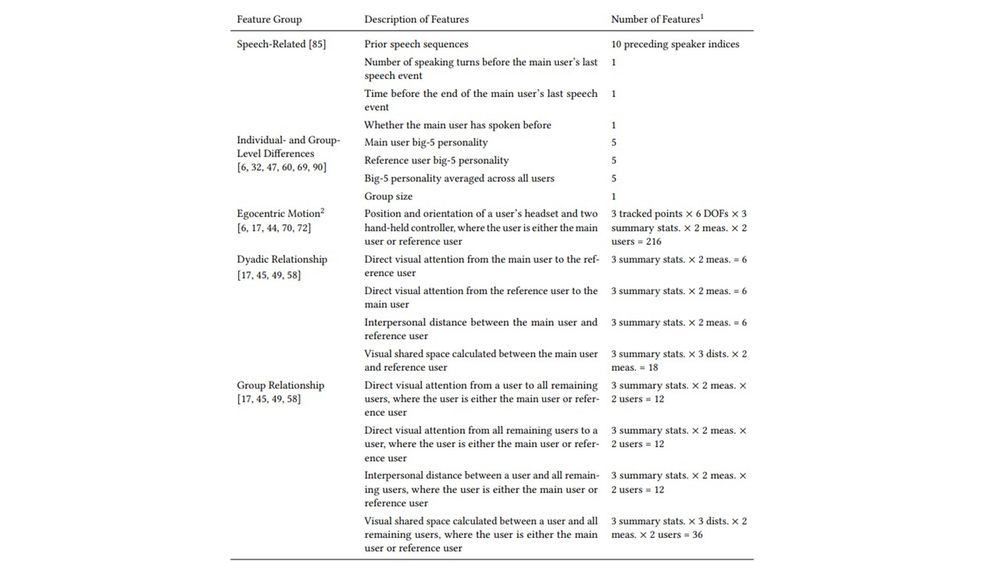

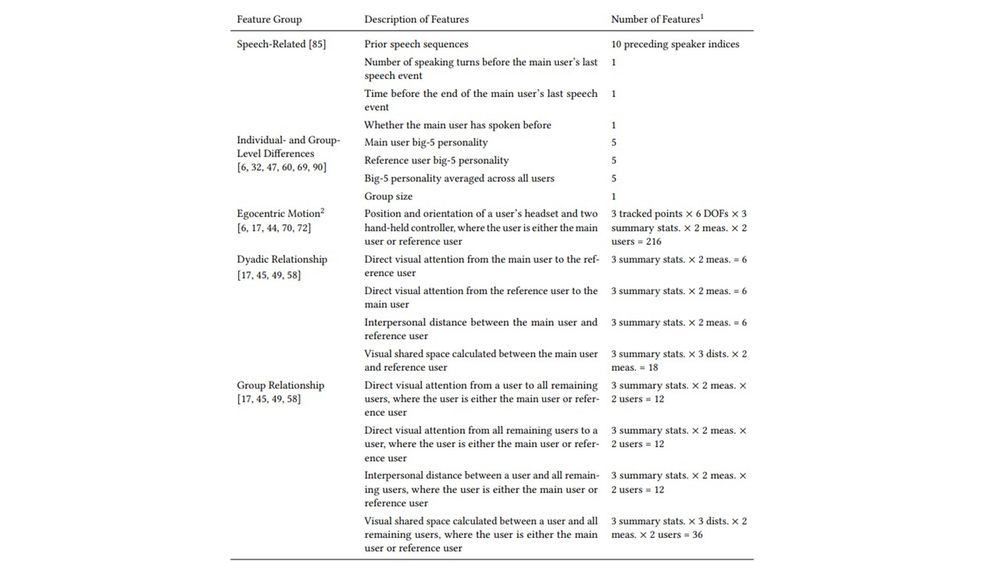

@PortiaWang.bsky.social analyzed a VR dataset of 77 sessions (1,660 minutes of group meetings over 4 weeks). Verbal & nonverbal history, captured at the millisecond level, predicted turn-taking nearly 30% above chance. To appear at @acm-cscw.bsky.social.

vhil.stanford.edu/publications...
