Prev research intern @ EPFL w/ wendlerc.bsky.social and Robert West

MATS Winter 7.0 Scholar w/ neelnanda.bsky.social

https://butanium.github.io

What do chat LLMs learn in finetuning?

Anthropic introduced a tool for this: crosscoders, an SAE variant. We find key limitations of crosscoders & fix them with BatchTopK crosscoders

This finds interpretable and causal chat-only features!🧵
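For readers new to the setup, here is a minimal sketch of a BatchTopK crosscoder's encoder step. It assumes the standard formulation: one shared dictionary of latents whose pre-activations sum contributions from the base and chat models' activations, followed by a BatchTopK rule that keeps the k × batch_size largest activations across the whole batch instead of exactly k per token. The function names and toy numbers are hypothetical, the decoders and training loss are omitted, and this is not the paper's training code.

```python
# Minimal sketch under assumed standard formulations (not the paper's code):
# a crosscoder encoder reading both models' activations, plus a BatchTopK
# sparsity rule. Plain Python lists stand in for tensors.


def crosscoder_pre_acts(x_base, x_chat, W_base, W_chat, b):
    """Pre-activations of a shared latent dictionary.

    Latent j reads from both models: W_base[j] . x_base + W_chat[j] . x_chat + b[j].
    """
    return [
        sum(w * x for w, x in zip(W_base[j], x_base))
        + sum(w * x for w, x in zip(W_chat[j], x_chat))
        + b[j]
        for j in range(len(b))
    ]


def batch_topk(pre_acts, k):
    """Keep the k * batch_size largest ReLU'd activations across the whole batch.

    Unlike per-sample TopK, a single sample may end up with more or fewer
    than k active latents; only the batch-wide budget is fixed.
    """
    batch_size = len(pre_acts)
    n_latents = len(pre_acts[0])
    # ReLU each entry and remember its (sample, latent) position
    flat = [
        (max(pre_acts[i][j], 0.0), i, j)
        for i in range(batch_size)
        for j in range(n_latents)
    ]
    keep = sorted(flat, key=lambda t: t[0], reverse=True)[: k * batch_size]
    out = [[0.0] * n_latents for _ in range(batch_size)]
    for value, i, j in keep:
        out[i][j] = value
    return out


batch = [[5.0, 4.0, -1.0], [0.5, 0.2, 0.1]]
print(batch_topk(batch, k=1))  # [[5.0, 4.0, 0.0], [0.0, 0.0, 0.0]]
```

With k=1 and two samples, the budget is two active latents for the whole batch, and both go to the first sample because it holds the two largest activations.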

New preprint where we investigate the internal mechanisms LLMs use when filtering a list of options.

Spoiler: it turns out LLMs use strategies surprisingly similar to functional programming (think "filter" from Python)! 🧵
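The "filter" analogy refers to Python's built-in `filter`, which applies a predicate to every item of a list and keeps only the items that pass. A toy illustration; the option list and predicate are hypothetical, and this shows only the programming concept, not how the model implements it internally.

```python
# Hypothetical list of options and a predicate ("is it a fruit?")
options = ["apple", "carrot", "banana", "potato"]
fruits = {"apple", "banana"}

# filter() applies the predicate to each item and keeps the matches, in order
selected = list(filter(lambda item: item in fruits, options))
print(selected)  # ['apple', 'banana']
```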

TL;DR: we show that your Narrow Finetuning is showing and might not be a realistic setup to study!

Check out our blog post! 🧵

Thanks to goodfire.ai/ for sponsoring! nemiconf.github.io/summer25/

If you can't make it in person, the livestream will be here:

www.youtube.com/live/4BJBis...

🔎 Demo on Colab: colab.research.google.com/github/ndif-...

📖 Read the full manuscript: arxiv.org/abs/2505.13898

Rather than trying to reverse-engineer the full fine-tuned model, model diffing focuses on understanding how it differs internally from its base model.

In this updated version, we extended our results to several models and showed that these models can actually generate good definitions of mean concept representations across languages. 🧵

With @jkminder.bsky.social, we made a demo that supports both loading our max-activating examples AND running the crosscoder on your own prompt to collect the activations of specific latents!

Send us the cool latents you find! dub.sh/ccdm

I love that our activation-based analysis of multilingual representations now has additional insight from weight-space analysis: bsky.app/profile/sfeu...

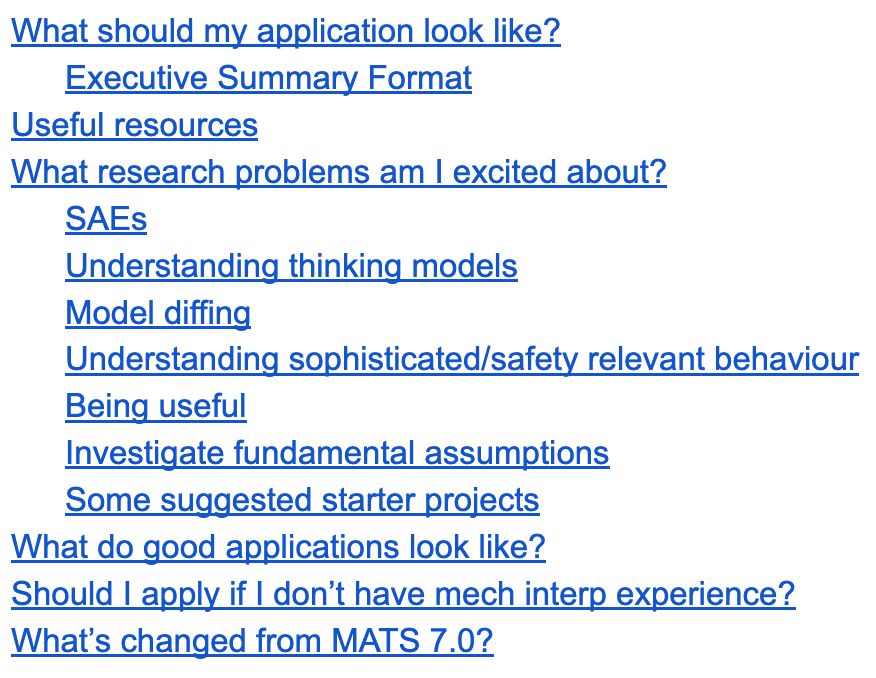

Apply for mentorship this summer at forms.matsprogram.org/turner-app-8

Join ARBOR: Analysis of Reasoning Behaviors thru *Open Research* - a radically open collaboration to reverse-engineer reasoning models!

Learn more: arborproject.github.io

1/N

x.com/NeelNanda5/...

This great GDM safety paper shows that myopically optimising for plans that an overseer approves of, rather than outcomes, reduces these issues while performing well!

x.com/davlindner/...

🚨 #NDIF is opening up more spots in our 405b pilot program! Apply now for a chance to conduct your own groundbreaking experiments on the 405b model. Details: 🧵⬇️

arxiv.org/abs/2411.07404

Co-led with

@kevdududu.bsky.social - @niklasstoehr.bsky.social, Giovanni Monea, @wendlerc.bsky.social, Robert West & Ryan Cotterell.