Dozens of you have shared this lovely video with me over the past couple days. And I just love that it illustrates two baby lessons in one. 🧵

StarVector is a foundation model for generating Scalable Vector Graphics (SVG) code from images and text. It utilizes a Vision-Language Modeling architecture to understand both visual and textual inputs, enabling high-quality vectorization and text-guided SVG creation.

unlike "extended thinking", the think tool is meant to incorporate new information, e.g. from other tools

most notable: their use of pass^k (all of k) instead of pass@k (one of k)

www.anthropic.com/engineering/...
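Here is a minimal sketch of the difference. It assumes each task is run n times and c of those runs succeed. pass@k uses the standard unbiased estimator for "at least one of k sampled runs succeeds". pass^k uses the analogous estimator for "all k sampled runs succeed", which is the form τ-bench uses. The function names are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """pass@k: chance that at least one of k runs, drawn without replacement
    from n recorded runs (c of them successful), is a success."""
    if n - c < k:
        # Not enough failures to fill all k slots, so a success is guaranteed.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def pass_hat_k(n: int, c: int, k: int) -> float:
    """pass^k: chance that all k runs drawn from the n recorded runs succeed.
    comb(c, k) is 0 when k > c, so the result is 0 in that case."""
    return comb(c, k) / comb(n, k)


# 10 runs of one task, 7 successes, k = 3
print(round(pass_at_k(10, 7, 3), 3))   # 0.992
print(round(pass_hat_k(10, 7, 3), 3))  # 0.292
```

For an agent that succeeds on 70% of runs, pass@3 looks nearly perfect, while pass^3 shows how rarely it succeeds three times in a row. That gap is why pass^k is the stricter reliability metric.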

We want models that match our values...but could this hurt their diversity of thought?

Preprint: arxiv.org/abs/2411.04427

Turns out: yes!

Thrilled to share our latest preprint where we used FunSearch to automatically discover symbolic cognitive models of behavior.

1/12

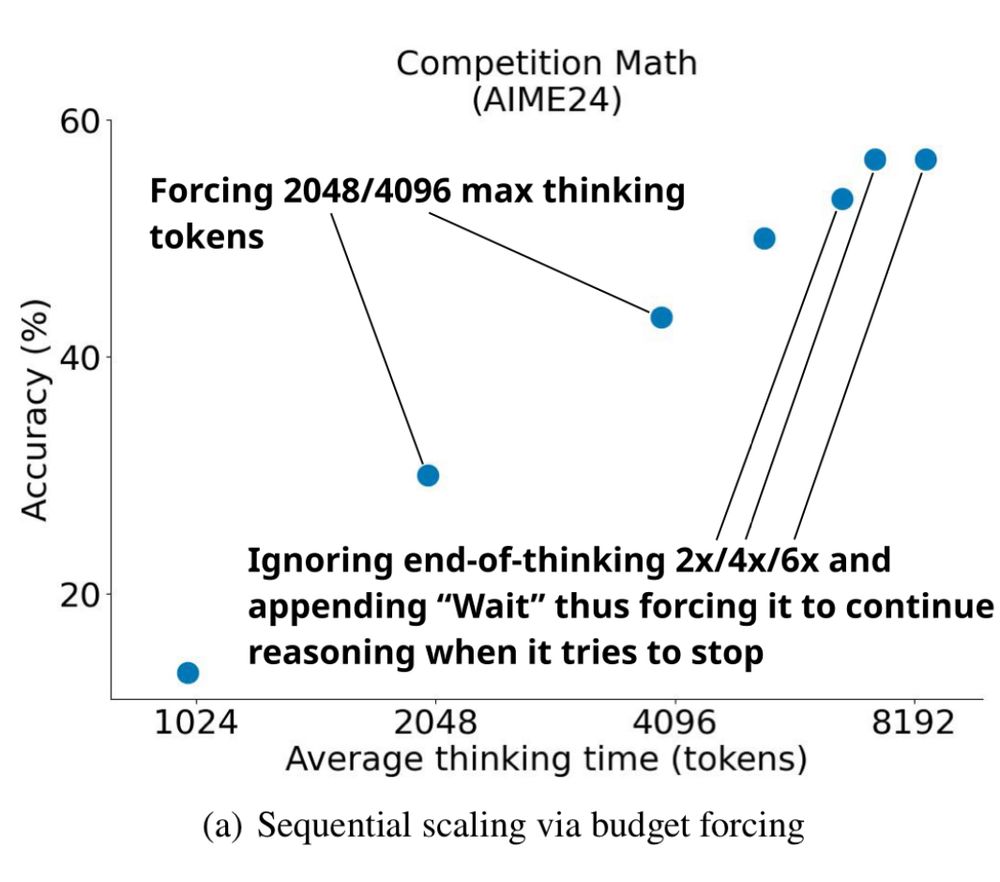

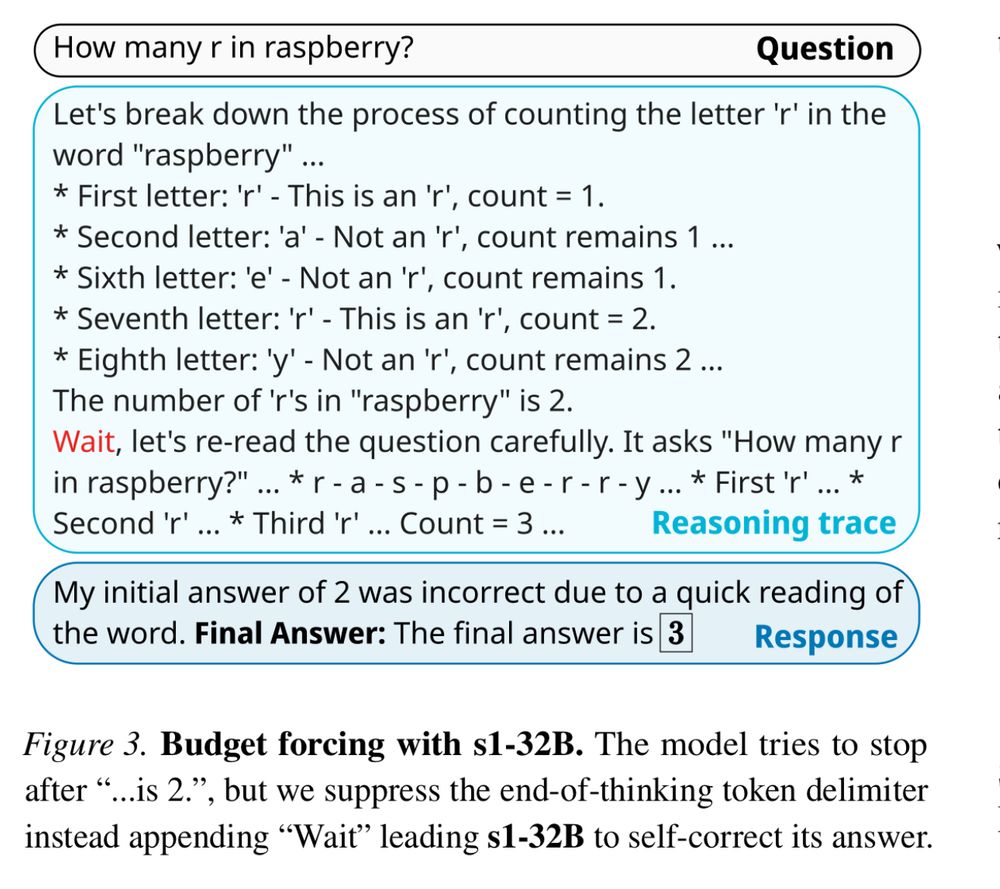

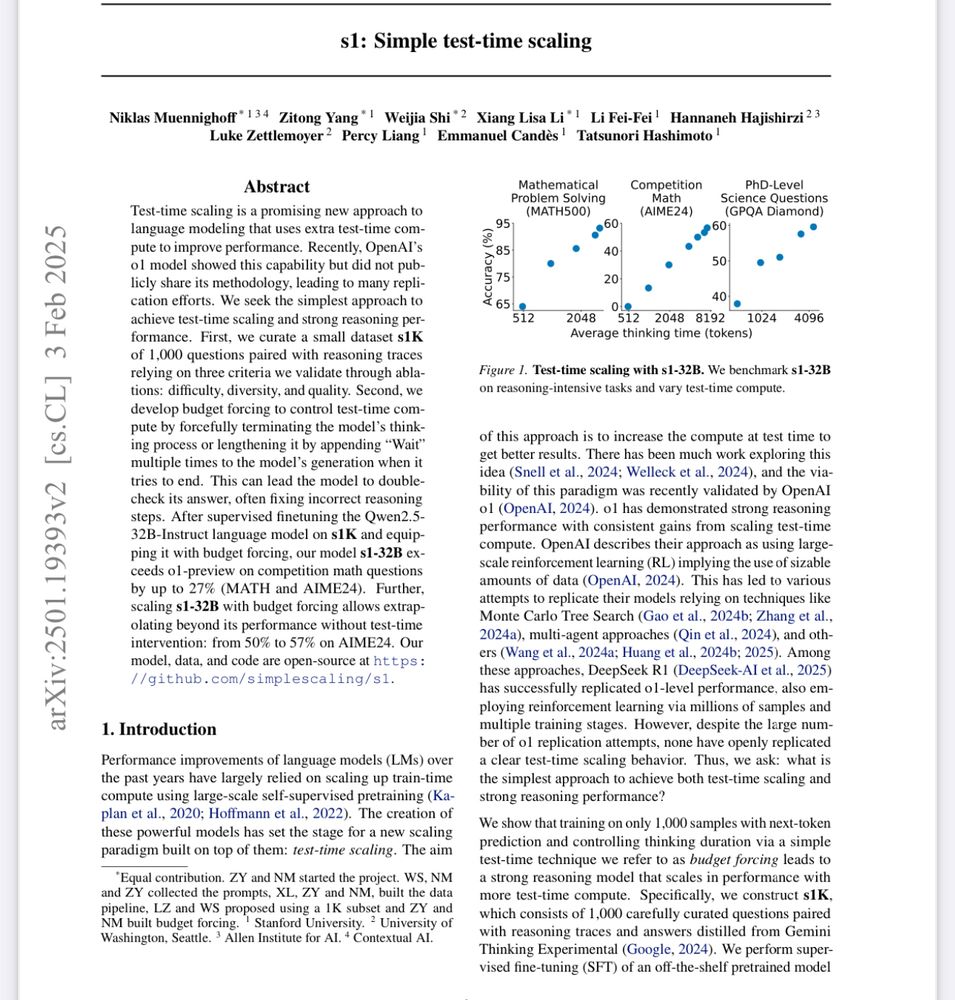

1) We are still VERY early in the development of Reasoners

2) There is high value in understanding how humans solve problems & applying that to AI

3) Higher possibility of further exponential growth in AI capabilities as techniques for thinking traces compound

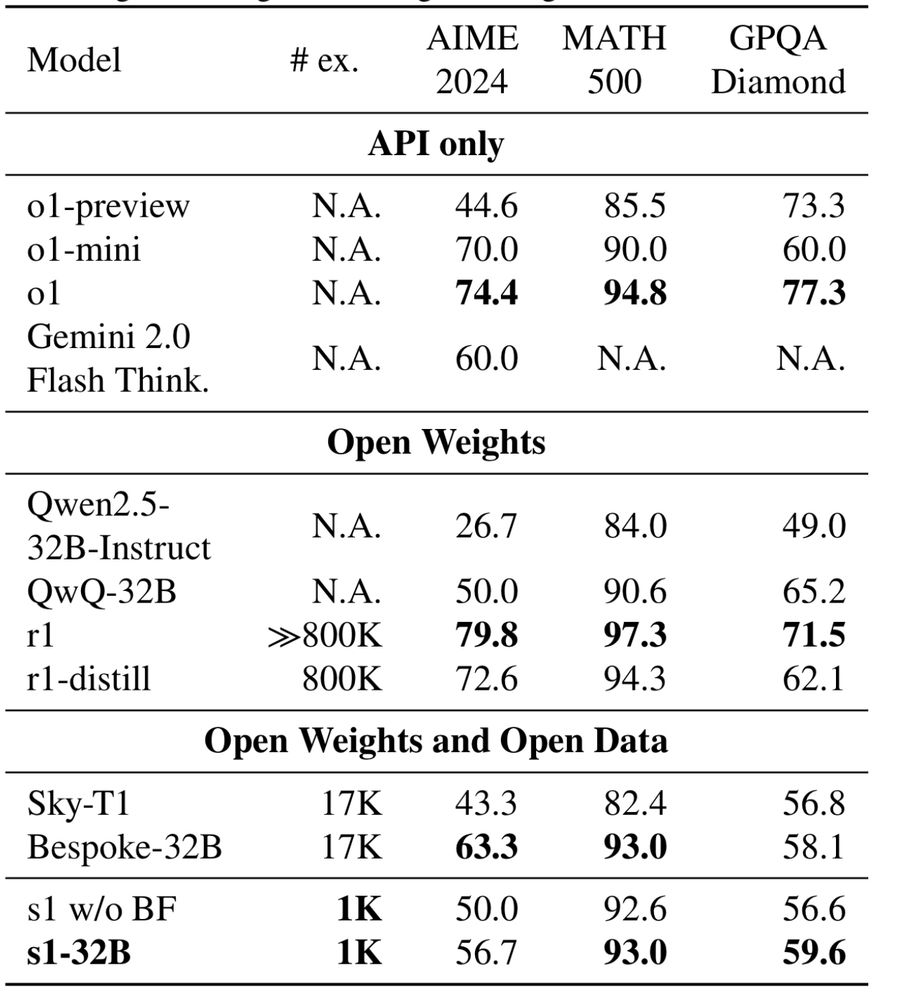

They used just 1,000 carefully curated reasoning examples & a trick where if the model tries to stop thinking, they append "Wait" to force it to continue. Near o1 at math. arxiv.org/pdf/2501.19393
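The "Wait" trick can be sketched as a decoding loop. It rests on a few assumptions. The model is a hypothetical `generate(trace) -> next_chunk` function. `</think>` is a hypothetical end-of-thinking delimiter. The `max_steps` safety cap is an addition of this sketch, not something taken from the paper. Whenever the model tries to close its reasoning before it has been extended `min_extensions` times, the delimiter is dropped and "Wait" is appended instead.

```python
from typing import Callable, Iterable

END_THINK = "</think>"  # hypothetical end-of-thinking delimiter
WAIT = "Wait"           # appended in place of a premature stop


def budget_forced_thinking(
    generate: Callable[[str], str],
    prompt: str,
    min_extensions: int = 1,
    max_steps: int = 50,
) -> str:
    """Grow a reasoning trace, replacing the first `min_extensions`
    attempts to stop thinking with "Wait" so the model keeps going."""
    trace = prompt
    extensions = 0
    for _ in range(max_steps):
        chunk = generate(trace)
        if END_THINK not in chunk:
            trace += chunk
            continue
        # The model tried to stop thinking: keep only the text before the delimiter.
        trace += chunk.partition(END_THINK)[0]
        if extensions < min_extensions:
            trace += WAIT  # suppress the stop and nudge it to continue
            extensions += 1
            continue
        return trace + END_THINK
    # Safety cap (an assumption of this sketch): close the trace anyway.
    return trace + END_THINK


def scripted_model(outputs: Iterable[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM that returns pre-written chunks in order."""
    it = iter(outputs)
    return lambda _trace: next(it)


model = scripted_model(["2+2 is 4. </think>", ", let me double-check: 2+2 = 4. </think>"])
print(budget_forced_thinking(model, "Q: what is 2+2? ", min_extensions=1))
# Q: what is 2+2? 2+2 is 4. Wait, let me double-check: 2+2 = 4. </think>
```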

Check out this Comprehensive Python Cheatsheet by Jure Šorn,

This is a deep dive into Proximal Policy Optimization (PPO), which is one of the most popular algorithms used in RLHF for LLMs, as well as Group Relative Policy Optimization (GRPO) proposed by the DeepSeek folks.
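The two core pieces can be sketched in plain Python on a single group of sampled responses. The first is PPO's clipped surrogate objective. The second is GRPO's group-relative advantage, which replaces a learned value function by normalizing each response's reward against the other responses sampled for the same prompt. This is a simplified sketch and not a full training loop. It omits GRPO's KL penalty and per-token averaging, and it uses the population std, although implementations differ on that choice. The function names are illustrative.

```python
import math
from statistics import mean, pstdev


def grpo_advantages(rewards, eps=1e-8):
    """Group-relative advantages: (r - mean) / std over one prompt's sampled
    responses. If every reward is equal, all advantages are 0 (eps avoids /0)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def ppo_clip_objective(logp_new, logp_old, advantages, eps=0.2):
    """Mean of PPO's clipped surrogate min(r*A, clip(r, 1-eps, 1+eps)*A),
    where r = exp(logp_new - logp_old) is the per-sample probability ratio.
    Training maximizes this (i.e. minimizes its negative)."""
    terms = []
    for ln, lo, a in zip(logp_new, logp_old, advantages):
        r = math.exp(ln - lo)
        clipped = min(max(r, 1 - eps), 1 + eps)
        terms.append(min(r * a, clipped * a))
    return mean(terms)


# Four sampled answers to one prompt, two judged correct (reward 1) and two wrong.
adv = grpo_advantages([1.0, 0.0, 1.0, 0.0])
print([round(a, 3) for a in adv])  # [1.0, -1.0, 1.0, -1.0]
```

Clipping stops the update from pushing a response's probability ratio far past 1 ± eps in the direction its advantage favors. GRPO reuses this clipped objective but feeds it the group-normalized advantages in place of critic-based estimates.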

https://github.com/YigitDemirag/forward-gradients

www.nature.com/articles/s41...