A small thread 🧵👇

huggingface.co/collections/...

HF model collection for OpenCLIP and timm:

huggingface.co/collections/...

And of course big_vision checkpoints:

github.com/google-resea...

arxiv.org/abs/2502.14786

HF blog post from @arig23498.bsky.social et al. with a gentle intro to the training recipe and a demo:

huggingface.co/blog/siglip2

Thread with results overview from Xiaohua (only on X, sorry - these are all in the paper):

x.com/XiaohuaZhai/...

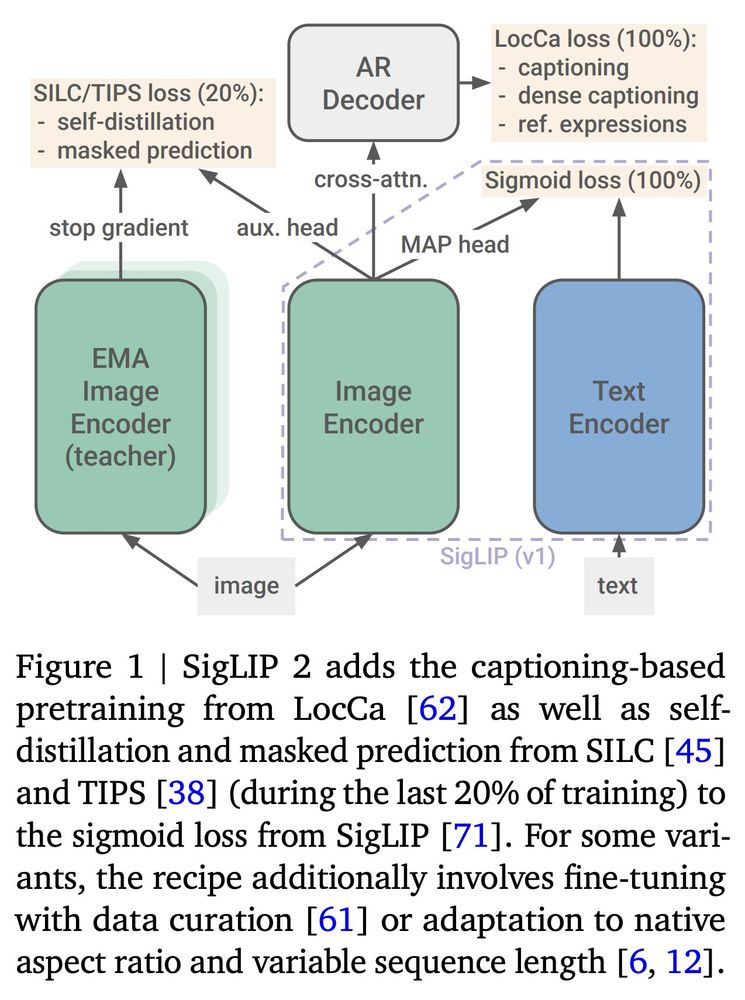

TL;DR: Improved high-level semantics, localization, dense features, and multilingual capabilities via drop-in replacement for v1.

Bonus: Variants supporting native aspect ratio and variable sequence length.

A thread with interesting resources👇

Build a proof-of-concept API, hosting Qwen2.5-VL-7B-Instruct on Hugging Face Spaces using Docker.

huggingface.co/blog/ariG234...

They listen to the community and put effort into building competitive models.

I was intrigued by their latest `Qwen/QwQ-32B-Preview` model and wanted to play with it.

[1/N]

1️⃣ Understanding tool calling with Llama 3.2 🔧

2️⃣ Using Text Generation Inference (TGI) with Llama models 🦙

(links in the next post)

I will go first, the data pipeline.

Note: MB should be GB in the diagram.

Bonus: Claim a 3-month PyCharm subscription using PyCharm4HF

Blog Post: huggingface.co/blog/pycharm...

Try out the FLUX model in JAX. It also works on TPUs if that is your thing.

For people who want to work on it, there are open issues as well. Happy coding!
