We are excited to launch the #EpiTrainingKit #Africa: Introduction to Infectious Disease Modelling for Public Health.

🎯 Tailored for the African context

🌍 With a gender perspective

🆓 Open-access and online

#EpiTKit #Epiverse #PublicHealth

We’re expanding to be more inclusive & diverse, reaching a wider audience in public health & data science.

Want to know more about what we do?⁉️🤔

🧵a thread!

~3 pages is the longest form we’ve tried.

They've got the context length for it. And new models seem capable of the multi-hop reasoning required for plot. So why hasn't anyone demoed a model that can write long interesting stories?

I do have a theory ... +

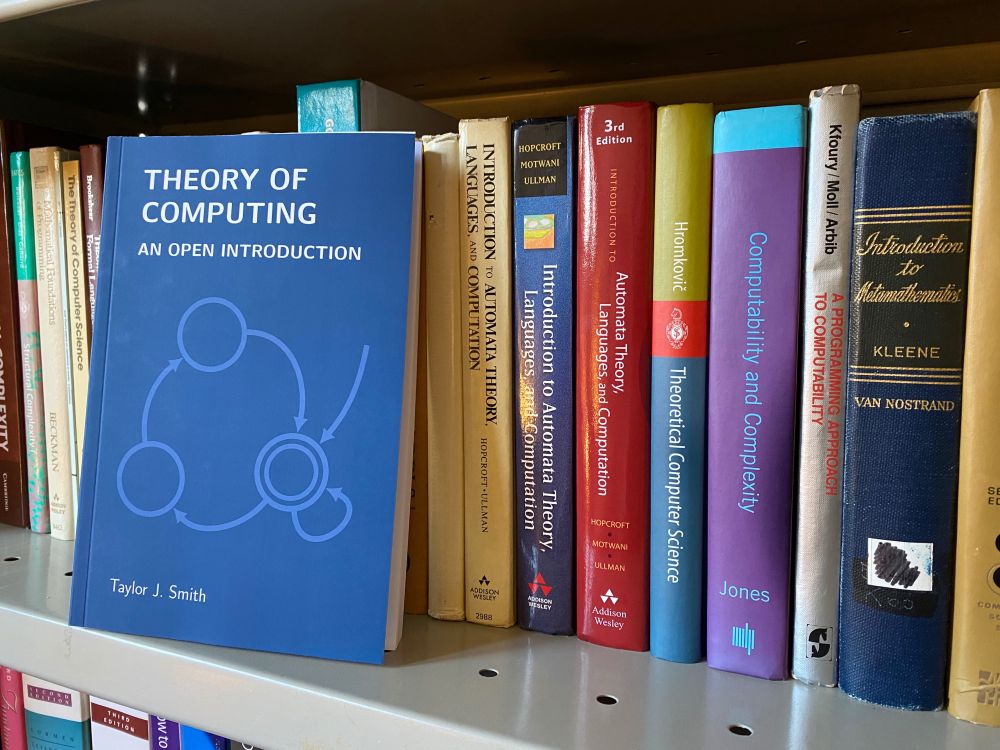

This term was the first time I used it in class, and students loved it. Big plans for future editions, so stay tuned!

taylorjsmith.xyz/tocopen/

docs.google.com/presentation...

Since the title is misleading, let me also say: US academics do not need $100k for this. They used 2,000 GPU hours in this paper; NSF will give you that. #MLSky
