Hierarchical structure understanding in complex tables with VLLMs: a benchmark and experiments

https://arxiv.org/abs/2511.08298

(1) p2-TQA: A Process-based Preference Learning Framework for Self-Improving Table Question Answering Models

(2) Hierarchical structure understanding in complex tables with VLLMs: a benchmark and experiments

🔍 More at researchtrend.ai/communities/LMTD

A lot is happening in the computational analysis of visual content as we increasingly recognize the visual nature of contemporary communication.

This is a much-needed service for all developers and users of VLLMs and agents.

It also indicates that GitHub is on a clear path to being absorbed by Microsoft, as I have previously noted.

Waste-Bench: A Comprehensive Benchmark for Evaluating VLLMs in Cluttered Environments

https://arxiv.org/abs/2509.00176

#AWS Deep Learning AMIs, Best Practices, Expert (400) […]

[Original post on aws.amazon.com]

#KDD2025 #WearableAI #VLLMs admscentre.org/4mu7lFx

No idea what is best in class at the moment.

I want to distill unstructured key info out of videos up to 5 minutes long.

OSS-wise Qwen2.5-VL seems neat.

Their GH looks very unmaintained :)

Sonnet? Gemini? GPT???

Any advice?

Plz

MPEG-7 fingerprinting, deep belief networks, TensorFlow, PyTorch, Core ML, VLLMs.

First POC:

vimeo.com/148001471

Luigi Arminio, Matteo Magnani, Matias Piqueras, Luca Rossi, Alexandra Segerberg

Session: Posters II (Atrium)

23 Jul 2025, 13:30 – 14:30

Write instructions like:

– "Summarize briefly using 2+ sources"

– "If question ends in 'detailed', use this format..."

Most VLLMs support this. It’s like giving your assistant a style guide plus ready answers to likely dilemmas.
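
A minimal sketch of packing such rules into a system message, assuming the common chat format of role/content dictionaries that many VLLM APIs accept. The rule wording, `build_messages`, and `DETAILED_FORMAT` are hypothetical; the post elides the actual format, so it stays a placeholder here.

```python
from typing import Sequence

# Hypothetical placeholder: the post does not say which format to use.
DETAILED_FORMAT = "<your detailed-answer format here>"

# Hypothetical style guide, modeled on the two example instructions above.
STYLE_GUIDE: tuple[str, ...] = (
    "Summarize briefly using 2+ sources.",
    f"If the question ends in 'detailed', use this format: {DETAILED_FORMAT}",
)


def build_messages(question: str, rules: Sequence[str] = STYLE_GUIDE) -> list[dict[str, str]]:
    """Put the style rules in a system message ahead of the user's question."""
    system_prompt = "Follow these rules when answering:\n" + "\n".join(
        f"- {rule}" for rule in rules
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]
```

The model, not the client code, decides whether the "detailed" rule applies; the system message just states the rule once so every turn inherits it.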

Nothing to do but chat with VLLMs like you

From Pixels and Words to Waves: A Unified Framework for Spectral Dictionary vLLMs

https://arxiv.org/abs/2506.18943

arxiv.org/html/2506.04...

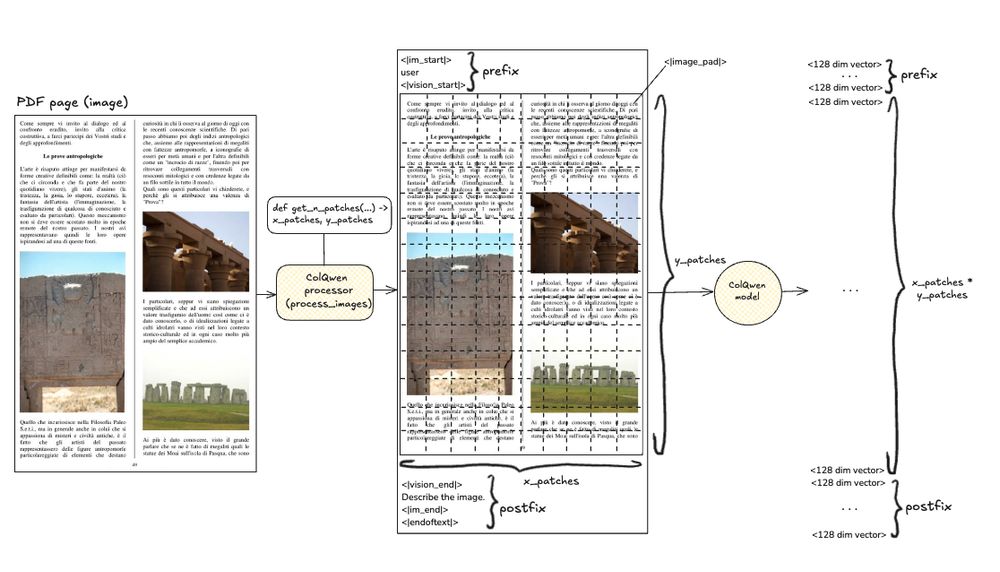

But scaling it to 20,000+ PDF pages? Brutal.

VLLM-based retrievers like ColPali and ColQwen create on the order of a thousand vectors per page (ColPali: 1,024 image-patch embeddings of 128 dimensions each).

@qdrant_engine solves this, but only if you optimize smartly.

Here’s how to scale visual PDF retrieval without melting your GPU:
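
A minimal pure-Python sketch of one common approach, not necessarily the recipe this thread goes on to give. It first estimates the raw index size, then searches in two stages: a cheap pass over one mean-pooled vector per page, followed by exact late-interaction (MaxSim) reranking of a short candidate list. The 1,024 × 128 float32 defaults match ColPali page embeddings. `raw_index_bytes`, `two_stage_search`, and the `prefetch`/`top_k` values are hypothetical names chosen for illustration. Pooling the query to a single vector is a simplification; in a real deployment the vector database would run both stages.

```python
from typing import Sequence

Vector = Sequence[float]
MultiVector = Sequence[Vector]  # one page (or query) = many patch/token vectors


def raw_index_bytes(pages: int, vectors_per_page: int = 1024, dim: int = 128,
                    bytes_per_float: int = 4) -> int:
    """Uncompressed float32 storage for every patch vector of every page."""
    return pages * vectors_per_page * dim * bytes_per_float


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def mean_pool(vectors: MultiVector) -> list[float]:
    """Collapse many vectors into their element-wise mean (one vector per page)."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def max_sim(query: MultiVector, page: MultiVector) -> float:
    """ColBERT-style late interaction: best-matching patch per query vector, summed."""
    return sum(max(dot(q, p) for p in page) for q in query)


def two_stage_search(query: MultiVector, pages: Sequence[MultiVector],
                     prefetch: int = 100, top_k: int = 5) -> list[int]:
    """Return indices of the top_k pages: pooled prefilter, then MaxSim rerank."""
    pooled_query = mean_pool(query)
    # In practice the pooled page vectors are computed once at indexing time.
    pooled_pages = [mean_pool(page) for page in pages]
    candidates = sorted(range(len(pages)),
                        key=lambda i: dot(pooled_query, pooled_pages[i]),
                        reverse=True)[:prefetch]
    # Exact multi-vector scoring only on the short candidate list.
    reranked = sorted(candidates, key=lambda i: max_sim(query, pages[i]), reverse=True)
    return reranked[:top_k]
```

For 20,000 pages, `raw_index_bytes(20_000)` returns 10,485,760,000 bytes, about 10.5 GB of float32 vectors before any quantization. That is why the cheap pooled pass does most of the work and the full multi-vectors are touched only for the few candidates worth reranking.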
