Research Fellow @ RBC Borealis

Model analysis, interpretability, reasoning and hallucination

Studying model behaviours to make them better :))

Looking for a Fall '26 PhD position

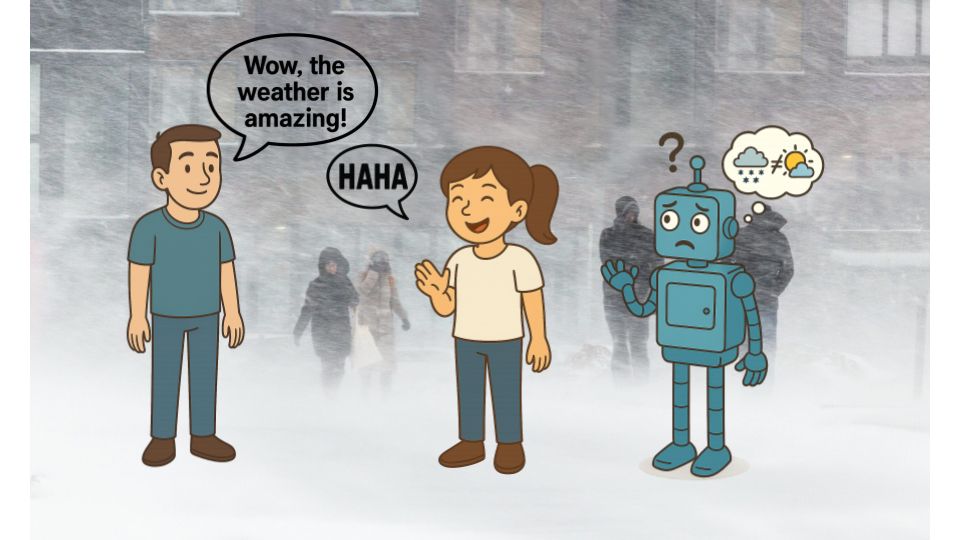

Our #ACL2025 (Main) paper shows that hallucinations under irrelevant contexts follow a systematic failure mode, revealing how LLMs generalize using abstract classes + context cues, albeit unreliably.

📎 Paper: arxiv.org/abs/2505.22630 1/n

Come to our poster at #ACL2025 on July 29th at 4 PM in Level 0, Halls X4/X5. Would love to chat about interpretability, hallucinations, and reasoning :)

@mcgill-nlp.bsky.social @mila-quebec.bsky.social

Paper: arxiv.org/abs/2506.09301 to appear @ #ACL2025 (Main)
