Member of @belongielab.org, ELLIS @ellis.eu, and Pioneer Centre for AI🤖

Computer Vision | Multimodality

MSc CS at ETH Zurich

🔗: zhaochongan.github.io/

Curious how MULTIMODALITY can enhance FEW-SHOT 3D SEGMENTATION WITHOUT any additional cost? Come chat with us at the poster session — always happy to connect!🤝

🗓️ Fri 25 Apr, 3 - 5:30 pm

📍 Hall 3 + Hall 2B #504

More to follow

💠 2D images (leveraged implicitly during pretraining)

💠 Text (using class names)

—all at no extra cost beyond the 3D-only setup. ✨

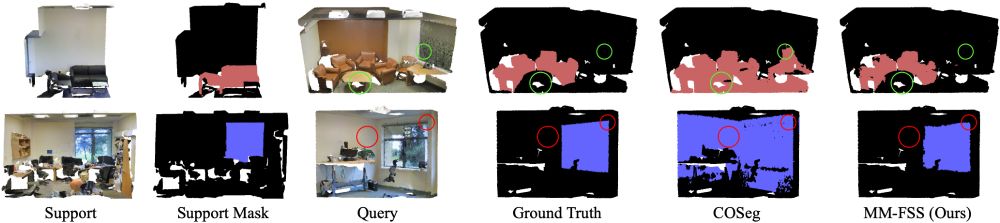

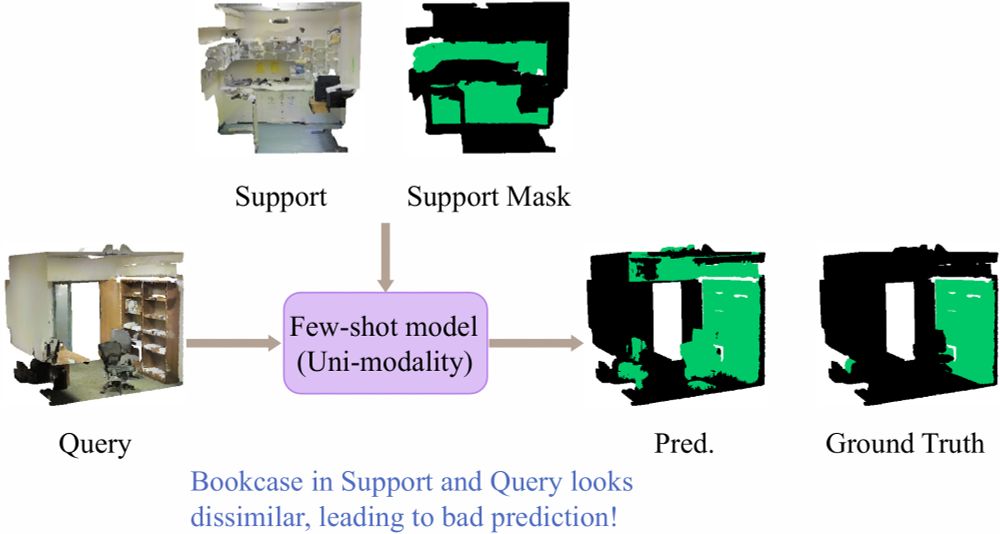

However, when support and query objects look very different, performance can suffer, limiting effective few-shot adaptation. 🙁

With MM-FSS, we take it even further!

Ref: COSeg arxiv.org/pdf/2410.22489

Our model MM-FSS leverages 3D, 2D, & text modalities for robust few-shot 3D segmentation—all without extra labeling cost. 🤩

arxiv.org/pdf/2410.22489

More details👇
