They were thought to rely only on a positional one—but when many entities appear, that system breaks down.

Our new paper shows what these pointers are and how they interact 👇

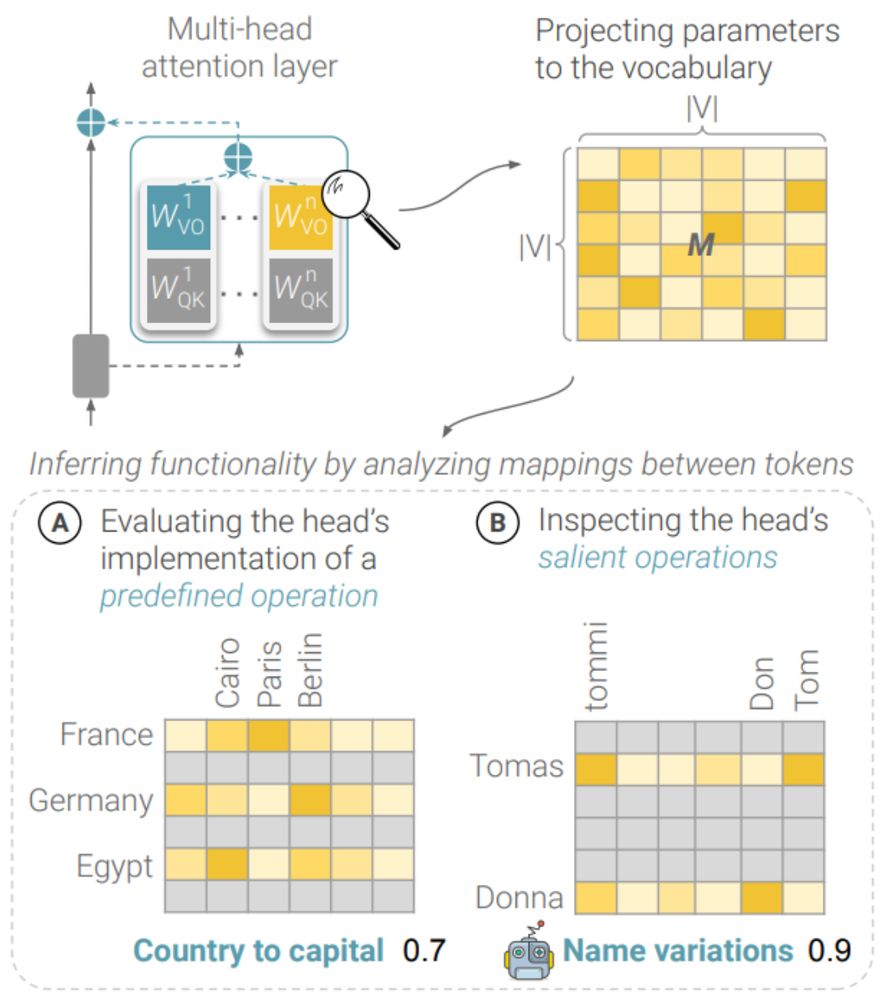

Interpreted by projecting the vector to vocabulary space, yielding a list of tokens associated with it
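
A minimal sketch of what such a vocabulary projection could look like, assuming a toy unembedding matrix and vocabulary. The names `top_tokens`, `unembedding`, and `vocab` are hypothetical illustrations, not taken from the paper:

```python
import numpy as np

def top_tokens(vector, unembedding, vocab, k=3):
    """Project `vector` (shape d) through `unembedding` (shape d x |V|)
    and return the k tokens with the highest scores."""
    logits = vector @ unembedding      # one score per vocabulary token
    top = np.argsort(-logits)[:k]      # indices of the k largest scores
    return [vocab[i] for i in top]

# Toy values, all hypothetical: a 2-dimensional hidden space and 5 tokens.
vocab = ["Paris", "London", "cat", "dog", "blue"]
unembedding = np.array([
    [2.0, 1.5, 0.0, 0.1, -1.0],
    [0.0, 0.2, 3.0, 2.5, 0.0],
])
vector = np.array([1.0, 0.1])

print(top_tokens(vector, unembedding, vocab, k=2))
```

In a real model the unembedding matrix would come from the model's output layer, and a normalization step may be applied to the vector first; both details are left out of this sketch.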

We were curious how these mechanisms develop throughout training, so we evaluated their existence across OLMo checkpoints 👇

Most methods are shallow, coarse, or overreach, adversely affecting related or general knowledge.

We introduce 🪝𝐏𝐈𝐒𝐂𝐄𝐒, a general framework for Precise In-parameter Concept EraSure. 🧵 1/

Current pipelines use activating inputs, which is costly and ignores how features causally affect model outputs!

We propose efficient output-centric methods that better predict the steering effect of a feature.

New preprint led by @yoav.ml 🧵1/

We present an efficient framework – MAPS – for inferring the functionality of attention heads in LLMs ✨directly from their parameters✨

A new preprint with Amit Elhelo 🧵 (1/10)
