Is publishing rules enough if users can't understand them?

Our team, led by @friederikeq.bsky.social, answers this question with two innovative experimental studies on user comprehension.

More on that soon!

-- The largest platforms are most likely to publish guidelines

-- But those guidelines tend to be longer and more complex

-- Guidelines have grown more complex under new regulation (like the DSA)

We analyzed them for:

-- Length

-- Readability

-- Semantic complexity

And more importantly — can regular users understand those rules?

We built a new dataset (COMPARE) to find out. Access it on GitHub: github.com/transparency...

Download and read the full report: osf.io/s3kcw

🎓 @spyroskosmidis.bsky.social

🎓 @janzilinsky.bsky.social

🎓 @friederikeq.bsky.social

🎓 @franziskapradel.bsky.social

Led by the Chair of Digital Governance @tum.de + the Content Moderation Lab (a TUM & @ox.ac.uk collaboration)

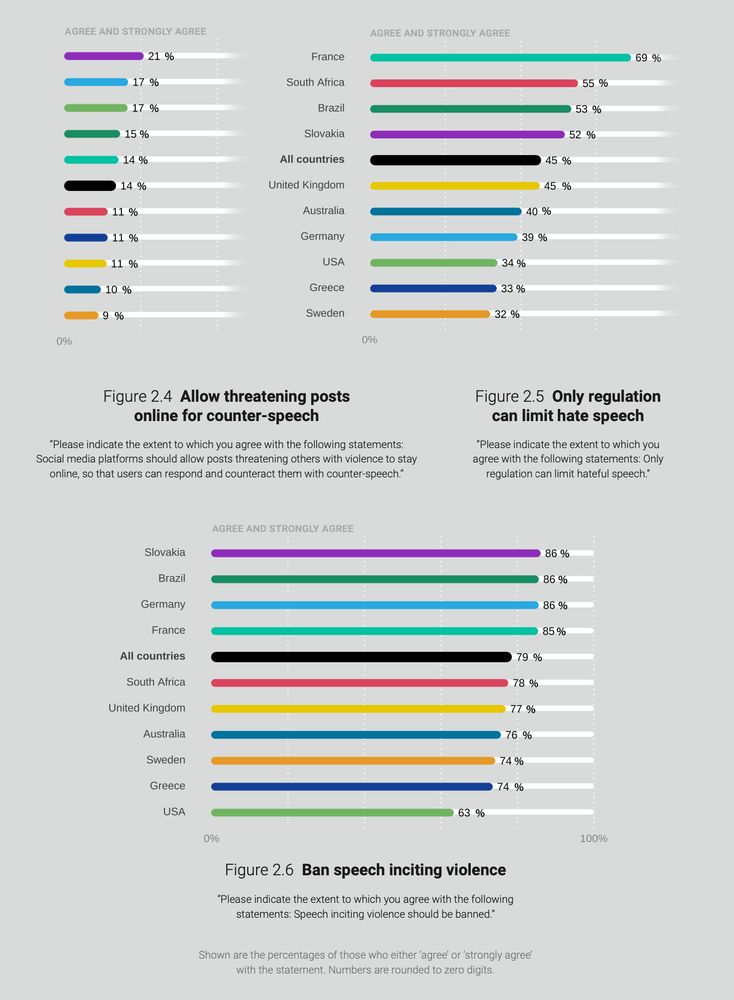

🔹 Most people want some level of content moderation—but opinions vary on who should be responsible.

🔹 There’s strong public concern about misinformation, toxicity, and online harm.

🔹 Many users feel platforms aren’t doing enough—but they also worry about overreach.

🔹 Who do people think should moderate online content?

🔹 How do they balance free speech vs. harm prevention?

🔹 What concerns them most—toxicity, misinformation, platform power?

Below is a glimpse of some of the key findings in the report.

Stay tuned for the upcoming report with @janzilinsky.bsky.social, @friederikeq.bsky.social, @spyroskosmidis.bsky.social & @franziskapradel.bsky.social. 🚀

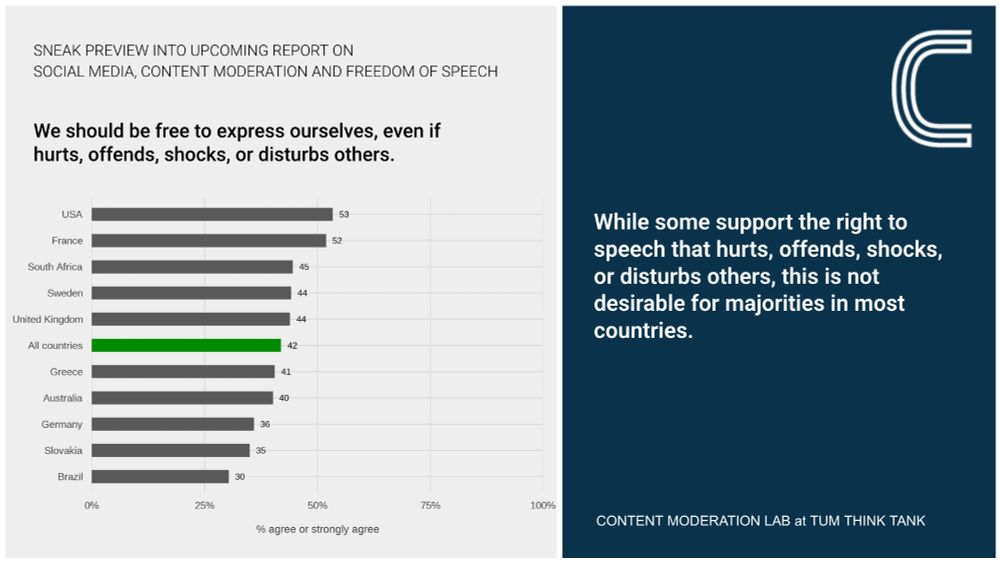

"We should be free to express ourselves, even if it hurts, offends, shocks, or disturbs others."

But where do we draw the line between free expression and harmful speech?

In an upcoming report, we dive into this critical trade-off 📊

In the U.S., support rises to just 29%.

There seems to be very little tolerance for hateful speech.
