The 2nd CEGIS workshop on visual generative models evaluation at #ICCV2025 #ICCV @iccv.bsky.social

🌴 New deadline: July 2nd 2025 (11:59 pm, Pacific Time)

📝 Submission site: cmt3.research.microsoft.com/CEGIS2025

🏁 Check details at: sites.google.com/view/cegis-w...

Instance-Level Recognition and Generation (ILR+G) Workshop at ICCV2025 @iccv.bsky.social

📅 new deadline: June 26, 2025 (23:59 AoE)

📄 paper submission: cmt3.research.microsoft.com/ILRnG2025

🌐 ILR+G website: ilr-workshop.github.io/ICCVW2025/

#ICCV2025 #ComputerVision #AI

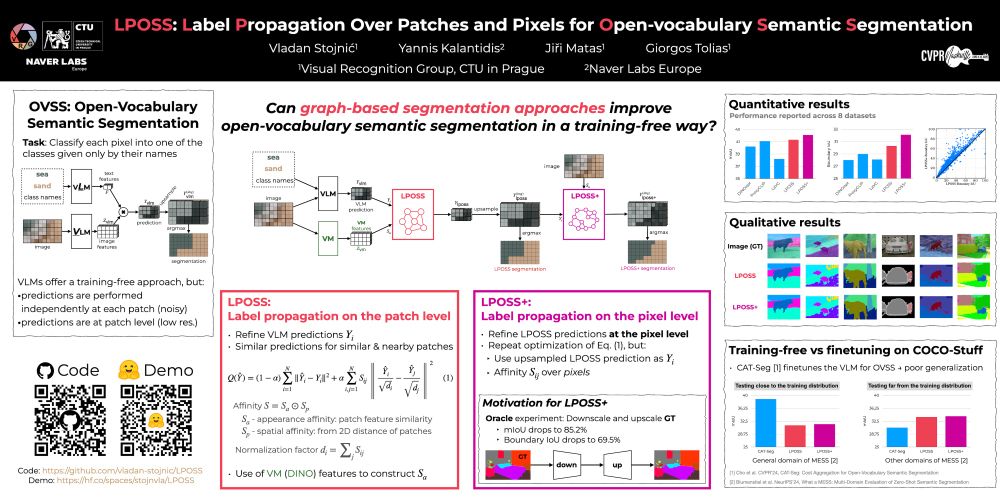

We show how graph-based label propagation can be used to improve weak, patch-level predictions from VLMs for open-vocabulary semantic segmentation.

📅 June 13, 2025, 16:00 – 18:00 CDT

📍 Location: ExHall D, Poster #421

Fri 10:30-12:30 A Dataset for Semantic Segmentation in the Presence of Unknowns

Fri 16:00-18:00 LOCORE: Image Re-ranking with Long-Context Sequence Modeling

📅 Sat 14/6, 10:30-12:30

📍 Poster #395, ExHall D

The 2nd CEGIS workshop on visual generative models evaluation is back at #ICCV2025!!

🌴 Deadline: June 26th 2025

📝 Submission site: cmt3.research.microsoft.com/CEGIS2025

🏁 Check details at: sites.google.com/view/cegis-w...

See you in Honolulu!

Submit your contributions:

- Deadline: June 26th 2025

- Notification: July 10th, 2025

- Camera-ready: August 18th, 2025

See you in Honolulu!

sites.google.com/view/cegis-w...

@iccv.bsky.social

We will now feature a single submission track with new submission dates.

📅 New submission deadline: June 21, 2025

🔗 Submit here: cmt3.research.microsoft.com/ILRnG2025

🌐 More details: ilr-workshop.github.io/ICCVW2025/

#ICCV2025

Submit your in-proceedings papers by June 7, or out-of-proceedings by June 30.

See you in Honolulu! 🏝️

#iccv2025 @iccv.bsky.social

7th Instance-Level Recognition and Generation (ILR+G) Workshop at @iccv.bsky.social

📍 Honolulu, Hawaii 🌺

📅 October 19–20, 2025

🌐 ilr-workshop.github.io/ICCVW2025/

in-proceedings deadline: June 7

out-of-proceedings deadline: June 30

#ICCV2025

www.nature.com/articles/s44...

#Internship #CV

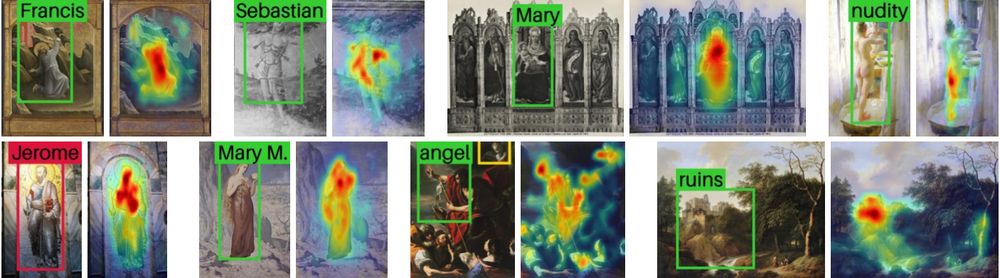

Bear with me for a moment: Imagine you train a model to classify photos, but you need it to generalize to paintings 🎨.

You only have data from photos, and the target domain is inaccessible due to cost or legal rights.

You train many models and you validate on the source domain.

by @timnitgebru.bsky.social and Remi Denton

happy happy happy to introduce NADA, our latest work on object detection in art! 🎨

with amazing collaborators:

@patrick-ramos.bsky.social, @nicaogr.bsky.social, Selina Khan, Yuta Nakashima

1/