@neuralreckoning.bsky.social & I explore this in our new preprint:

doi.org/10.1101/2025...

🤖🧠🧪

🧵1/9

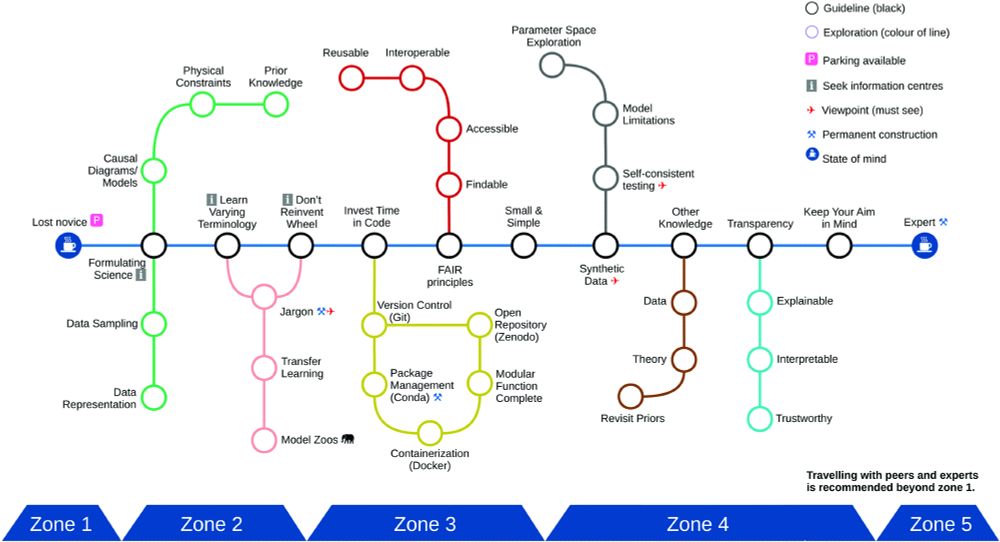

Nine other research fellows from @imperial-ix.bsky.social and I use AI methods in domains ranging from plant biology (🌱) to neuroscience (🧠) and particle physics (🎇).

Together we suggest 10 simple rules @plos.org 🧵

doi.org/10.1371/jour...

arxiv.org/abs/2507.16043

Surrogate gradients are popular for training SNNs, but some have questioned whether they can really learn complex temporal spike codes. TLDR: we tested this, and yes, they can! 🧵👇

🤖🧠🧪

Blog: gabrielbena.github.io/blog/2025/be...

Thread: bsky.app/profile/sola...

What if we could train neural cellular automata to develop continuous universal computation through gradient descent?! We have started to chart a path toward this goal in our new preprint:

arXiv: arxiv.org/abs/2505.13058

Blog: gabrielbena.github.io/blog/2025/be...

🧵⬇️

arxiv.org/abs/2001.10605