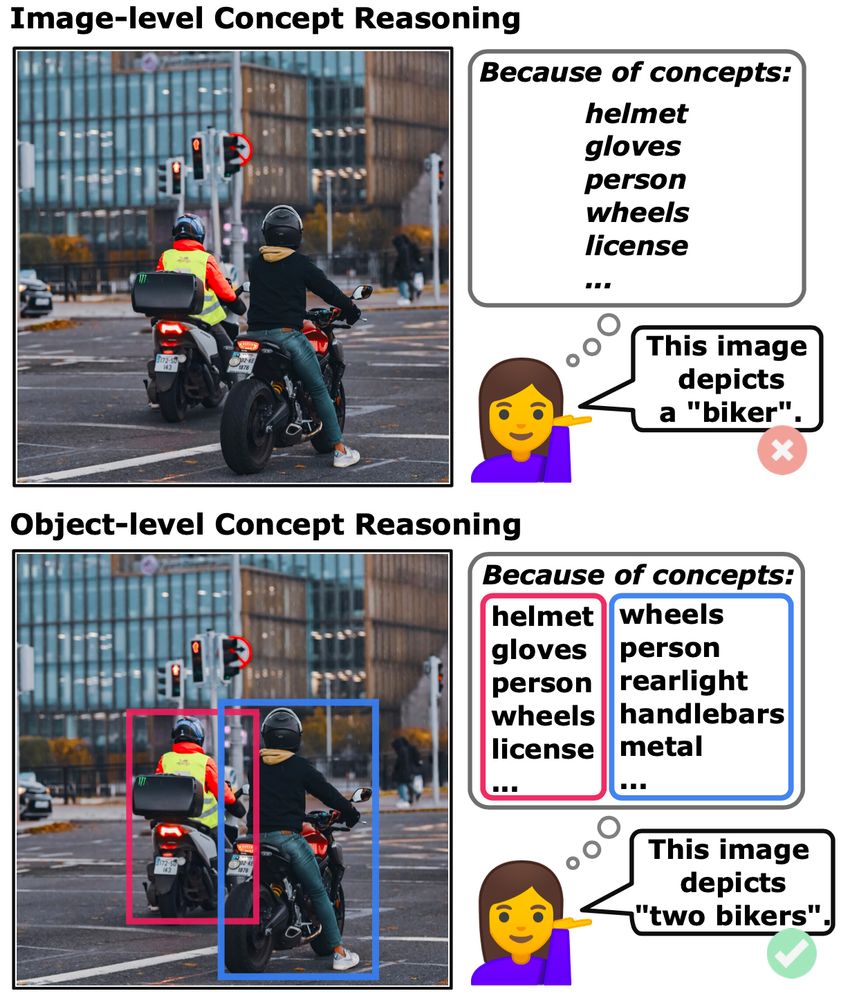

At ICML’s Actionable Interpretability Workshop, we present Neural Concept Verifier—bringing Prover–Verifier Games to concept space.

📅 Poster: Sat, July 19

📄 arxiv.org/abs/2507.07532

#ICML2025 #XAI #NeuroSymbolic

📄 arxiv.org/abs/2505.244...

#AI #XAI #NeSy #CBM #ML

We test Vision-Language Models on classic visual puzzles—and even simple concepts like “spiral direction” or “left vs. right” trip them up. Big gap to human reasoning remains.

📄 arxiv.org/pdf/2410.19546

Lukas Helff @ingaibs.bsky.social @wolfstammer.bsky.social @devendradhami.bsky.social @c-rothkopf.bsky.social @kerstingaiml.bsky.social ! ✨

arxiv.org/abs/2402.06434

-> we introduce continual confounding + the ConCon dataset, where confounders over time render continual knowledge accumulation insufficient ⬇️

Feel free to take a look :) tuprints.ulb.tu-darmstadt.de/29712/

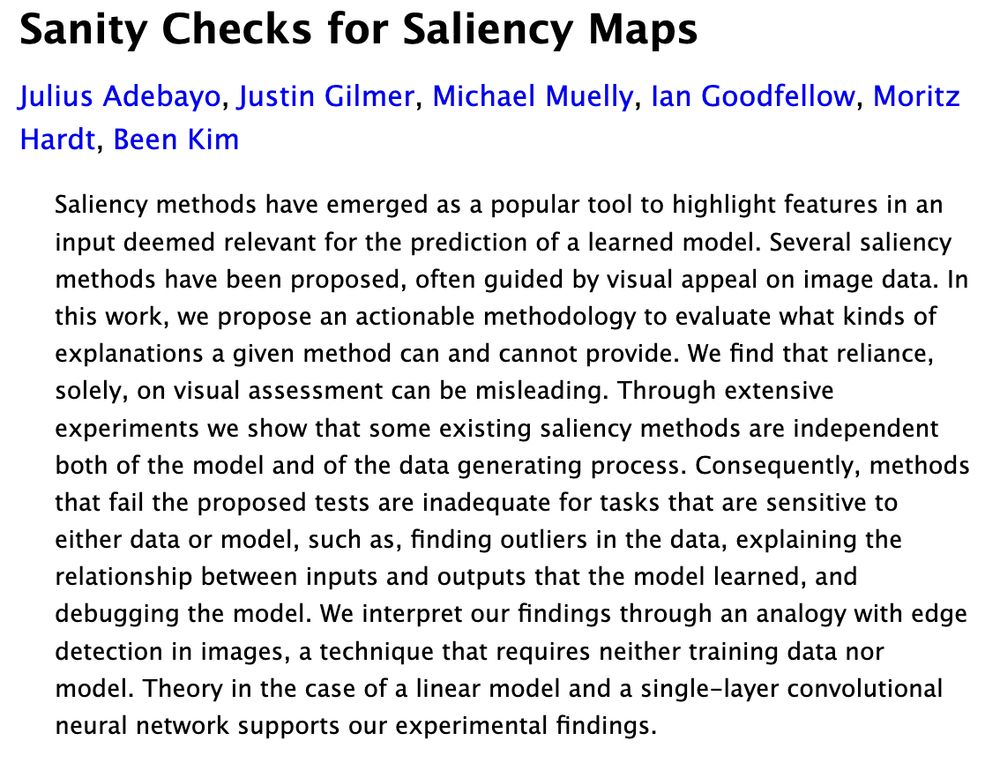

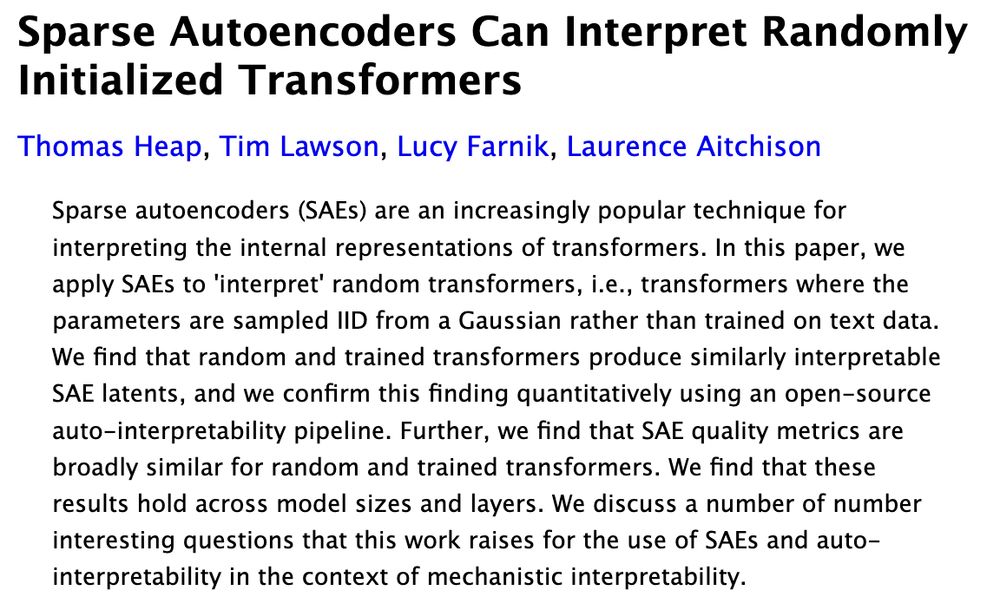

2025: SAEs give plausible interpretations of random weights, triggering skepticism and ...

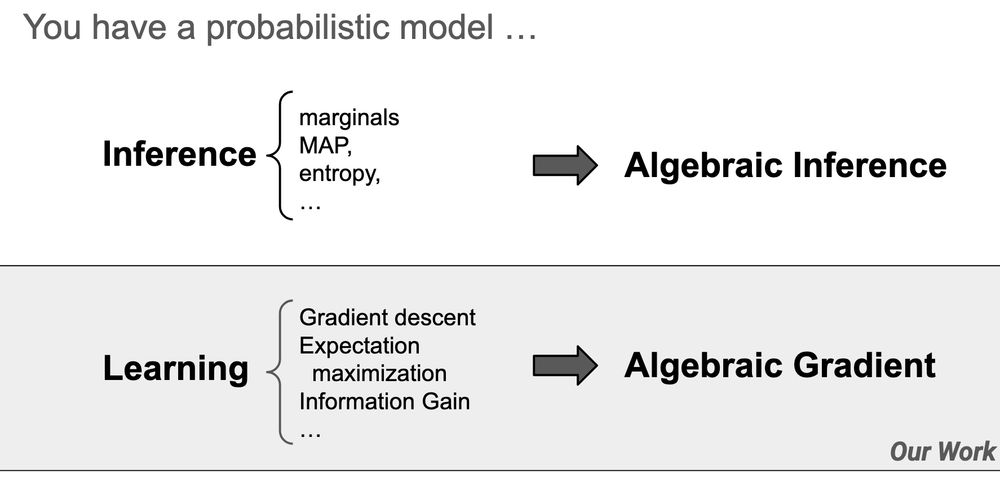

Come to my #AAAI2025 oral tomorrow (11:45, Room 119B) to learn more.
