Evaluates LLMs for breakfast, preaches AI usefulness all day long at ellamind.com.

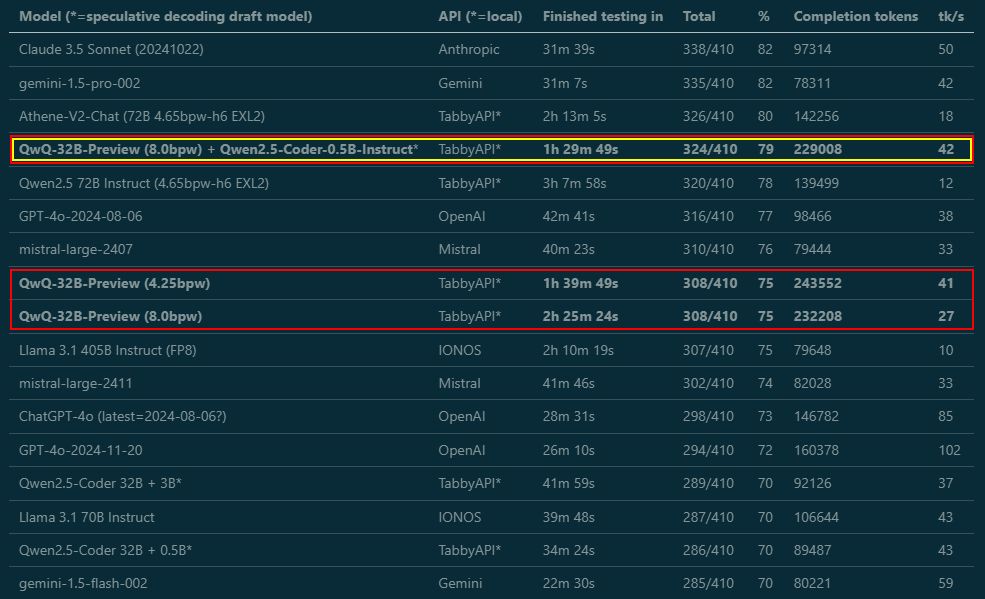

A few takeaways stood out, especially for those interested in local deployment and performance trade-offs:

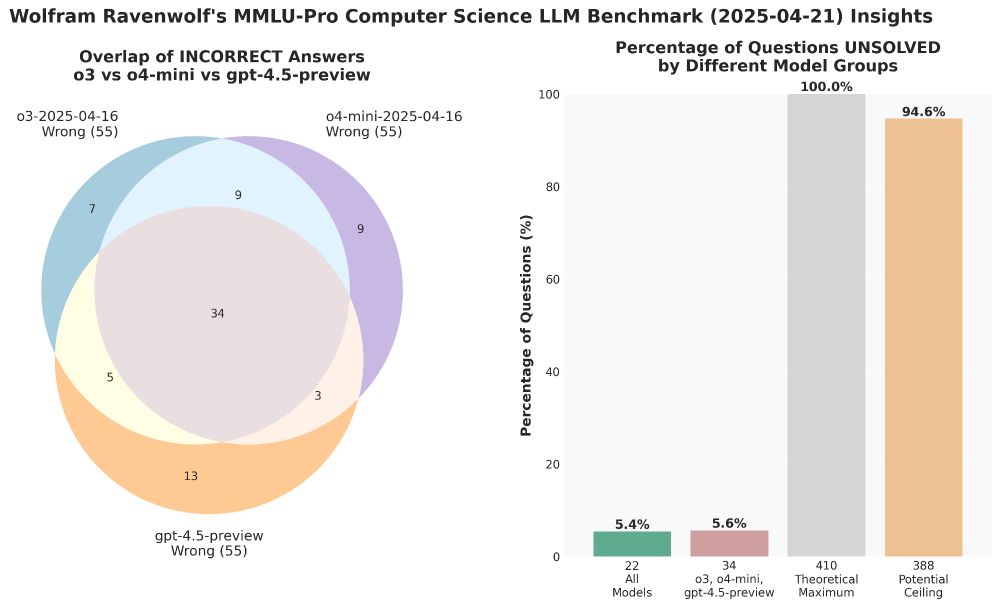

Definitely unexpected to see all three OpenAI top models get the exact same, top score in this benchmark. But they didn't all fail the same questions, as the Venn diagram shows. 🤔

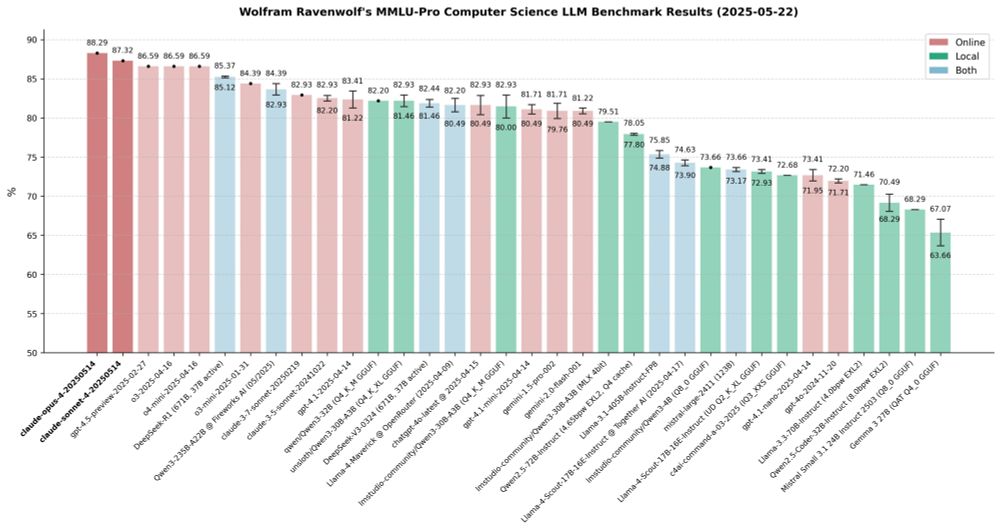

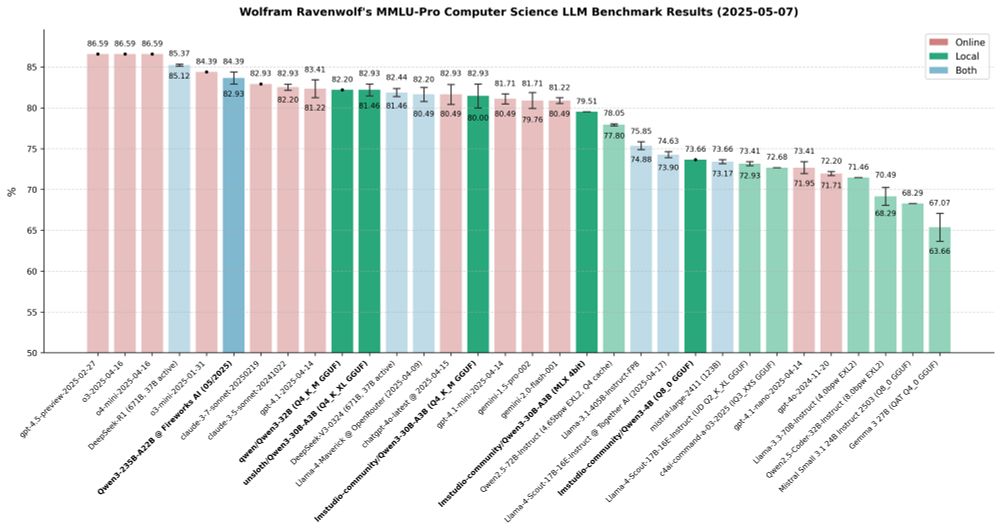

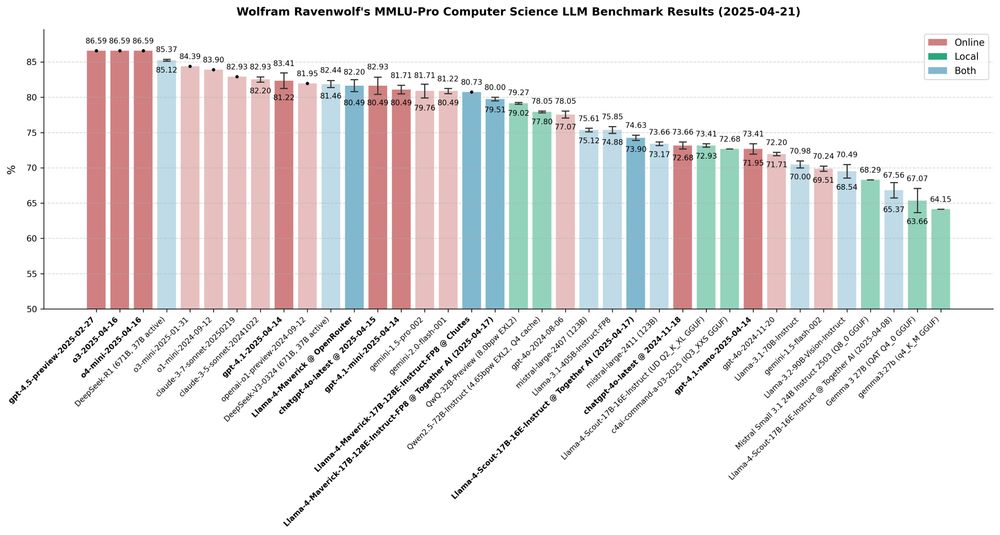

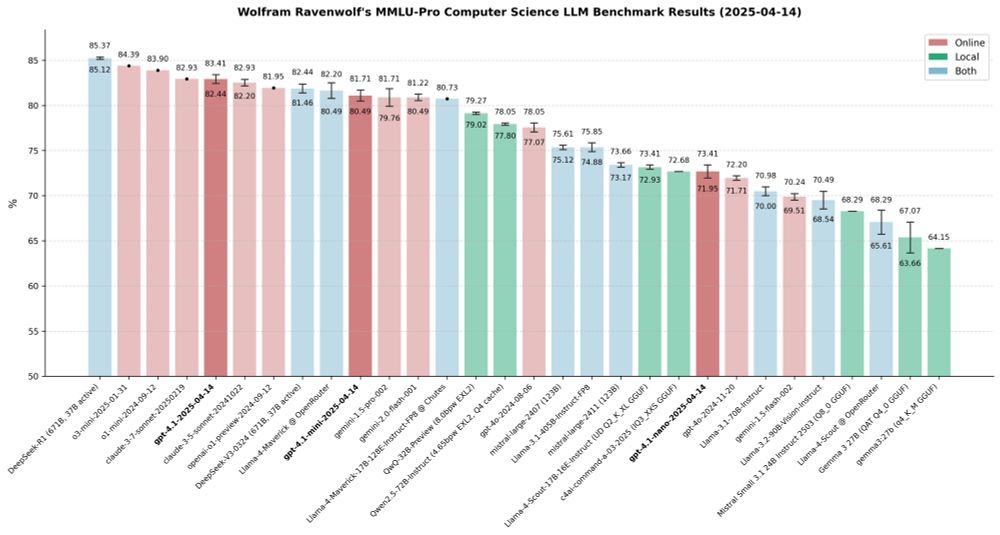

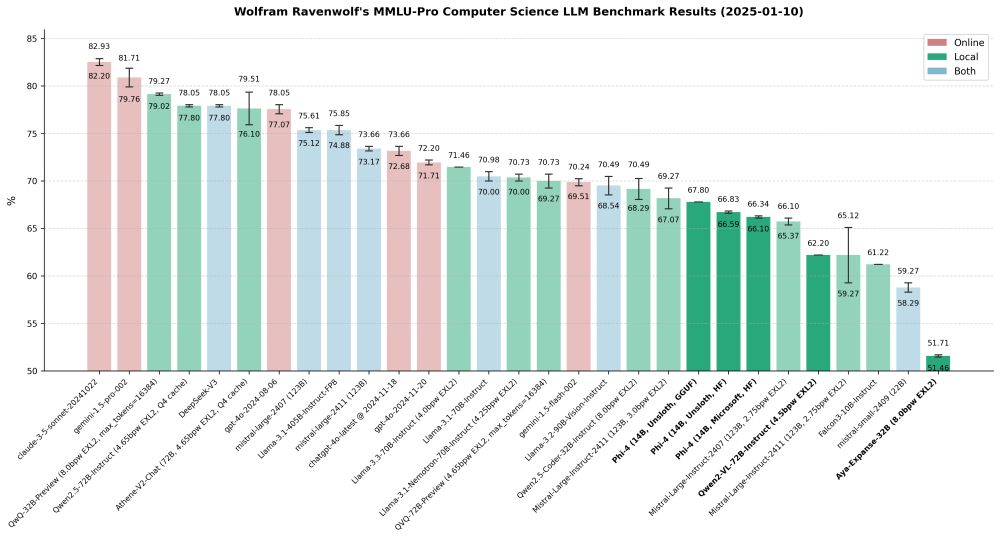

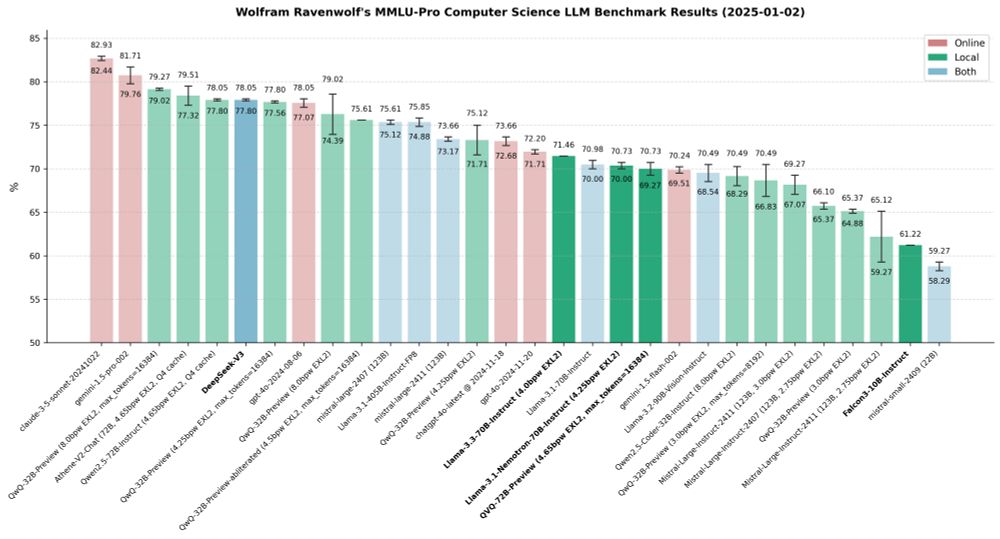

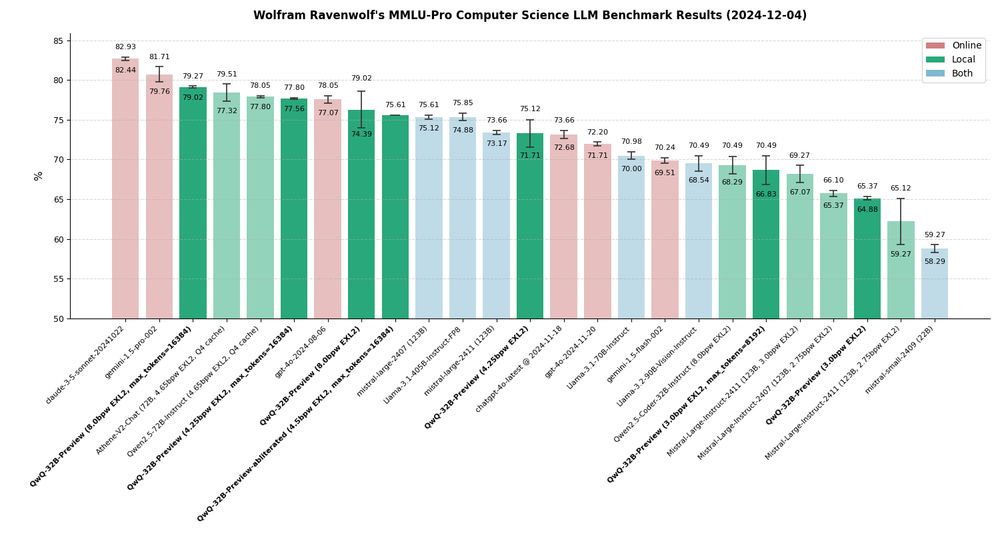

Here's how these three LLMs compare to an assortment of other strong models, online and local, open and closed, in the MMLU-Pro CS benchmark:

More details here:

huggingface.co/blog/wolfram...

Thank you all for being part of this incredible journey - friends, colleagues, clients, and of course family. 💖

May the new year bring you joy and success! Let's make 2025 a year to remember - filled with laughter, love, and of course, plenty of AI magic! ✨

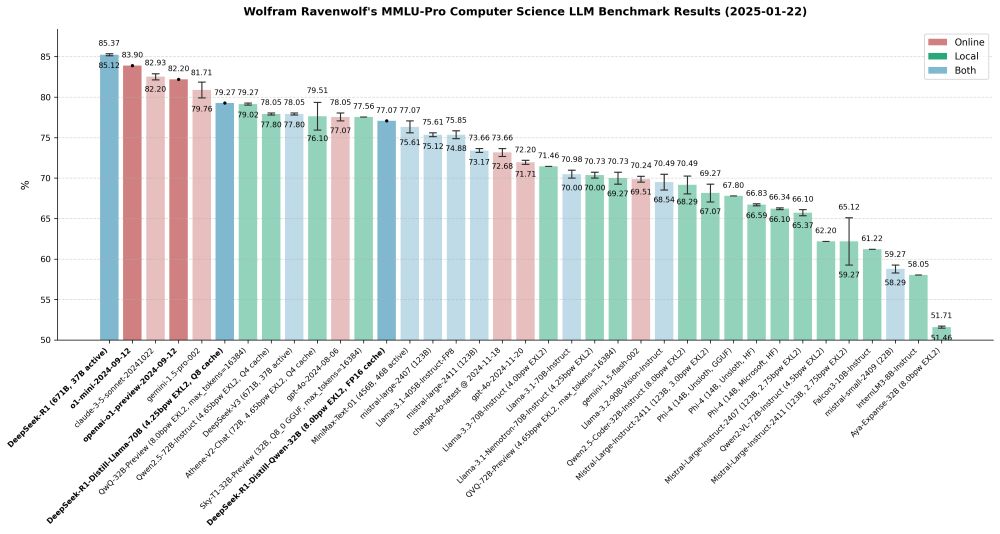

I've seen 2K max context and 128 max new tokens on too many models that should have much higher values. QwQ especially needs room to think.
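A minimal sketch of a sanity check for those two settings before running a benchmark. The function name and both thresholds are hypothetical and picked only for illustration; sensible minimums depend on the model and the task.

```python
# Hypothetical minimums for illustration only; not values from the post.
MIN_CONTEXT_TOKENS = 8192
MIN_NEW_TOKENS = 2048


def check_generation_limits(max_context: int, max_new_tokens: int) -> list[str]:
    """Return warnings for generation limits that look too small."""
    warnings = []
    if max_context < MIN_CONTEXT_TOKENS:
        warnings.append(
            f"max context {max_context} is below {MIN_CONTEXT_TOKENS} tokens"
        )
    if max_new_tokens < MIN_NEW_TOKENS:
        warnings.append(
            f"max new tokens {max_new_tokens} is below {MIN_NEW_TOKENS}; "
            "reasoning models may be cut off mid-thought"
        )
    if max_new_tokens >= max_context:
        warnings.append("max new tokens leaves no room for the prompt")
    return warnings


# The defaults mentioned above trigger both size warnings.
for warning in check_generation_limits(max_context=2048, max_new_tokens=128):
    print(warning)
```

Running a check like this before a benchmark makes it harder for a truncated answer to be scored as a wrong one.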

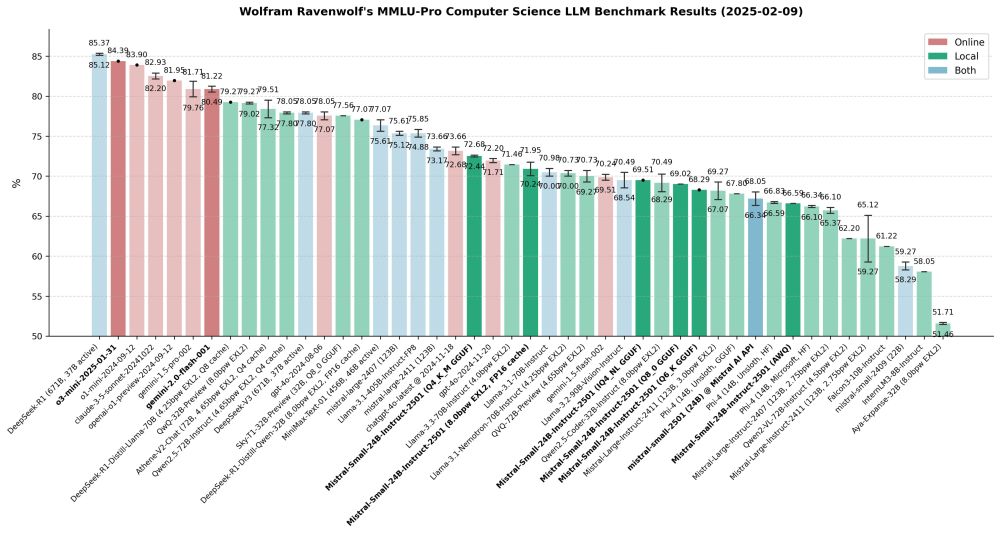

I'm still working on the detailed analysis, but here's the main graph that accurately depicts the quality of all tested models.

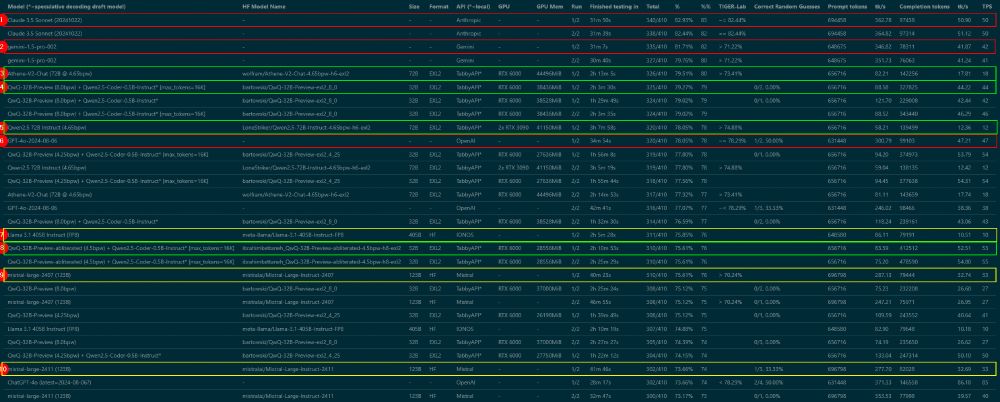

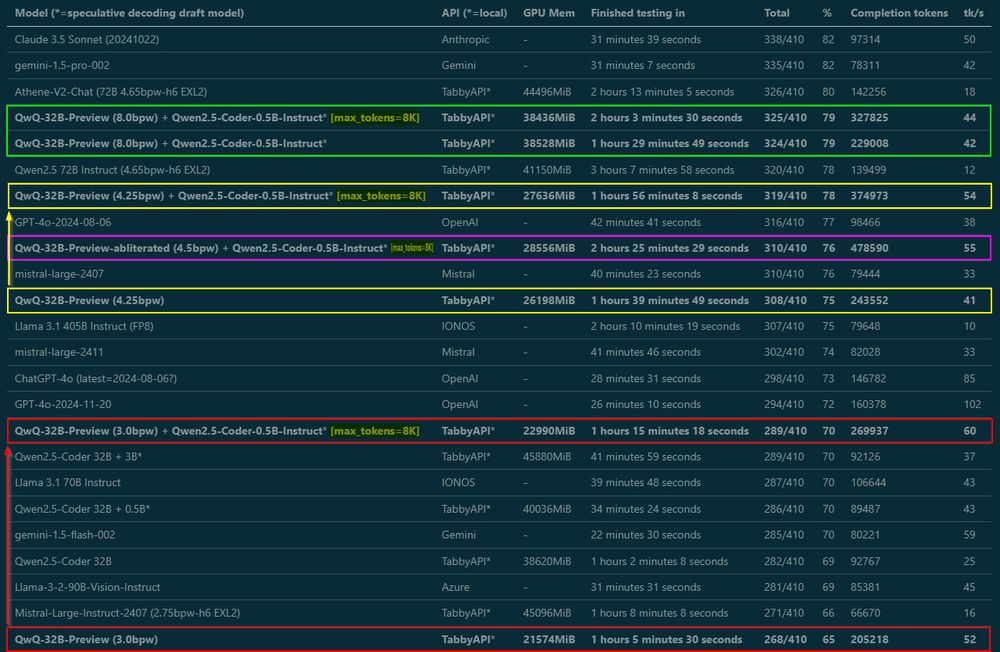

I've completed ANOTHER round to ensure accuracy - yes, I have now run ALL the benchmarks TWICE! While still compiling the results for a blog post, here's a sneak peek featuring detailed metrics and Top 10 rankings. Stay tuned for the complete analysis.

🎙️ Voice assistants

🤖 ChatGPT, Claude, Google

💻 Local LLMs

📱 Visual assistant on tablet & watch

huggingface.co/blog/wolfram...

#SmartHome #AI

Glasses are just the perfect form factor for AI usage – so much better than having to whip out and use a phone, and more versatile than a watch on one's wrist.
