https://wimmerth.github.io

In our experiments, we show that it matches encoder-specific upsamplers and that trends between different model sizes are preserved.

Importantly, the upsampled features also stay faithful to the input feature space, as we show in experiments with pre-trained DINOv2 probes.

Together with window attention-based upsampling, a new training pipeline and consistency regularization, we achieve SOTA results.

✨ AnyUp: Universal Feature Upsampling 🔎

Upsample any feature - really any feature - with the same upsampler, no need for cumbersome retraining.

SOTA feature upsampling results while being feature-agnostic at inference time.

🌐 wimmerth.github.io/anyup/

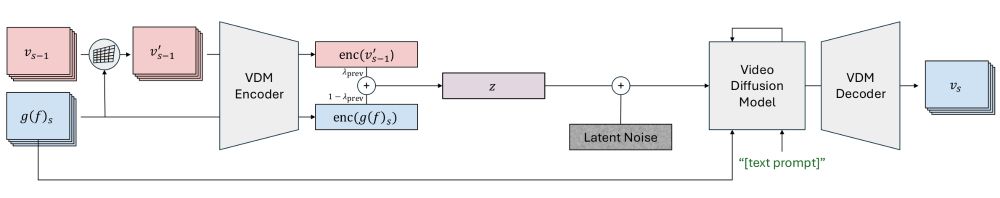

With limited resources, we can't fine-tune or retrain a video diffusion model (VDM) to be pose-conditioned. Thus, we propose a zero-shot technique to generate more 3D-consistent videos!

🧵⬇️

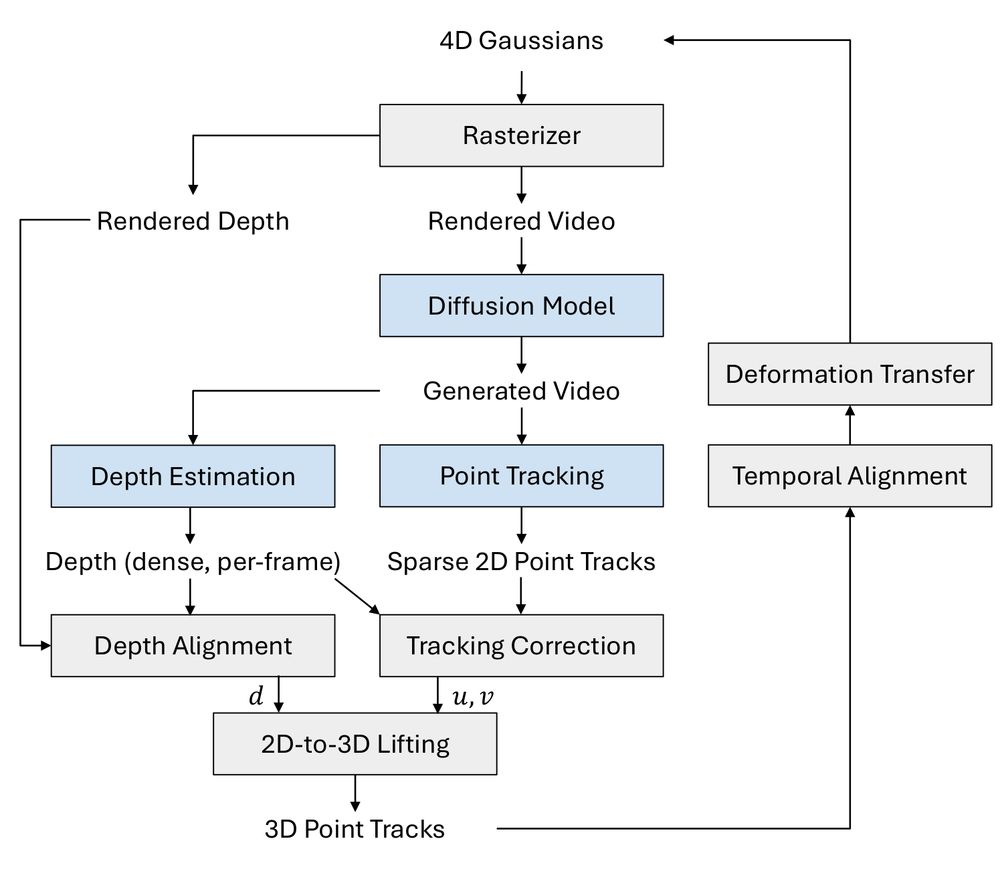

Instead, we propose to employ several pre-trained 2D models to directly lift motion from tracked points in the generated videos to 3D Gaussians.

🧵⬇️

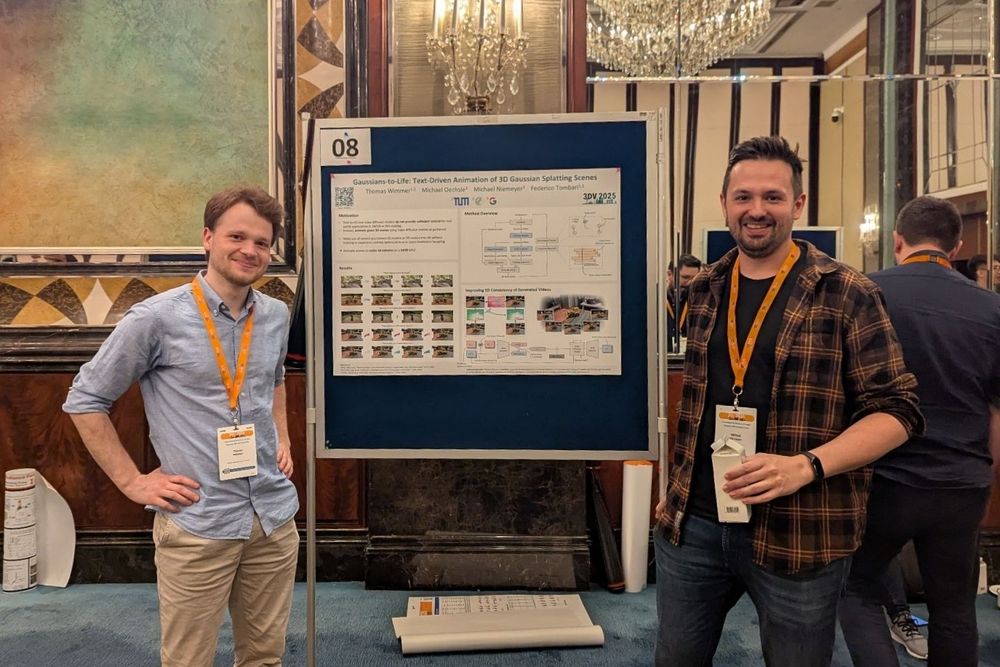

This work was a great collaboration with @moechsle.bsky.social, @miniemeyer.bsky.social, and Federico Tombari.

🧵⬇️

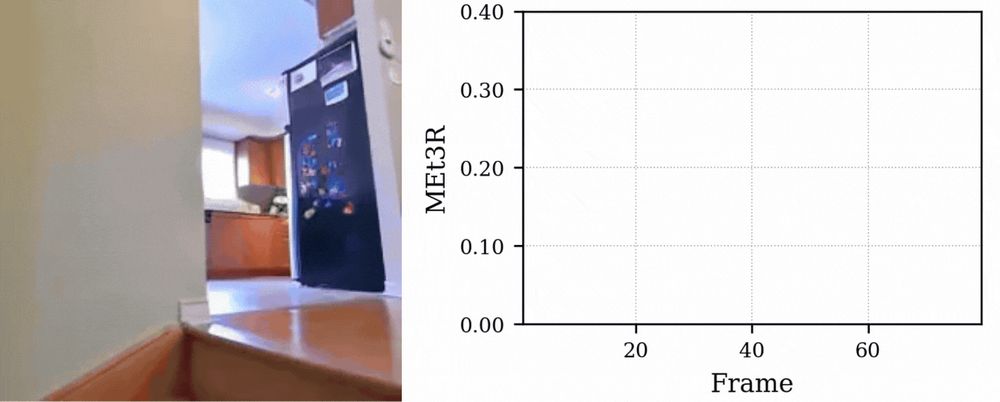

We realized that we often lack metrics for comparing the quality of video and multi-view diffusion models. Quantifying multi-view 3D consistency across frames is especially difficult.

But not anymore: Introducing MET3R 🧵
