William Ngiam | 严祥全

@williamngiam.github.io

Cognitive Neuroscientist at Adelaide University | Perception, Attention, Learning and Memory Lab (https://palm-lab.github.io) | Open Practices Editor at Attention, Perception, & Psychophysics | ReproducibiliTea | http://williamngiam.github.io

For the Feyerabend anarchists:

November 9, 2025 at 4:39 AM

me: finding a recent and relevant Chaz phil vis paper that I had missed through zohran-posting

November 9, 2025 at 3:37 AM

As per Schurgin et al. (2020), we find that the cognitive representation underlying all tasks does not match the physical stimulus space. But we also find that the representation differs between the similarity comparison task and both reproduction tasks; similarity is not the basis for working memory. /7

November 3, 2025 at 7:34 AM

We conducted a follow-up experiment comparing static discs to moving discs; we wanted to make sure the canonical CDA set-size effect replicated in our paradigm where we cued targets first. And it does when the discs are static, and we also replicated the lack of color load effect for moving discs!

September 18, 2025 at 2:56 PM

A surprisingly similar result! While the CDA amplitude was slightly elevated overall, there was no clear effect of working memory load. That is, the CDA appeared to be largely driven by the attentional tracking load – one or two discs.

September 18, 2025 at 2:56 PM

Our result? When subjects were only required to complete the MOT task (and could ignore the colors), the CDA was as expected. We observed a sustained difference in the CDA amplitude when tracking one disc compared to tracking two discs. But what about tracking and remembering?

September 18, 2025 at 2:56 PM

We combined MOT with the whole-report WM task – subjects had to track one or two moving discs while also remembering the colors of those discs. There were either one or two colors per target disc, so either two or four colors to remember in total. We also had subjects do MOT-only for comparison.

September 18, 2025 at 2:56 PM

I had a fantastic experience presenting at Raising the Bar last night! It was nerve-wracking to speak about research with no slides, but it meant I really connected with the audience on the current discourse around the perceived impact of digital technology on attention spans and brain function.

August 6, 2025 at 11:46 AM

Does tracking and remembering the colour of multiple moving objects share a common mechanism? How might the encoded information be represented in the mind and brain? See my talk tomorrow morning to hear about a couple of EEG studies looking at this (see task below)!

@expsyanz.bsky.social #EPC2025

June 18, 2025 at 1:04 PM

In the first iteration of the session, @styrkowiec.bsky.social and I shared the very beginnings of an experiment idea testing how information would be organised in working memory when stimuli were moving and changing at the same time. We got a useful signal – that others were interested in the idea!

May 1, 2025 at 12:11 AM

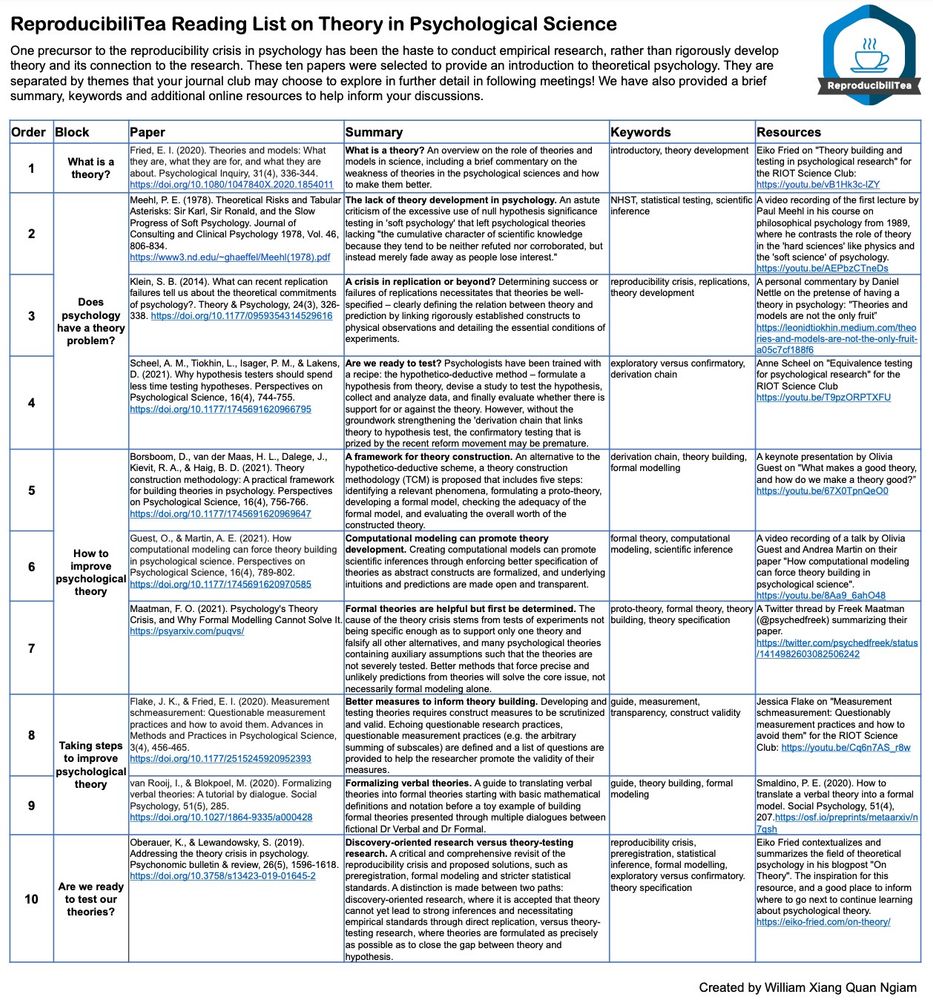

I created this reading list on theory in psychology a while back, so it probably needs an update! Would love any recommendations for papers to include – maybe I can turn this into a syllabus of sorts.

PDF of this reading list here: williamngiam.github.io/reading_list...

April 24, 2025 at 1:23 PM

Embarrassing as a vision scientist to upload an SVG of the logo, only for it to come out blurry. Hopefully this one is a bit nicer to look at.

March 14, 2025 at 12:21 AM

For the #visionscience folks, the pre-data poster session is back at VSS for its third year. It only makes sense to get feedback at the conference at a point in time where you can actually action it! I think it is a great opportunity for ECRs to be involved in VSS as well!

March 14, 2025 at 12:15 AM

I feel like this will give ECRs (both postdoctoral researchers and early-career faculty) a great deal of insecurity, bouncing around from academic job to research job and back again, from project to project. Would like to see more explanation of how the following is achieved:

February 27, 2025 at 12:42 PM

I don't think this is "radical" or is in opposition with institutional structures. Reform won't come in one fell swoop – I hope that with enough individual and local community action, there will be a strong resonance that tumbles existing structures or forces them to evolve to remain standing.

January 23, 2025 at 11:24 PM

A new update to quokka – my open-source, in-browser, free-to-use qualitative coding ShinyApp! In the new 'sorting' tab, users who have finished analysing can organise their codes into themes (or subthemes or categories based on the approach) with a simple drag-and-drop interface. #CAQDAS

January 18, 2025 at 5:04 AM

Actually Google, I did not mean that.

October 7, 2024 at 4:17 AM

Everything is spot on, even down to the quotes. And if anyone wants to know how I think (some of the) Open Science principles can transform psychological science for the better, I will happily talk for hours on end.

Check out my Veggie ID! You can create yours at sophie006liu.github.io/vegetal/

October 7, 2024 at 3:43 AM

I thoroughly enjoyed "Why We Remember" by @charan-neuro.bsky.social - it was fun to follow the weaving of personal anecdotes with our current understanding of (long-term) memory. (The Australian book cover changed the byline and lacks the distinctive brain-shaped cloud though...)

September 28, 2024 at 8:25 AM

Really cool work! Thyer et al. (fig below) shows classification from 4 to 2 with perceptual groups (wrench heads facing each other, forming illusory bars). So why isn't that kind of pattern observed with a retro-cue in the linked study? I suspect it's not an either-or between load and selection /1

July 7, 2024 at 4:44 AM

I have spent a wonderful 5 years in Chicago, learning with the Awh/Vogel Lab. It's sad to be leaving so many brilliant people who have had a positive impact on me.

Tomorrow, I return to Australia and embark on the journey of becoming a faculty member and starting the PALM lab at the University of Adelaide! 🌴

May 28, 2024 at 2:53 AM

Piotr @styrkowiec.bsky.social will be presenting our work on the CDA in a novel dual #workingmemory and object tracking task, Tuesday morning at #CNS2024. We find that the CDA is dominated by the number of moving targets, not the load of to-be-remembered colors www.cogneurosociety.org/poster/?id=650

April 12, 2024 at 7:24 PM

University of Chicago ReproTea starting our meetings for the quarter by enjoying an instant ramen party while watching @priyasilverstein.com and their fantastic RIOT Science Club talk on "Easing into Open Science"! Still a relevant and helpful guide! @reproducibilitea.bsky.social

March 28, 2024 at 3:17 AM

Last year, @styrkowiec.bsky.social and I presented our own pre-data poster – a study of working memory for moving objects and the contralateral delay activity. We had a lot of helpful discussions that led to a more impactful design – the results are going to be presented in a talk this year!

March 23, 2024 at 5:31 PM
