Code: github.com/williamgilpi...

Explanatory Website & Code demo: williamgilpin.github.io/illotka/demo...

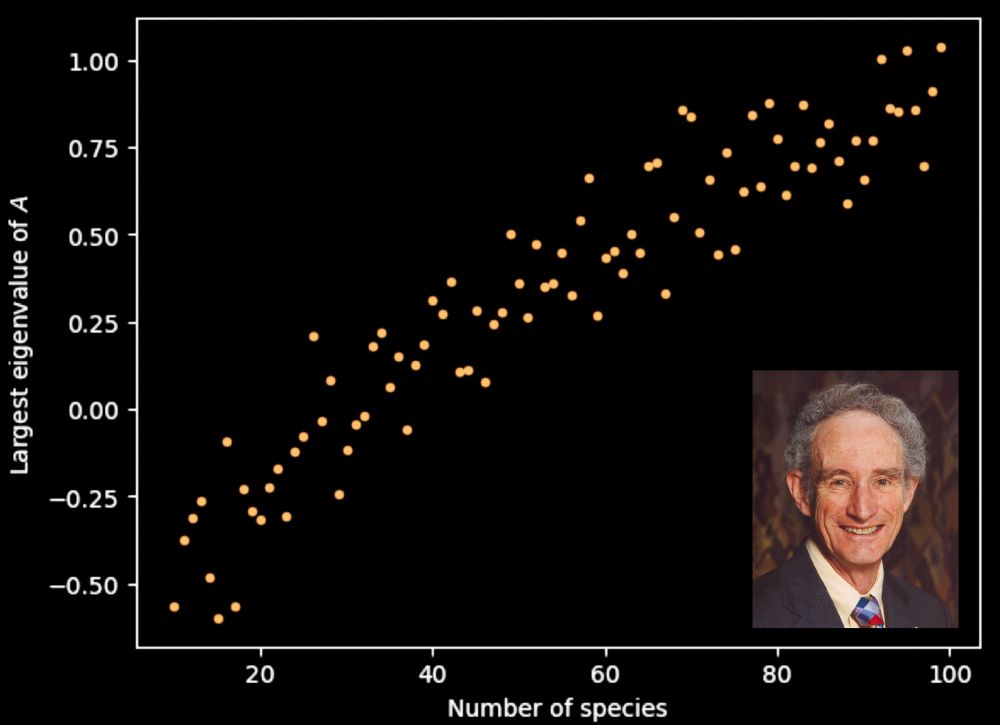

Paper here: arxiv.org/abs/2505.13755

Study led by Jeff Lai and Anthony Bao