Postdoctoral fellow at Yale University

https://www.vladchituc.com/

5/7

And the same holds true for immorality.

(Also: this project has my favorite joke that I've ever snuck into a paper).

3/7

@cognitionjournal.bsky.social.

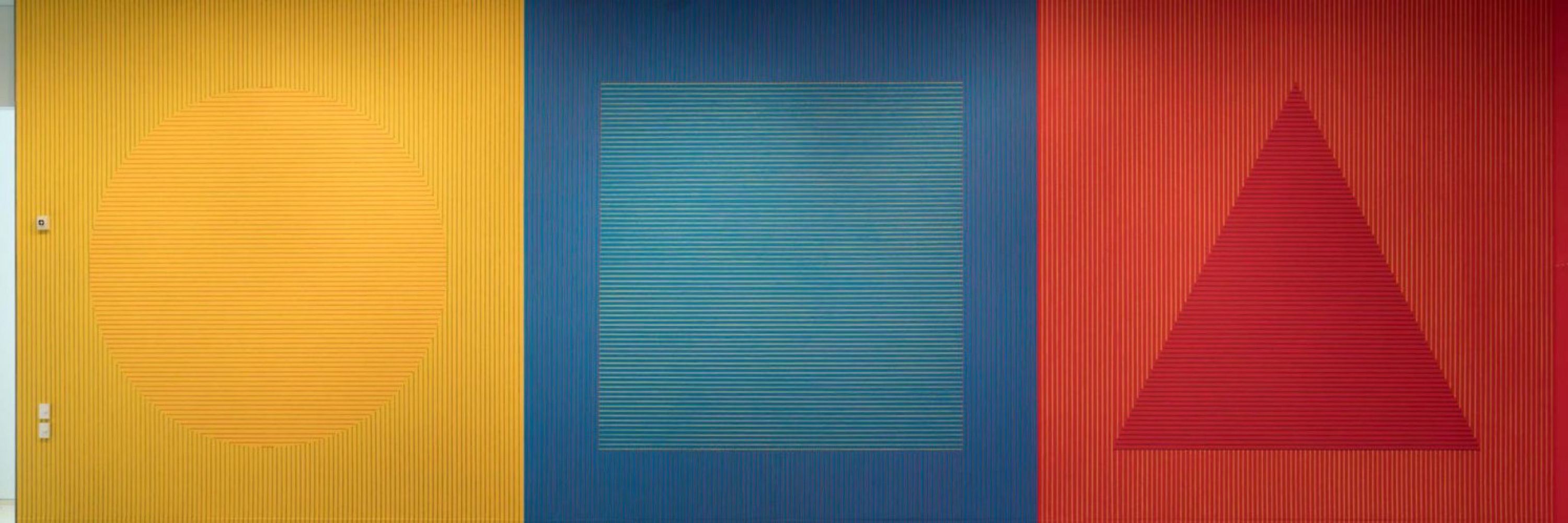

Moral psychologists almost always use self-report scales to study moral judgment. But there's a problem: the meaning of these scales is inherently relative.

A 2 min demo (and a short thread):

1/7

"Modern chess algorithms are better than Deep Blue with less computational power! That's exponential growth! Hard takeoff baby!!"

This is how fucking dumb you sound:

But reader—this is dumb. A distinction without a difference.

Scaling laws ARE the wall.

This is fully and entirely in context. The book was very gay.

(12/14)

(10/14)

(9/14)

(7/14)

(5/14)

(4/14)

vladchituc.substack.com/p/you-dont-h...

(I actually spent like 5 hours typesetting this quote for the beginning of my dissertation about adapting the methods of sensory psychophysics, and it's genuinely one of my favorite parts of my dissertation).
