vishaal27.github.io

We construct a stable & reliable set of evaluations (StableEval), inspired by inverse-variance weighting, to prune out unreliable evals!
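
For readers new to inverse-variance weighting, here is a minimal sketch of the general idea, not the paper's actual StableEval procedure. It assumes each eval has been scored over several repeated runs (e.g. different seeds); the eval names, scores, and the `keep` cutoff are all hypothetical.

```python
from statistics import mean, variance


def inverse_variance_weights(scores_by_eval, eps=1e-12):
    """Weight each eval by 1 / (run-to-run variance), normalised to sum to 1.

    scores_by_eval maps an eval name to a list of scores from repeated runs.
    eps guards against division by zero when an eval has zero variance.
    """
    raw = {name: 1.0 / (variance(s) + eps) for name, s in scores_by_eval.items()}
    total = sum(raw.values())
    return {name: w / total for name, w in raw.items()}


def prune_unstable(scores_by_eval, keep):
    """Return the `keep` eval names with the lowest run-to-run variance."""
    ranked = sorted(scores_by_eval, key=lambda name: variance(scores_by_eval[name]))
    return ranked[:keep]


def weighted_score(scores_by_eval, weights):
    """Aggregate the per-eval mean scores using the given weights."""
    return sum(weights[name] * mean(scores_by_eval[name]) for name in weights)


# Hypothetical scores for three evals, each measured over three runs.
runs = {
    "eval_a": [0.70, 0.71, 0.69],  # stable across runs
    "eval_b": [0.40, 0.60, 0.50],  # noisy across runs
    "eval_c": [0.55, 0.56, 0.54],  # stable across runs
}

weights = inverse_variance_weights(runs)
stable = prune_unstable(runs, keep=2)
```

Noisy evals like the hypothetical `eval_b` end up with a tiny weight, and a hard cutoff drops them entirely.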

Outperforming strong baselines including Apple's MobileCLIP, TinyCLIP and @datologyai.com CLIP models!

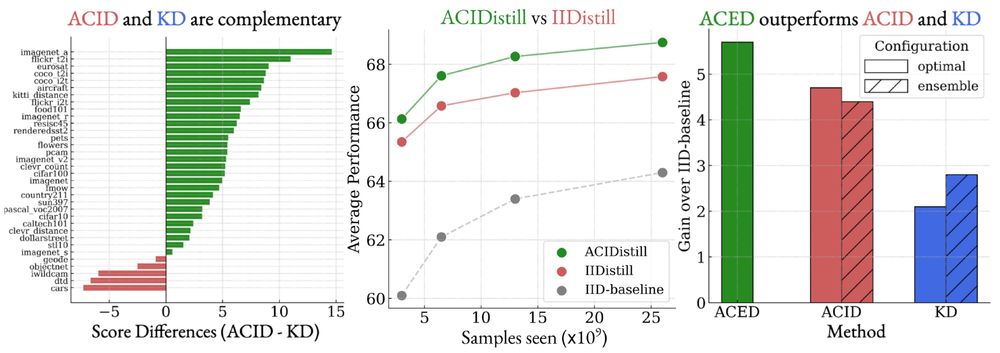

Further, ACID significantly outperforms KD as we scale up the reference/teacher sizes.

arxiv.org/abs/2411.18674

Smol models are all the rage these days & knowledge distillation (KD) is key for model compression!
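
As a quick refresher on KD, here is a minimal sketch of the classic soft-label distillation loss (temperature-softened KL divergence between teacher and student). This is the generic textbook formulation, not necessarily the KD baseline used in the paper; the logits and the temperature value are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # teacher targets
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


# Hypothetical logits for a 3-way prediction.
teacher = [2.0, 1.0, 0.0]
student = [0.0, 0.0, 0.0]
loss = kd_loss(student, teacher)
```

The loss is zero when the student matches the teacher exactly and grows as their softened distributions diverge.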

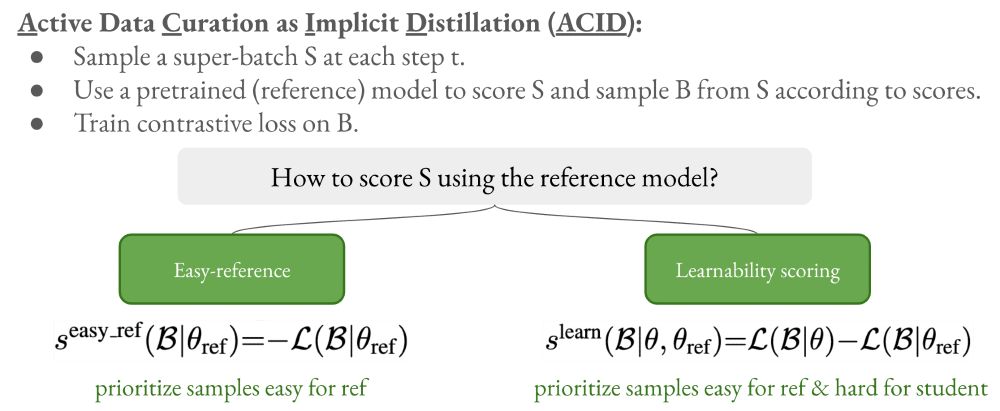

We show how data curation can effectively distill to yield SoTA FLOP-efficient {C/Sig}LIPs!!

🧵👇
