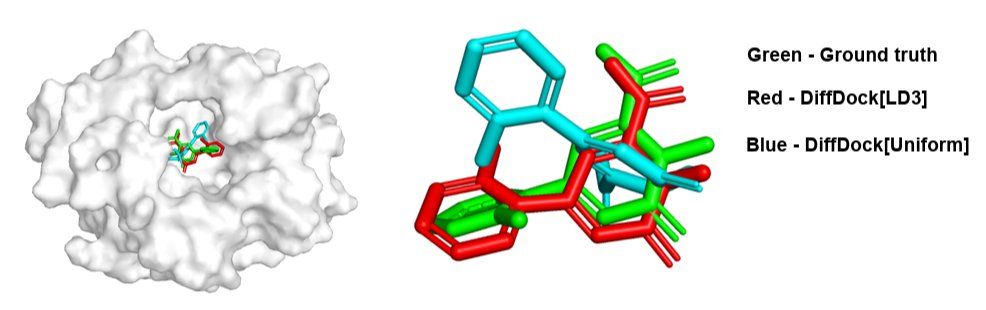

LD3 can be applied to diffusion models in other domains, such as molecular docking.

LD3 can be trained on a single GPU in under one hour. For smaller datasets like CIFAR-10, training can be completed in less than 6 minutes.

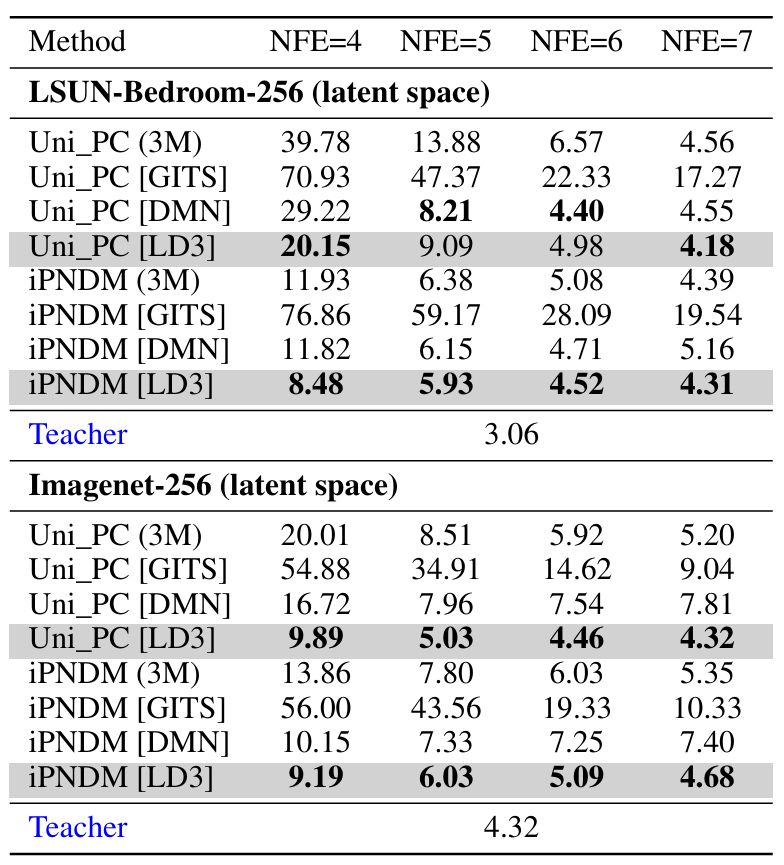

LD3 significantly improves sample quality.

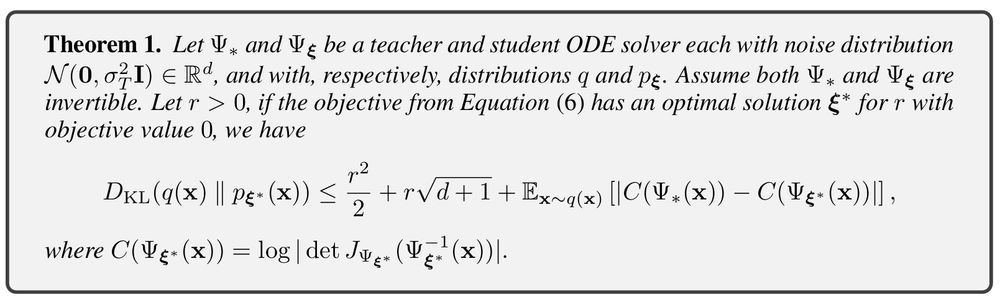

This surrogate loss is theoretically close to the original distillation objective, leading to better convergence and avoiding underfitting.

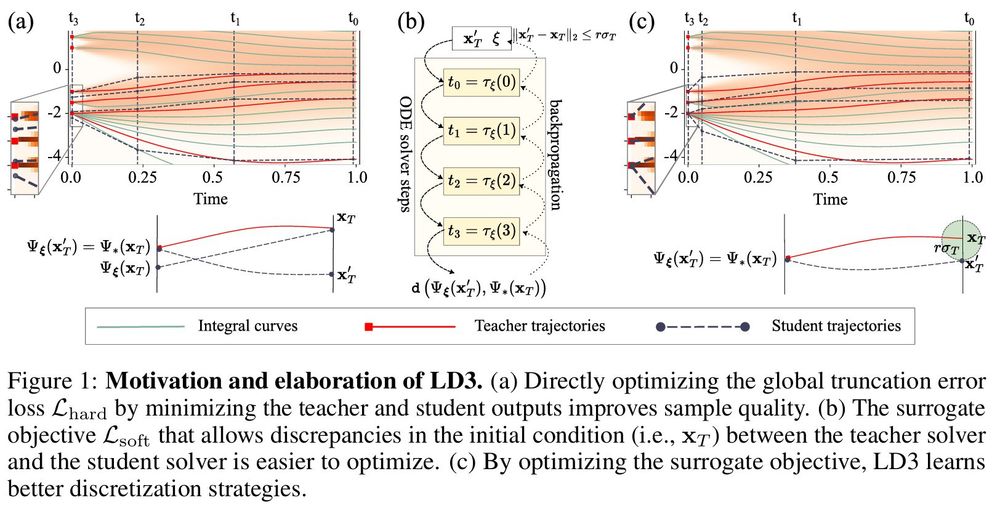

A potential problem with the student model is its limited capacity. To address this, we propose a soft surrogate loss, simplifying the student's optimization task.

LD3 uses a teacher-student framework:

🔹Teacher: Runs the ODE solver with small step sizes.

🔹Student: Learns optimal discretization to match the teacher's output.

🔹Training: Backpropagates through the ODE solver to refine the student's time steps.
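
The teacher-student loop above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration, not the LD3 implementation: the toy ODE `dx/dt = -2*t*x` stands in for a diffusion ODE, the solver is plain explicit Euler, the loss is a squared error, and the names `drift`, `euler_solve`, `grid_from_params`, and `train_grid` are hypothetical. To stay dependency-free, central finite differences stand in for backpropagation; a real setup would differentiate through the solver with an autodiff framework.

```python
import math

T_END = 2.0  # integration horizon of the toy problem (assumption)

def drift(x, t):
    # Toy ODE dx/dt = -2*t*x, a stand-in for a diffusion ODE (assumption).
    return -2.0 * t * x

def euler_solve(x0, times):
    # Explicit Euler over an arbitrary increasing time grid.
    x = x0
    for t0, t1 in zip(times[:-1], times[1:]):
        x = x + (t1 - t0) * drift(x, t0)
    return x

def grid_from_params(params):
    # Softmax turns free parameters into positive step sizes that sum to T_END,
    # so the grid stays increasing and keeps its endpoints during training.
    m = max(params)
    weights = [math.exp(p - m) for p in params]
    total = sum(weights)
    times = [0.0]
    for w in weights:
        times.append(times[-1] + T_END * w / total)
    return times

def student_loss(params, x0, target):
    # Squared distance between the student's output and the teacher's output.
    return (euler_solve(x0, grid_from_params(params)) - target) ** 2

def train_grid(num_steps=4, iters=500, lr=0.5, eps=1e-5, x0=1.0):
    # Teacher: the same solver run with 1000 small uniform steps.
    teacher = euler_solve(x0, [T_END * i / 1000 for i in range(1001)])
    params = [0.0] * num_steps  # student starts from a uniform grid
    initial = student_loss(params, x0, teacher)
    for _ in range(iters):
        grads = []
        for i in range(num_steps):
            # Central finite difference, standing in for backpropagation.
            hi = params[:i] + [params[i] + eps] + params[i + 1:]
            lo = params[:i] + [params[i] - eps] + params[i + 1:]
            diff = student_loss(hi, x0, teacher) - student_loss(lo, x0, teacher)
            grads.append(diff / (2 * eps))
        params = [p - lr * g for p, g in zip(params, grads)]
    return initial, student_loss(params, x0, teacher), grid_from_params(params)

if __name__ == "__main__":
    before, after, grid = train_grid()
    print("loss with uniform grid:", before)
    print("loss with learned grid:", after)
    print("learned time grid:", grid)
```

Only the time grid is trained here; the ODE itself (the pretrained model, in the diffusion setting) is left untouched, which is the sense in which the student learns a discretization without retraining the model.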

LD3 optimizes the time discretization for diffusion ODE solvers by minimizing the global truncation error, resulting in higher sample quality with fewer sampling steps.
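
One way to write this objective down, as a sketch only: the notation below is assumed for illustration and does not come from the thread. Let $\xi$ be the time grid, $\Phi$ one step of the ODE solver, $\hat{x}_N$ the solver's final output, and $x(t_N)$ the exact ODE solution at the final time (approximated in practice by a solver run with very small steps).

```latex
\xi = (t_0, t_1, \dots, t_N), \qquad
\hat{x}_{n+1} = \Phi(\hat{x}_n, t_n, t_{n+1}), \qquad
E(\xi) = \bigl\lVert \hat{x}_N - x(t_N) \bigr\rVert, \qquad
\xi^{\star} = \arg\min_{\xi} \; \mathbb{E}_{\hat{x}_0}\bigl[ E(\xi) \bigr]
```

The global truncation error $E(\xi)$ measures the accumulated error at the end of sampling, as opposed to the local error of a single step, so minimizing it targets the final sample directly.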

Diffusion models produce high-quality generations but are computationally expensive due to multi-step sampling. Existing acceleration methods either require costly retraining (distillation) or depend on manually designed time discretization heuristics. LD3 changes that.
