Prev: Cambridge CBL, St John's College, ETH Zürich, Google Brain, Microsoft Research, Disney Research.

https://fortuin.github.io/

What better way to celebrate it than to speak at the ELLIS UnConference in Bad Teinach?

@bertrand-sharp.bsky.social, and Stephan Günnemann

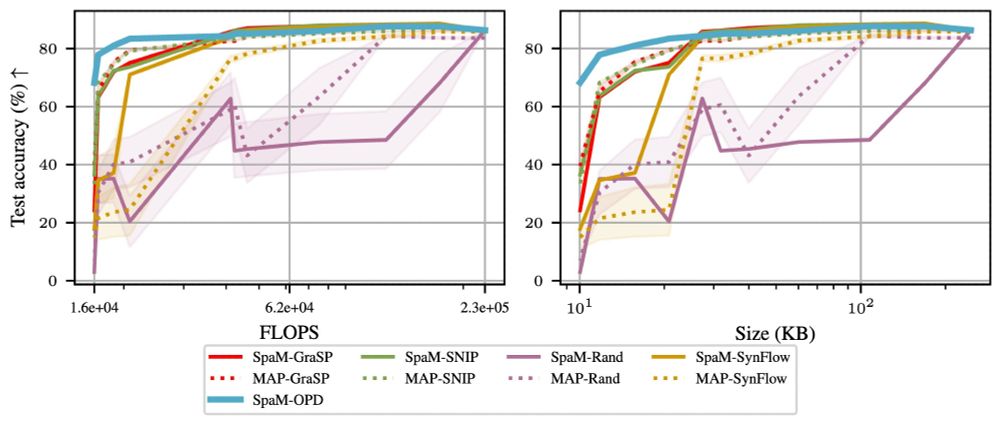

☝️ We showcase significant computational savings while retaining high performance across different sparsity levels:

📈 Achieves up to 20x computational savings with minimal accuracy degradation

⚡ Reuses posterior precision to guide efficient sparsification

⚡ Covers structured & unstructured pruning seamlessly

⚡ Scales well across various architectures and sparsity levels

📄 "Shaving Weights with Occam's Razor: Bayesian Sparsification for Neural Networks using the Marginal Likelihood"

Read it here: arxiv.org/abs/2402.15978

Details in 🧵
