verenablaschke.github.io

👥 Rob van der Goot, Esther Ploeger, @verenablaschke.bsky.social, Tanja Samardžic

🔗 aclanthology.org/2025.emnlp-d...

🎯 A convenient toolkit for obtaining distance measures across languages

▶️ www.youtube.com/watch?v=SSk9...

bsky.app/profile/vere...

- Paper submission: Dec 19

- Commitment for pre-reviewed papers: Jan 2

- Acceptance notifs: Jan 23

- Camera-ready: Feb 3

- Workshop: TBD (Mar 24-29)

Organizers:

Yves Scherrer, Noëmi Aepli, @tosaja.bsky.social, Nikola Ljubešić, Preslav Nakov, @tiedeman.bsky.social, Marcos Zampieri & me

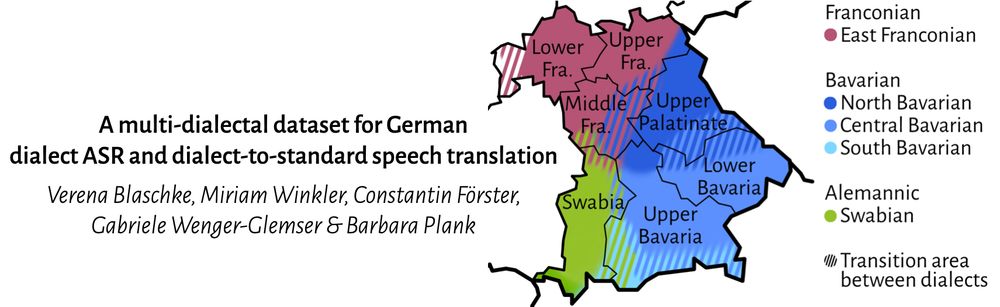

- talk on Mon Aug 18, 15:50–16:10

- preprint: arxiv.org/abs/2506.02894

- suppl. material: github.com/mainlp/betth...

Joint work w/ Miriam Winkler & @barbaraplank.bsky.social from @mainlp.bsky.social, and Constantin Förster & Gabriele Wenger-Glemser from Bayerischer Rundfunk!

See you at the Findings poster reception on Monday July 28 (18:00-19:30) :)

Preprint: arxiv.org/abs/2501.14491
