https://valentin.deschaintre.fr

This is the combination of the work of many people: mentors, collaborators, students, and friends who trusted me and taught me so much along the way!

Given a user click, we propose to select all regions on an object with the same material. We can also do segmentation in under a minute: mfischer-ucl.github.io/sama/

Come to our session on Thursday afternoon!

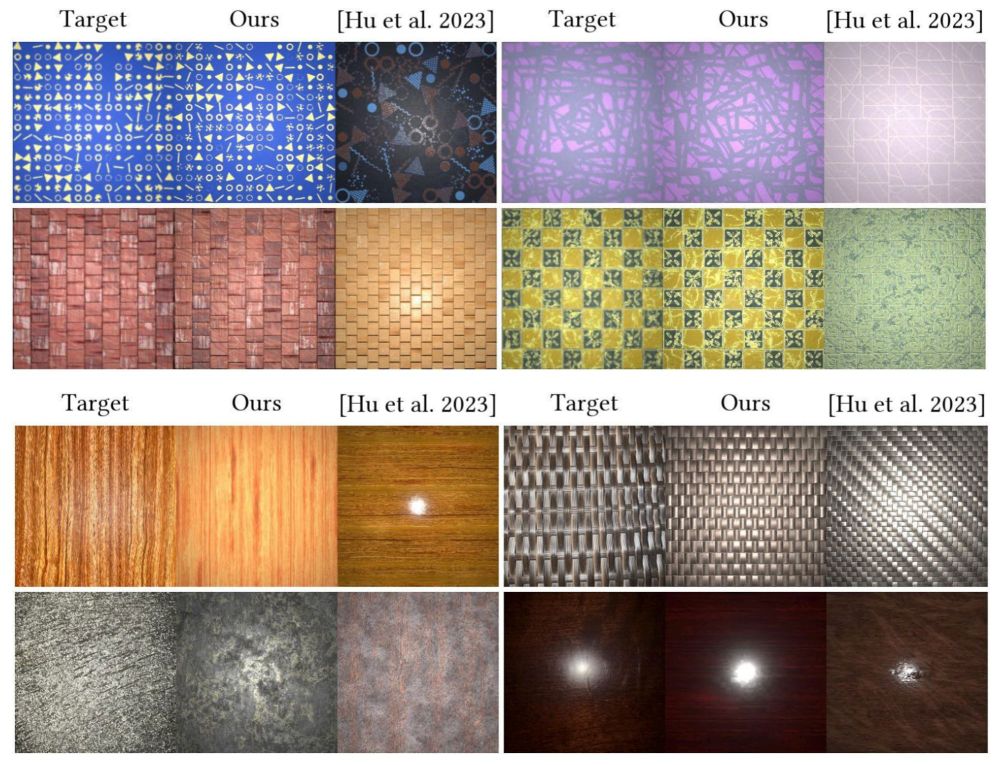

🤖 We propose using reinforcement learning (RL) to improve procedural material parameter generation, avoiding the ground-truth data bottleneck and improving appearance matching!

Open Access: dl.acm.org/doi/10.1145/...

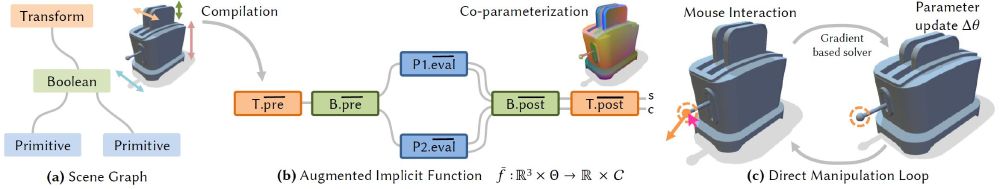

"Direct Manipulation of Procedural Implicit Surfaces" where we explore how to create a WYSIWYG editing interface for procedural representations, avoiding awkward sliders!

eliemichel.github.io/SdfManipulat...

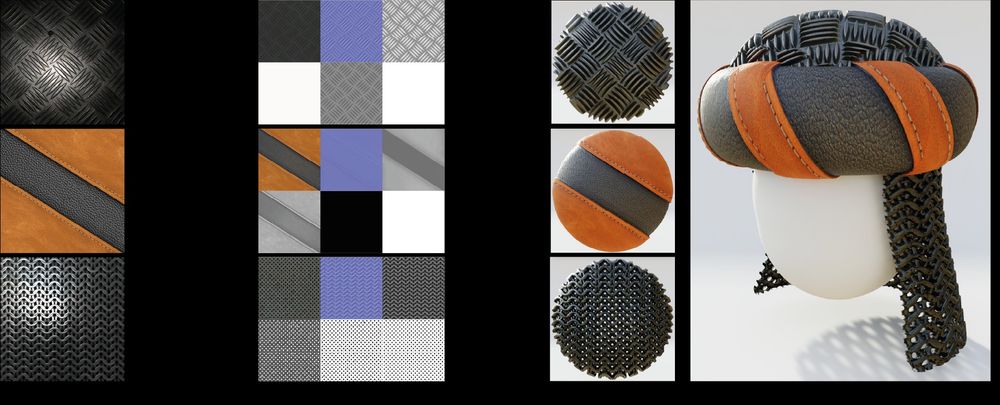

The work is a collaboration with colleagues at Adobe Research and was led by Giuseppe (gvecchio.com). Check him out!

🎓 1/3 ControlMat, a diffusion model for material acquisition.

gvecchio.com/controlmat/

Given an image, we generate a corresponding tileable, high-resolution, relightable material!
