https://philpeople.org/profiles/vincent-carchidi

All opinions entirely my own.

I saw something from Benedict Evans recently where he noticed that the term "AI slop" at some point shifted from its original meaning of "trash output" to "anything automated by an LLM."

It seems like even a barrage of *better* outputs, in areas like recruitment, becomes slop.

The follow-up email I got when I asked about that report I apparently forgot writing was almost certainly generated as well.

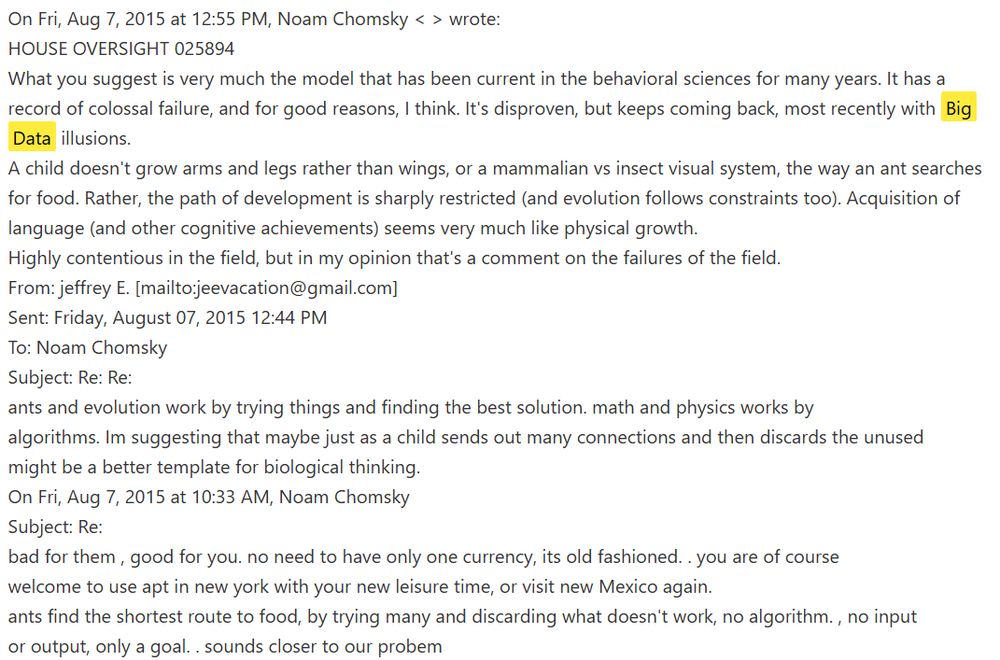

A student sent me this. Verified edu email. Personal info blocked obviously.

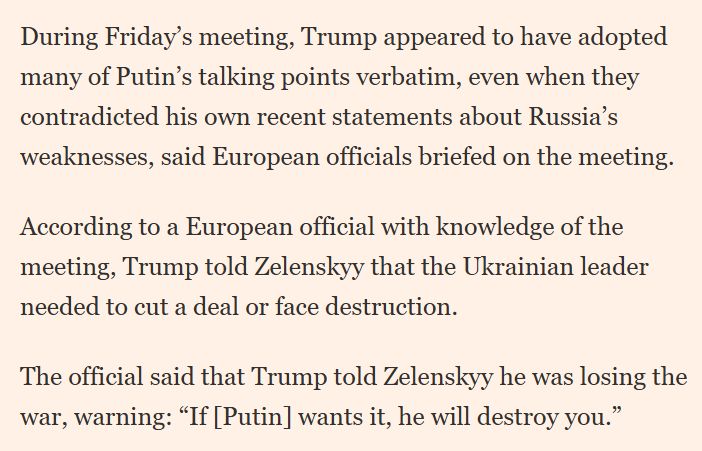

www.nytimes.com/2025/06/11/t...

arxiv.org/abs/2308.03598

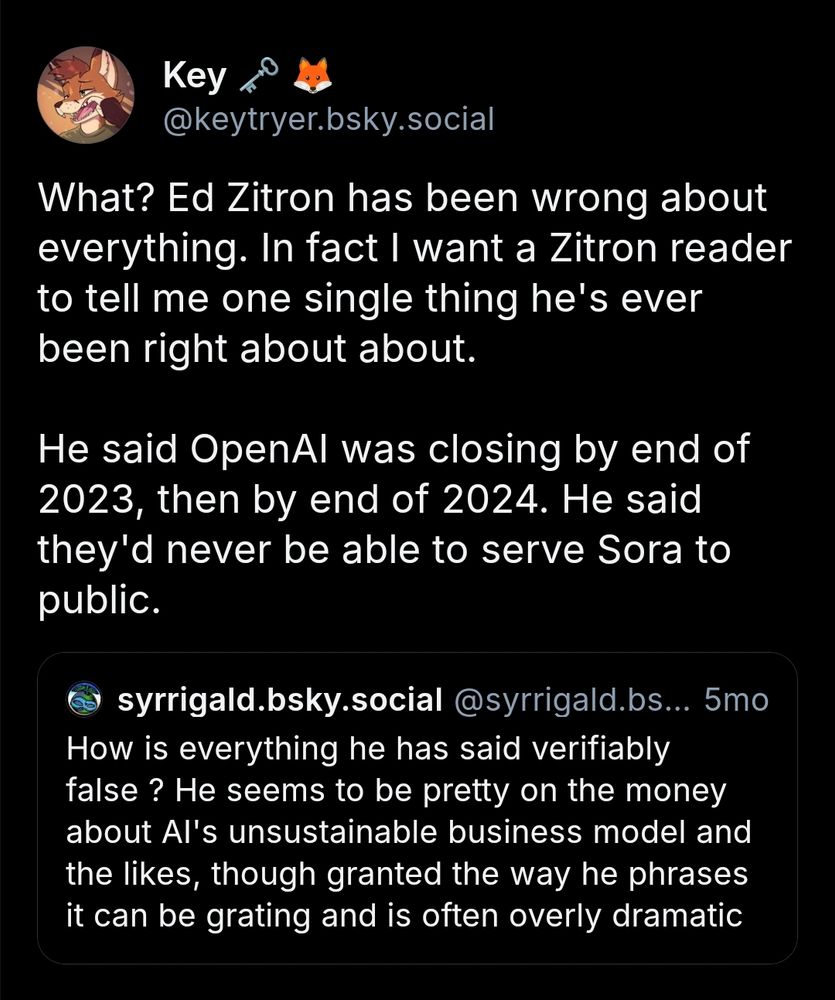

The whole thread about failed Zitron predictions is interesting, and sort of gets back to my earlier post on video generators: I do think, for a lot of people, technological advancement confers zero sense of improved well-being, and its promotion is interpreted as hostile.

www.google.com/books/editio...

And I mean, it might be true, but this does feel a tad...copey
