Details: varworkshop.github.io/challenges/

🚨🚨🚨The winning teams will receive a prize and a contributed talk.

P.S. GPT-4o does not do too well.

🚨The winning teams will receive a prize along with a contributed talk. 🚨

Website: varworkshop.github.io/challenges/

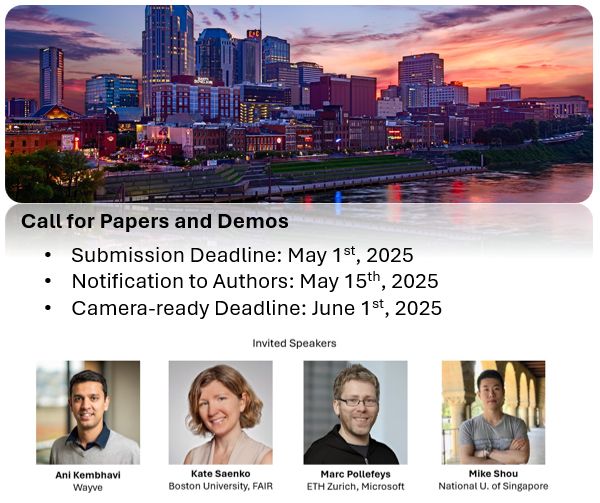

Link: varworkshop.github.io/calls/

Workshop Page: varworkshop.github.io