Join us to work on novel and exciting ideas in machine/deep learning, #agenticAI / #generativeAI, #neurosymbolicAI, and #knowledgegraphs, applied to problems in social, business, and health domains.

1/4 🧵

Say hi to Trilok if you are at ACL in Vienna.

#NeuroSymbolicAI #CyberSocialSafety

#AdvisorProud

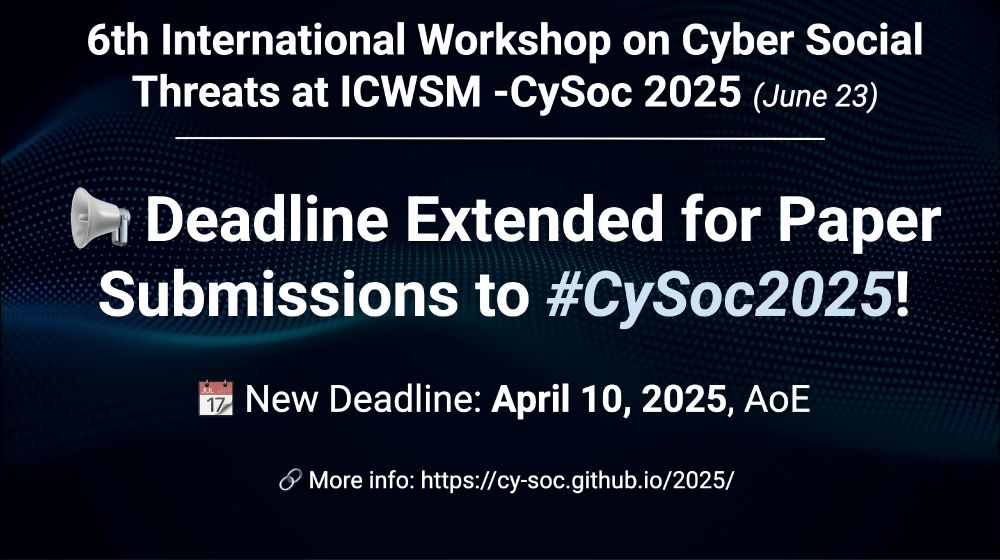

💡Share your research on generative AI, online safety, harms and threats, or political conflict in online platforms, with the leading minds in the field. We’d love to see your submissions.

📆New Deadline: April 10 AoE

📢 and Call for Papers is still open!

📆 Submission Deadline: March 31, 2025

🔗 More: cy-soc.github.io/2025/

For more: 🧵 1/6 👇

We’re excited to announce the 6th International Workshop on Cyber Social Threats #CySoc2025 that will be held at #ICWSM2025 in Denmark! 🎉

🌍 Spotlight Topic: "Political Conflicts in Online Platforms in the Era of Gen-AI."

📅 Submission Deadline: March 31, 2025

📄 Read more in our preprint: arxiv.org/pdf/2411.12174

"Just KIDDIN’: Knowledge Infusion and Distillation for Detection of INdecent Memes"