🌐 www.kiinformatik.mri.tum.de/en/chair-artificial-intelligence-healthcare-and-medicine

Paper: www.nature.com/articles/s41...

Thank you both for sharing your work; you're always welcome!

Paper: link.springer.com/chapter/10.1...

🧑‍💻 @zilleralex.bsky.social further explored the nuanced trade-offs between privacy guarantees and model accuracy: www.nature.com/articles/s42....

Paper: www.nature.com/articles/s42...

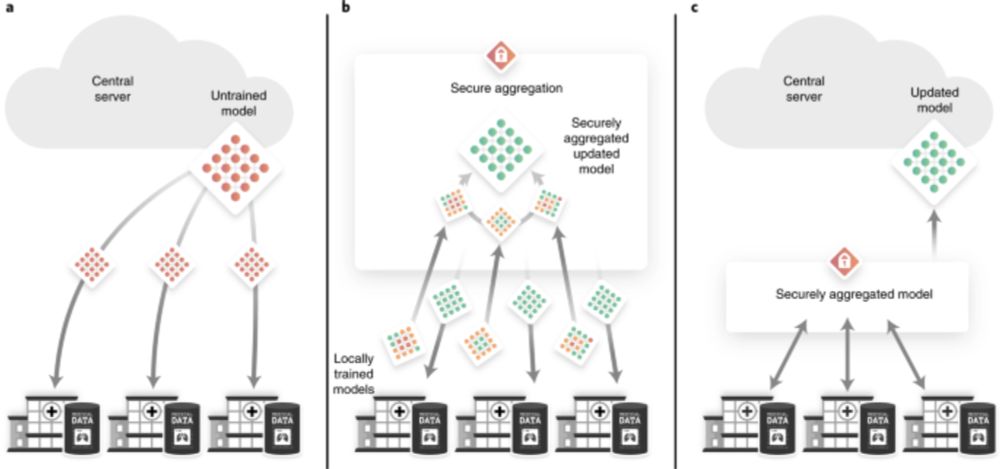

How do we train powerful AI models on sensitive medical data from multiple hospitals while maintaining patient privacy and data locality?
