Thousand Brains Project

@thousandbrains.org

Advancing AI & robotics by reverse engineering the neocortex.

Leveraging sensorimotor learning, structured reference frames, & cortical modularity.

Open-source research backed by Jeff Hawkins & Gates Foundation.

Explore thousandbrains.org

💡 Want to help shape our next community event?

It only takes 30 seconds. Share your thoughts here:

👉 forms.gle/jDM4j4Non5w4...

October 31, 2025 at 2:08 PM

✨ @vivianeclay.bsky.social gives a summary of today’s research meeting where we discuss a “Proposal for How Columns Learn Sequences and Behaviors”

Watch the full video here: youtu.be/01U-ZXEjEsc

October 24, 2025 at 7:09 PM

✨ @cortical-canonical.bsky.social gives a summary of today’s research meeting where we discuss “Modeling and Evaluating Compositional Object Representations.”

Watch the full video here: youtu.be/boAYuEy7A9o

October 23, 2025 at 8:06 PM

4/

Jeff Hawkins presents on complex goal-oriented behaviours and defines a language to talk about the fundamental building blocks of robotics.

August 12, 2025 at 9:05 PM

3/

@canoetrip.bsky.social discusses how model-free and model-based policies can come together and how that might be represented in subcortical approximations in Monty using salience maps.

August 12, 2025 at 9:05 PM

2/

@ramymounir.com presents his work on hypothesis resampling so that Monty can quickly and accurately deal with object changes in a scene.

August 12, 2025 at 9:05 PM

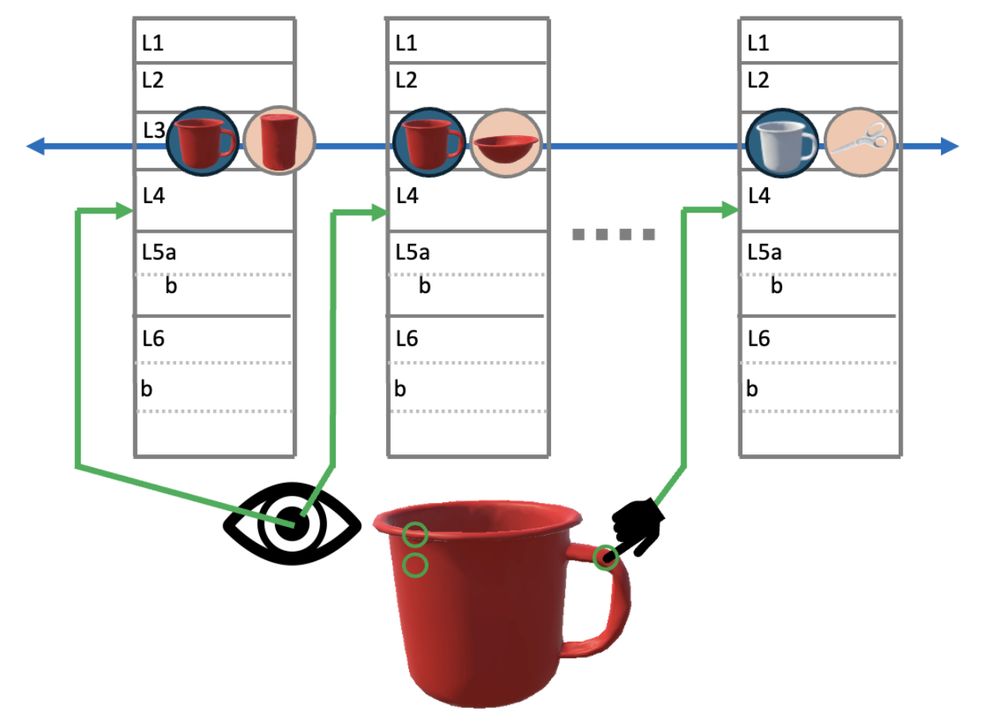

9/

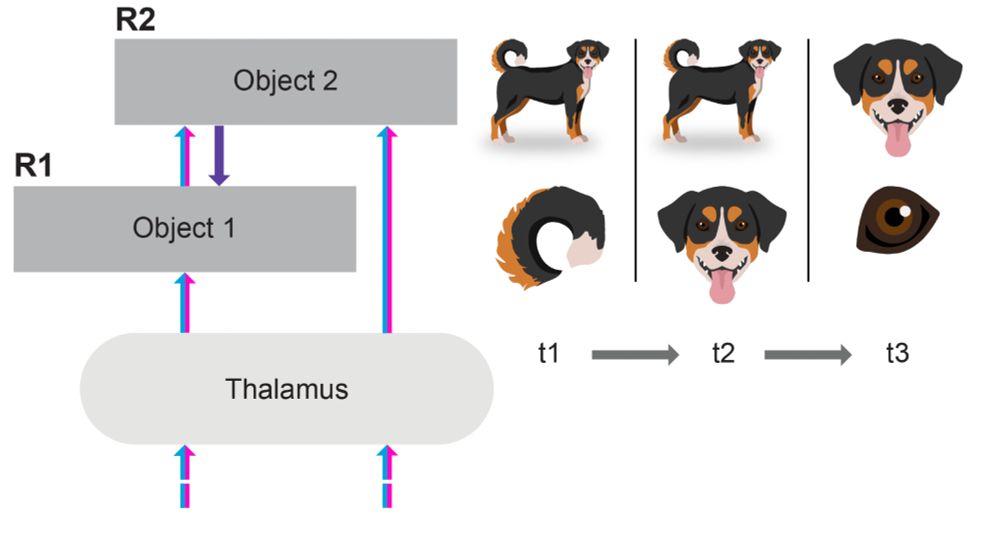

Hierarchical connections are used to learn compositional models.

A new mug with a known logo means that you don’t relearn either one. Your brain composes: “mug” + “logo.” Columns at different levels but with overlapping receptive fields link representations spatially.

July 11, 2025 at 6:35 PM

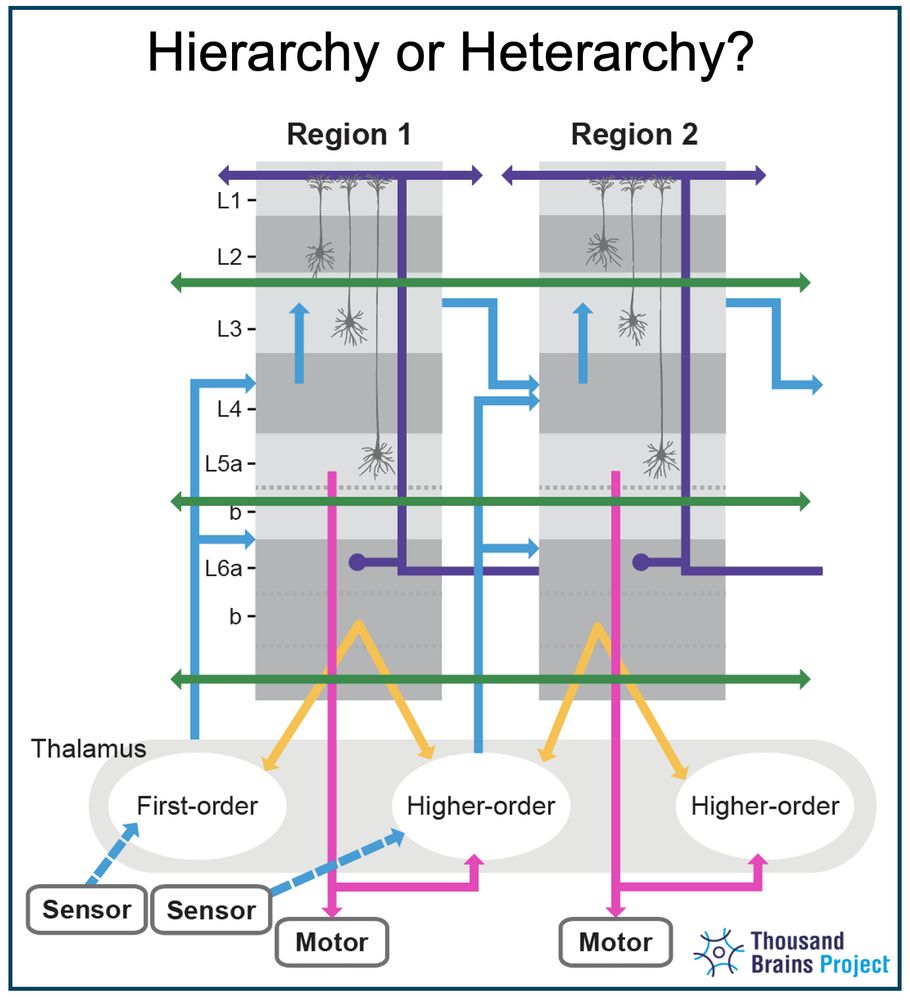

7/

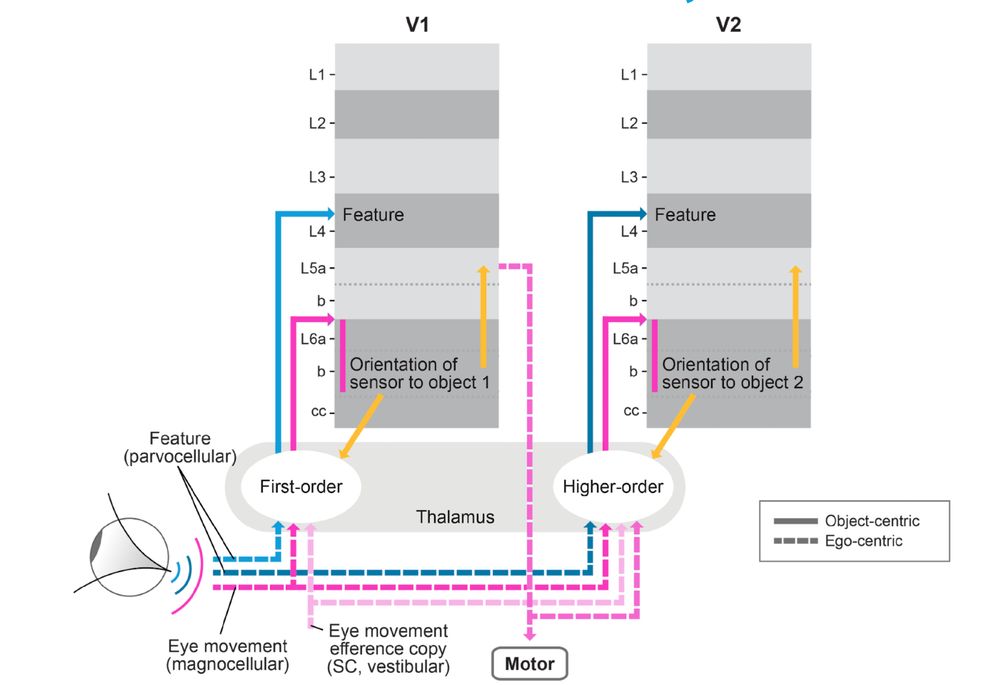

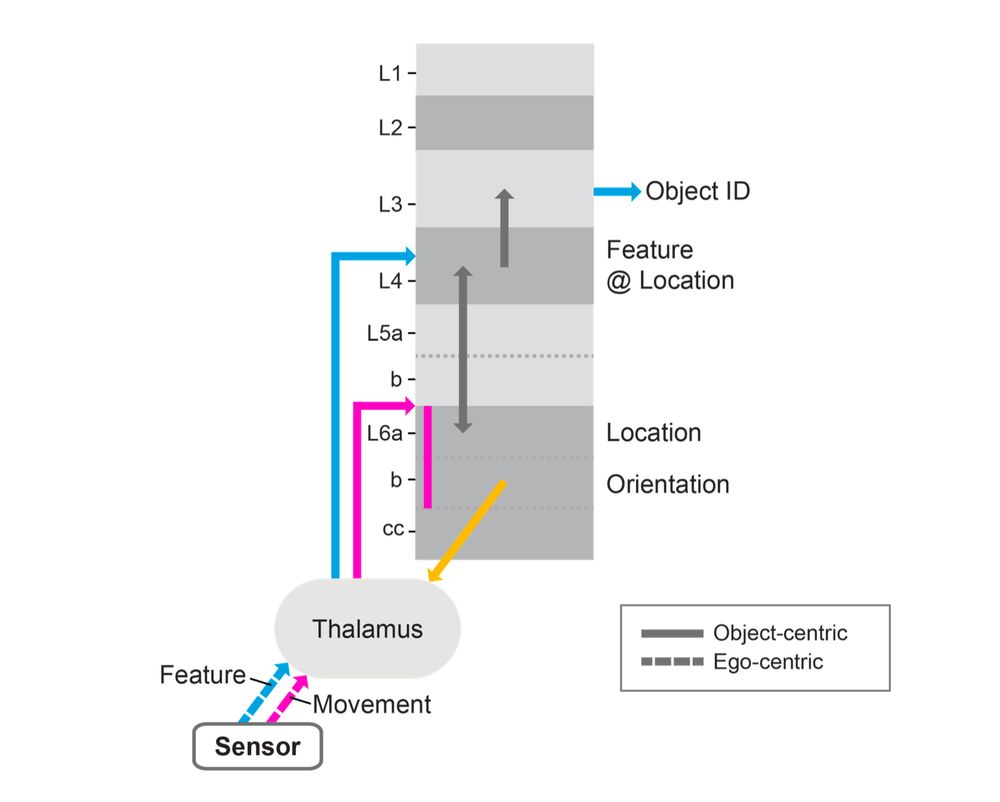

Neocortical columns don’t think in egocentric space, “left of hand.” They think in object-centric space, “on the handle.” The thalamus helps do this translation. This is a key new proposal about the role of the thalamus.

July 11, 2025 at 6:35 PM
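
To make the translation in post 7/ concrete, here is a minimal 2D sketch. It assumes the object's position and orientation in the body frame are already known. The helper name `to_object_frame` and all numbers are illustrative assumptions; none of this is taken from the paper or from Monty's code.

```python
import math

def to_object_frame(point_body, object_origin_body, object_angle_rad):
    """Express a body-centric (egocentric) 2D point in the object's own frame.

    Hypothetical helper: subtract the object's origin, then undo its rotation.
    """
    dx = point_body[0] - object_origin_body[0]
    dy = point_body[1] - object_origin_body[1]
    cos_a = math.cos(object_angle_rad)
    sin_a = math.sin(object_angle_rad)
    # Apply the inverse rotation (transpose of the 2D rotation matrix).
    return (cos_a * dx + sin_a * dy, -sin_a * dx + cos_a * dy)

# The same spot on a mug, touched while the mug sits in two different poses.
touch_a = to_object_frame((3.0, 1.0), (2.0, 1.0), 0.0)
touch_b = to_object_frame((5.0, 6.0), (5.0, 5.0), math.pi / 2)
```

The two egocentric points differ, but both map to approximately the same object-centric location, which is the property the post describes as “on the handle” rather than “left of hand.”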

6/

The Thousand Brains Theory already outlined several key proposals:

Each column builds its own model.

They vote.

They form consensus.

No need to wait for a final decision at the top.

July 11, 2025 at 6:35 PM

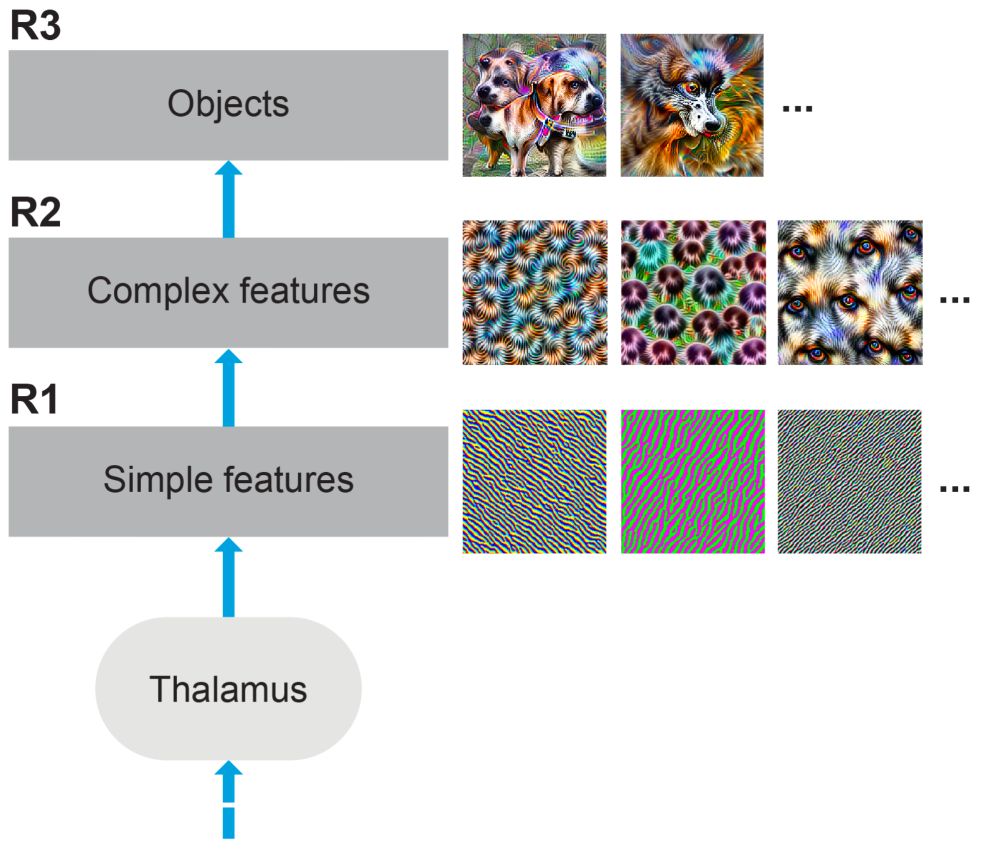

4/

Our new view recasts cortical hierarchy as composition, not feature extraction. Hierarchy is used to combine known parts into new wholes.

July 11, 2025 at 6:35 PM

3/

Classically, the neocortex is viewed as a hierarchy: low levels detect edges, high levels recognize objects. But that model is incomplete. Several other important connections, which most people overlook, are crucial for sensorimotor intelligence.

July 11, 2025 at 6:35 PM

1/

🚨Another New Paper Drop! 🚨 “Hierarchy or Heterarchy? A Theory of Long-Range Connections for the Sensorimotor Brain”

👇 Dive into the full thread 🧵

arxiv.org/abs/2507.05888

July 11, 2025 at 6:35 PM

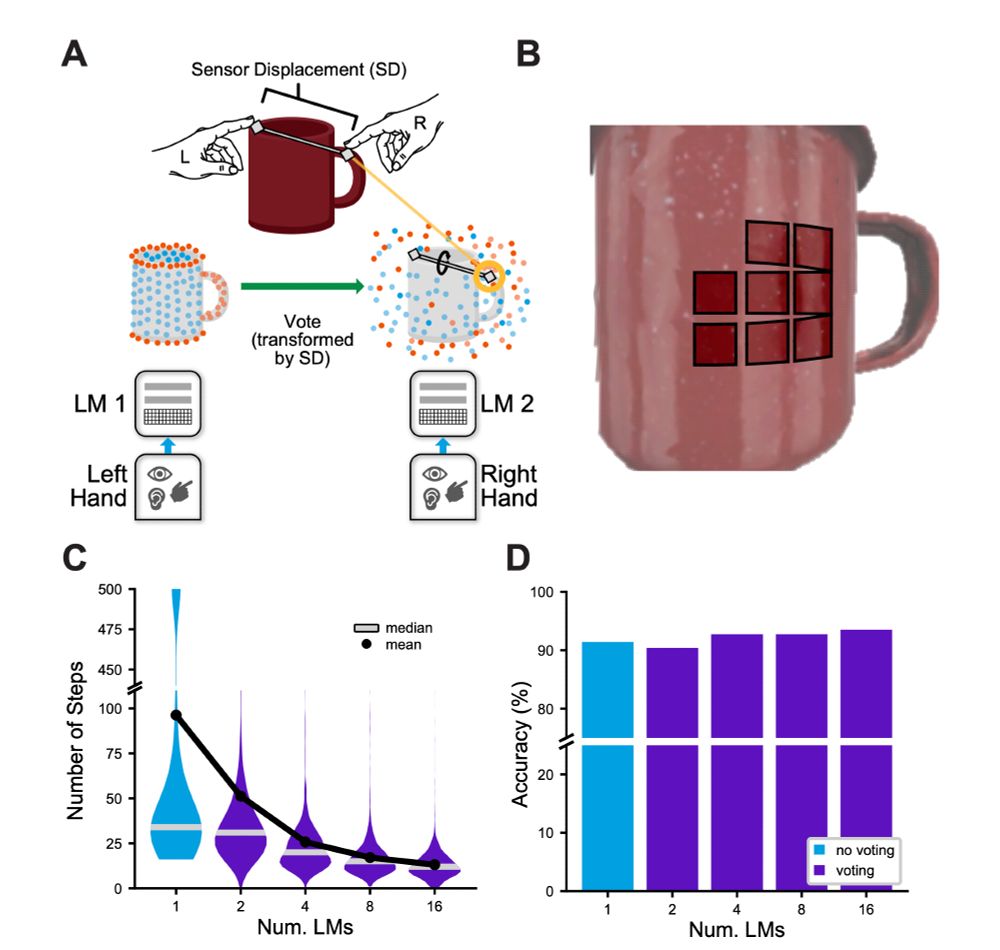

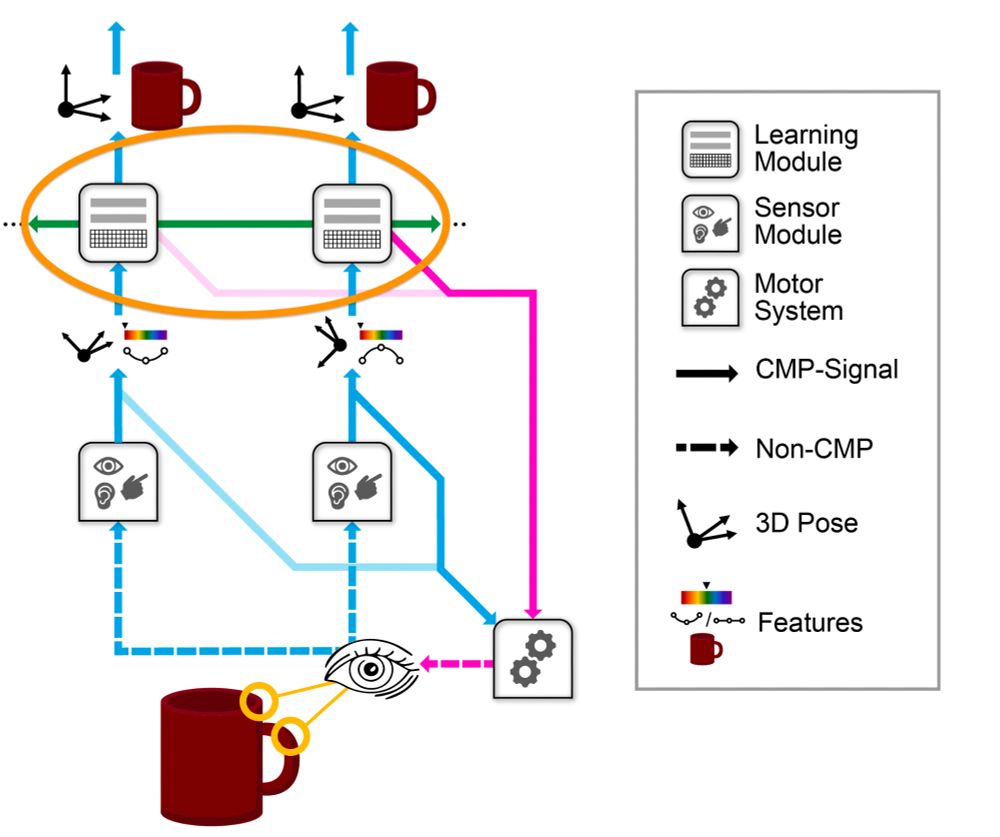

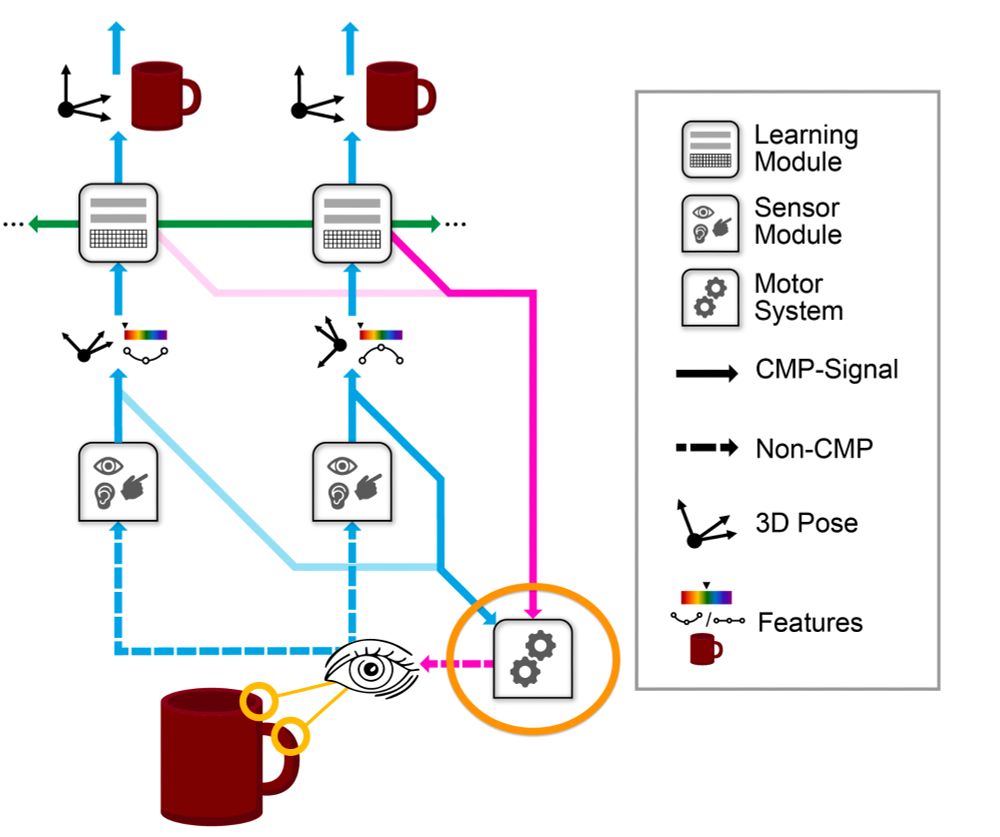

/14

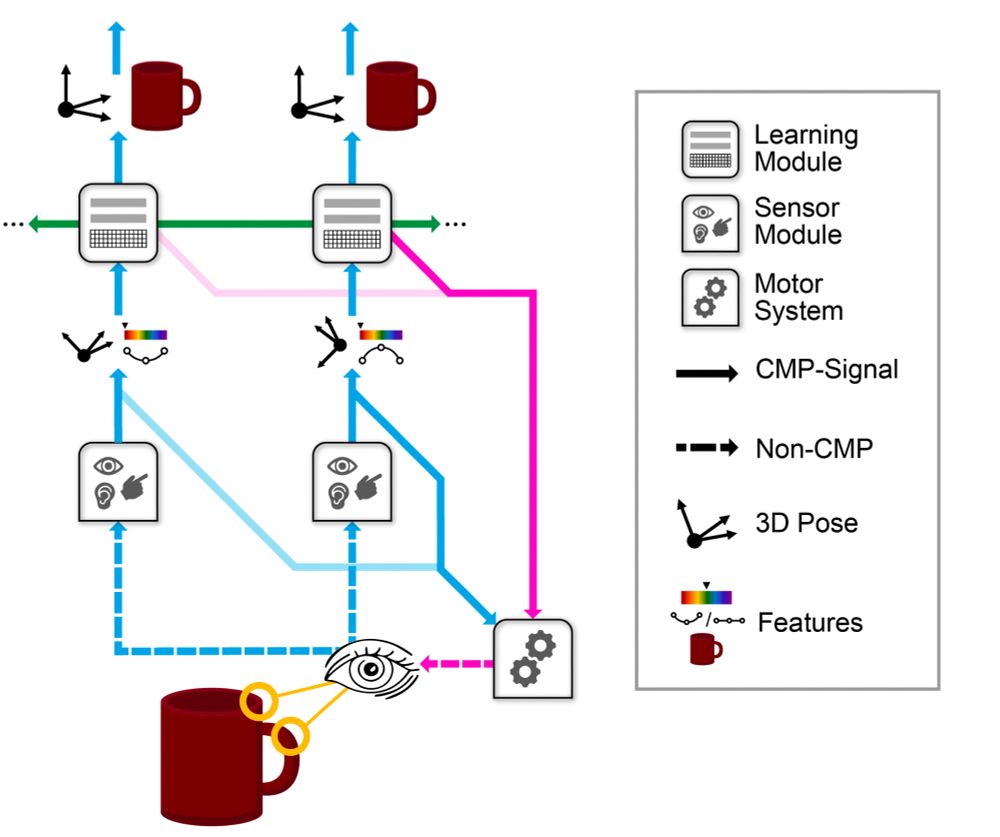

Voting across modules

Multiple learning modules share hypotheses; consensus arrives >2× faster without losing accuracy. Imagine two eyes, two fingers, or a sensor grid collaborating in real time.

July 9, 2025 at 9:02 PM
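
As a rough illustration of the voting idea in post /14, the sketch below pools evidence from several modules and picks the best-supported hypothesis. The flat-sum rule, the function name `vote`, and the example scores are assumptions made for illustration; the paper's actual voting mechanism may differ.

```python
from collections import defaultdict

def vote(module_evidence):
    """Sum per-hypothesis evidence across modules and return the consensus.

    `module_evidence` is a list of dicts mapping an (object, pose) hypothesis
    to an evidence score. Summing scores is an illustrative assumption.
    """
    totals = defaultdict(float)
    for evidence in module_evidence:
        for hypothesis, score in evidence.items():
            totals[hypothesis] += score
    best = max(totals, key=totals.get)
    return best, dict(totals)

# Two modules (say, two fingers) that are each ambiguous on their own.
finger_1 = {("mug", "upright"): 0.5, ("bowl", "upright"): 0.5}
finger_2 = {("mug", "upright"): 0.6, ("can", "upright"): 0.4}
consensus, totals = vote([finger_1, finger_2])
```

Here the first module alone cannot separate “mug” from “bowl,” but the pooled evidence favors the mug after a single exchange, with no need for further sensations.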

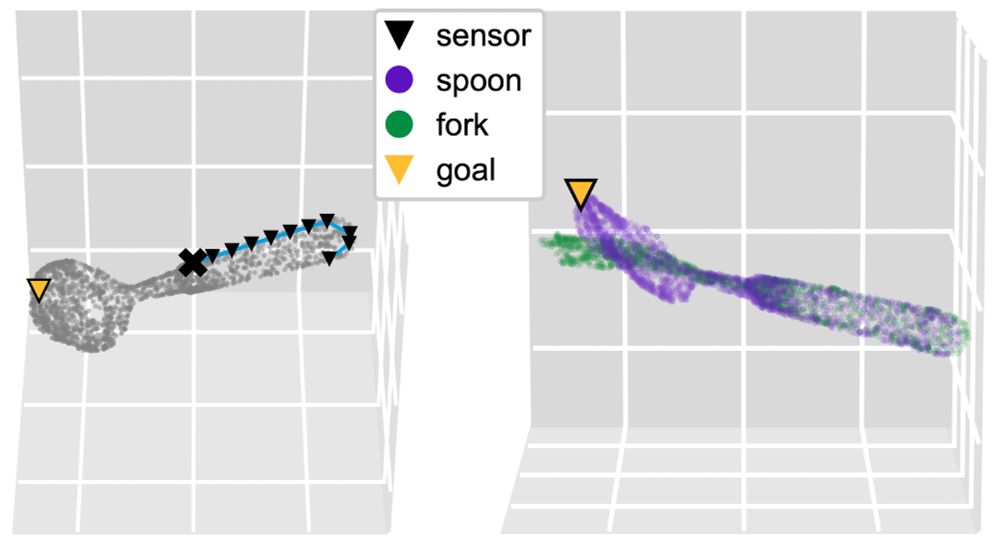

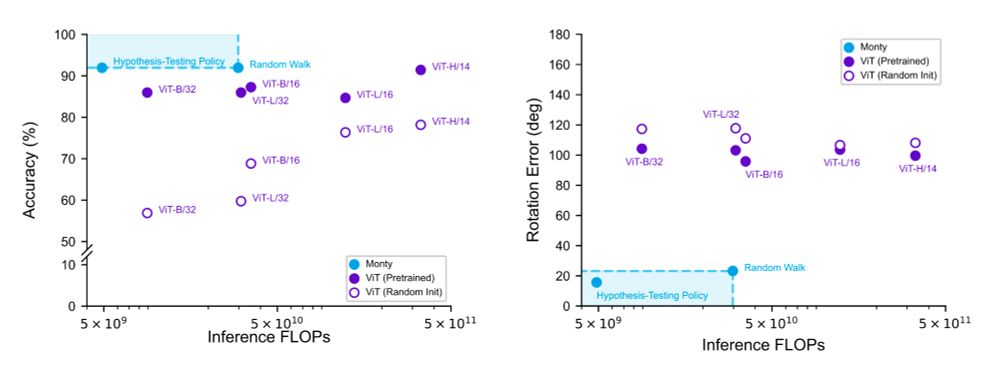

/13

🏃‍♀️ Movement matters

A simple curvature-following policy + a hypothesis-testing policy cut inference steps ~3× vs. random walks. Monty can leverage its learned models to perform principled movements to resolve uncertainty.

July 9, 2025 at 9:02 PM
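
One way to picture a hypothesis-testing policy like the one in post /13 is to move to a location where the leading candidate models disagree. The sketch below is a toy version under that assumption; the function name `most_informative_location` and the dictionary-based models are hypothetical and are not Monty's policy implementation.

```python
def most_informative_location(model_a, model_b):
    """Return a location where two candidate object models predict different features.

    Each model is a dict mapping an object-relative location to an expected
    feature. Returns None if the models agree everywhere they overlap.
    """
    shared = sorted(set(model_a) & set(model_b))
    for location in shared:
        if model_a[location] != model_b[location]:
            return location
    return None

# Two learned models that differ only where the mug has a handle.
mug = {(0, 0): "curved", (1, 0): "handle", (0, 1): "rim"}
cup = {(0, 0): "curved", (1, 0): "curved", (0, 1): "rim"}
next_target = most_informative_location(mug, cup)
```

Sensing at `next_target` separates the two hypotheses in one step, whereas a random walk might keep sampling locations where both models predict the same feature.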

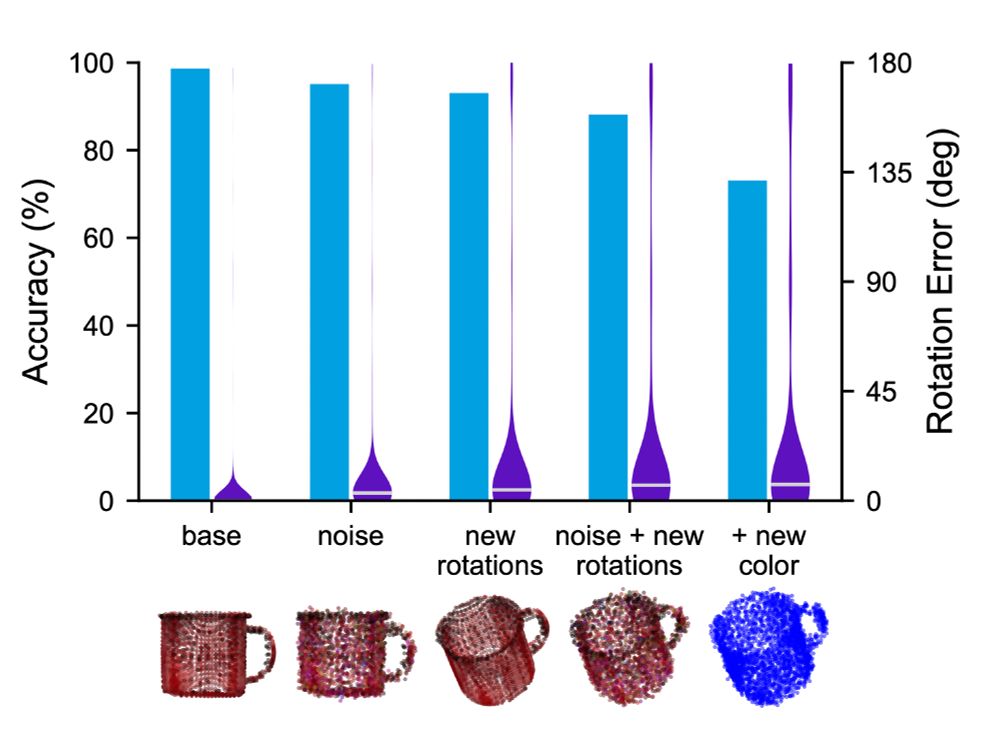

/12

Robust inference and generalization

Monty recognized all 77 YCB objects and their pose with 90+% accuracy, even with noise and novel rotations. Even showing the object in a never-before-seen color doesn’t faze Monty.

July 9, 2025 at 9:02 PM

/11

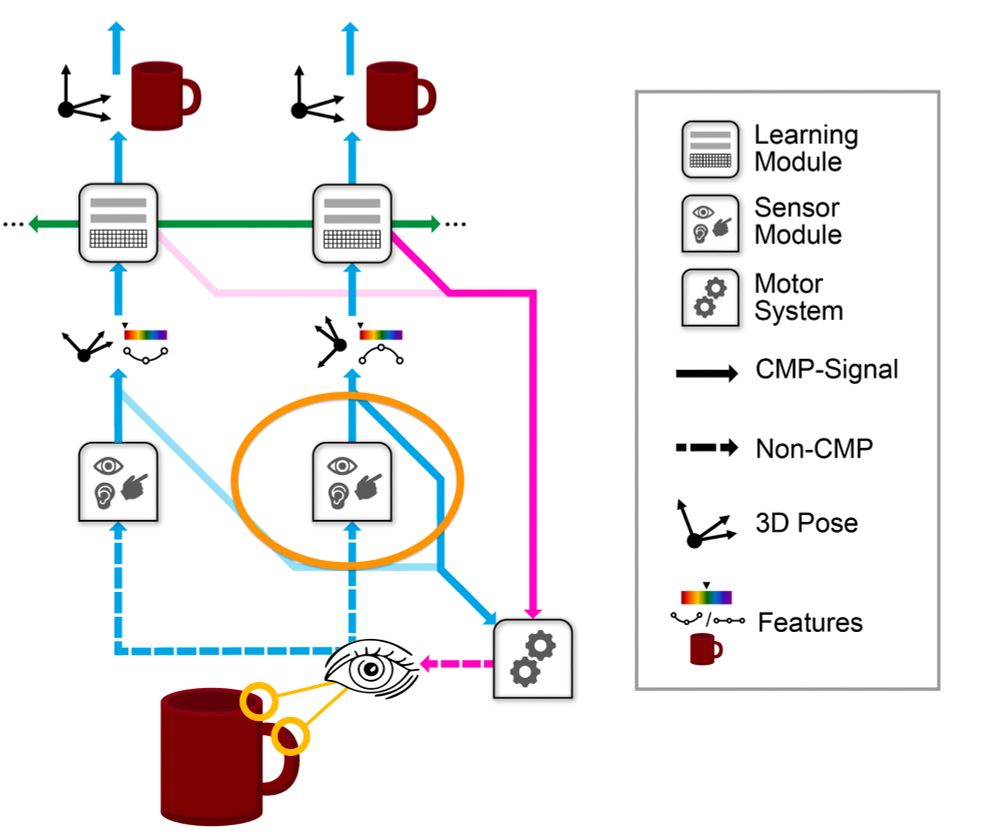

Learning modules can quickly reach consensus through voting about their most likely object and pose hypotheses, rather than having to integrate over time with multiple sensations.

July 9, 2025 at 9:02 PM

/10

Motor commands from all the learning modules tell the system where it should observe next, causing a subsequent movement to observe that location.

July 9, 2025 at 9:02 PM

/9

Learning Modules: a semi-independent modelling system that builds models of objects by integrating sensed observations with object-relative coordinates derived from the body-centric sensor locations.

July 9, 2025 at 9:02 PM
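
The description in post /9 can be pictured with a toy class that stores sensed features at object-relative locations. This is a deliberately simplified sketch: `ToyLearningModule` is a hypothetical name, the first sensation is assumed to anchor the object's reference frame, and object rotation is ignored. It is not Monty's learning module.

```python
class ToyLearningModule:
    """Hypothetical stand-in for a learning module; not Monty's actual class."""

    def __init__(self):
        self.origin = None  # body-centric location of the first observation
        self.model = {}     # object-relative location -> sensed feature

    def observe(self, sensor_location_body, feature):
        # Assumption: the first sensation defines the object's origin.
        if self.origin is None:
            self.origin = sensor_location_body
        relative = tuple(
            s - o for s, o in zip(sensor_location_body, self.origin)
        )
        self.model[relative] = feature
        return relative

lm = ToyLearningModule()
lm.observe((10, 4, 2), "flat")
lm.observe((10, 5, 2), "edge")
lm.observe((11, 5, 2), "curved")
```

After three sensations, `lm.model` holds features keyed by locations relative to the object rather than to the body, so the same model would apply wherever the object sits.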

/8

This new architecture comprises the following subsystems, which communicate using the Cortical Messaging Protocol (CMP):

Sensor Modules: observe a small patch of the world and send it to the learning module.

July 9, 2025 at 9:02 PM

/7

This is a new type of machine learning architecture based on principles derived from 20+ years of research into the neocortex.

July 9, 2025 at 9:02 PM

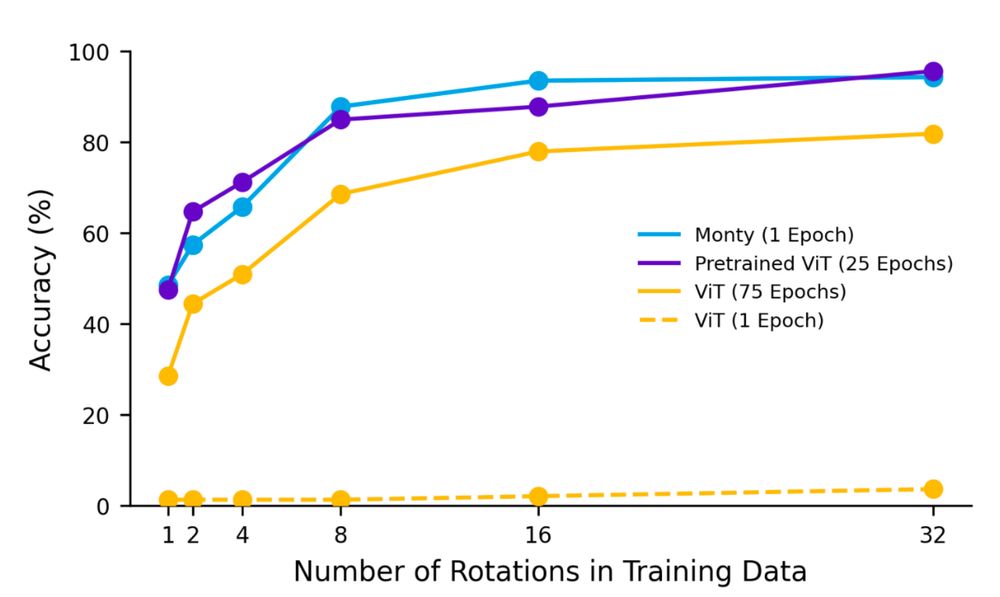

/6

Few-shot learning

After just 8 views per object, Monty hits ~90% accuracy.

July 9, 2025 at 9:02 PM

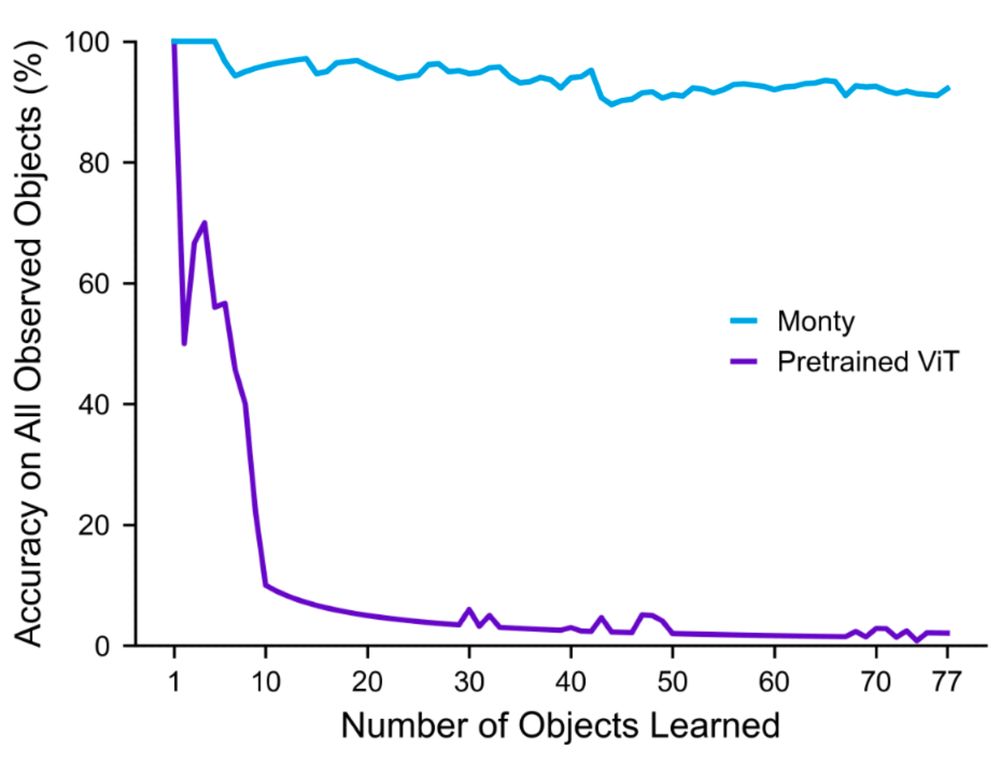

/5

Monty learns continually with virtually no loss of accuracy as new objects are added to the model. Deep learning famously suffers from catastrophic forgetting in those settings.

July 9, 2025 at 9:02 PM

/4

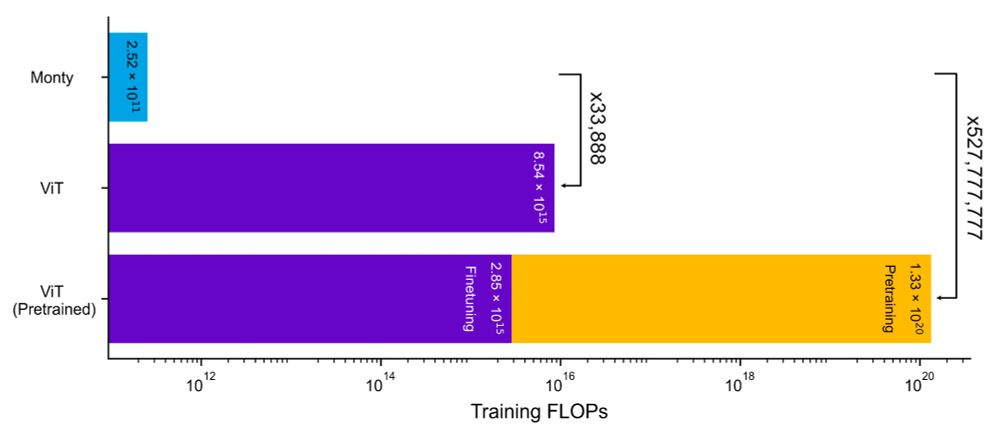

Green AI is here: Monty slashes compute needs in training and inference, yet beats a pretrained, fine-tuned ViT on object and pose tasks. It used 33,888× fewer FLOPs than a ViT trained from scratch and 527,000,000× fewer than a pretrained ViT.

July 9, 2025 at 9:02 PM

3/

A remarkable result: Monty achieves the same accuracy as a Vision Transformer while using 527 million times less computation, and it does so without suffering from catastrophic forgetting.

July 9, 2025 at 9:02 PM

/1

🚨 New Paper Drop 🚨

We’ve released “Thousand-Brains Systems: Sensorimotor Intelligence for Rapid, Robust Learning & Inference” — the first working implementation of a thousand-brains system, code-named Monty.

👇 Dive into the full thread 🧵

arxiv.org/abs/2507.04494

July 9, 2025 at 9:02 PM
