Thomas Davidson

@thomasdavidson.bsky.social

Sociologist at Rutgers. Studies far-right politics, populism, and hate speech. Computational social science.

https://www.thomasrdavidson.com/

Big professional news!

September 22, 2025 at 8:19 PM

Analysis of the reasoning traces for Gemini 2.5 shows that the model identifies second-order factors when faced with these decisions, helping to address common false positives like flagging reclaimed slurs as hate speech (Warning: offensive language in example)

September 9, 2025 at 4:00 PM

On a content moderation task, humans take longer and LRMs use more tokens when offensiveness is identical or fixed.

This suggests that LRM behavior is consistent with dual process theories of cognition, as the models expend more reasoning effort when simple heuristics are insufficient

September 9, 2025 at 4:00 PM

The results are consistent across three frontier LRMs: o3, Gemini 2.5 Pro, and Grok 4

September 9, 2025 at 4:00 PM

New pre-print on large reasoning models 🤖🧠

To what extent does LRM behavior resemble human reasoning processes?

I find that LRM reasoning effort predicts human decision time on a pairwise comparison task, and both humans and LRMs require more time/effort on challenging tasks

September 9, 2025 at 4:00 PM

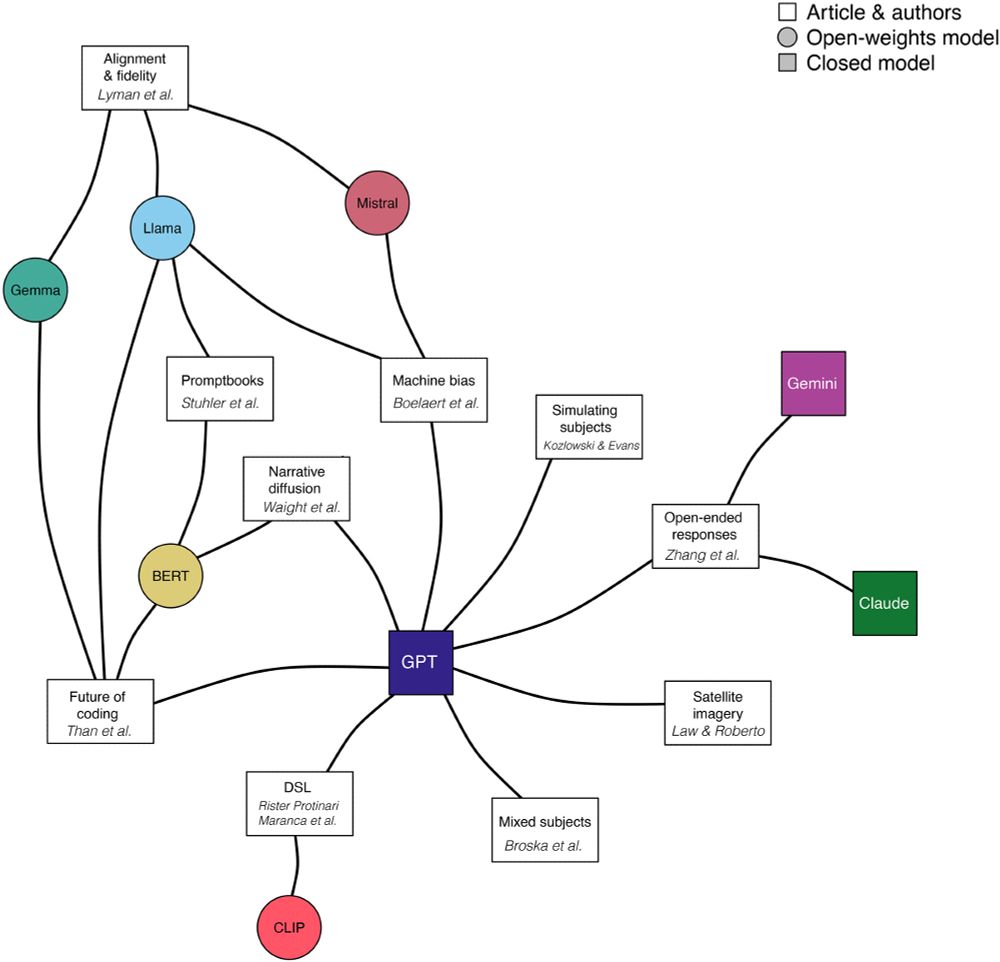

If you want to learn more about these articles, check out our editors’ introduction, where we provide an overview and discuss some central themes.

One point we emphasize is how the model ecosystem has matured & open-weight models are viable for many problems journals.sagepub.com/doi/10.1177/...

August 1, 2025 at 2:54 PM

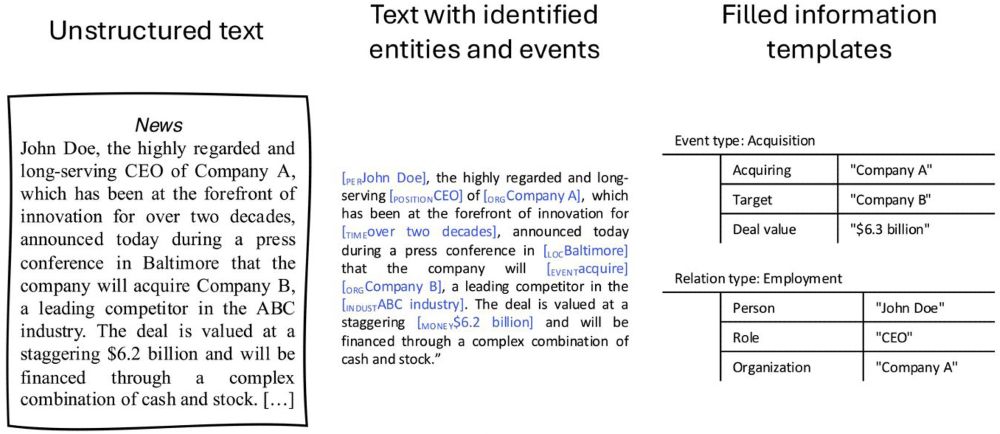

Stuhler, Ton, and Ollion show how LLMs enable more complex information extraction tasks that can be applied to text corpora, with an application to the study of obituaries journals.sagepub.com/doi/abs/10.1...

August 1, 2025 at 2:54 PM

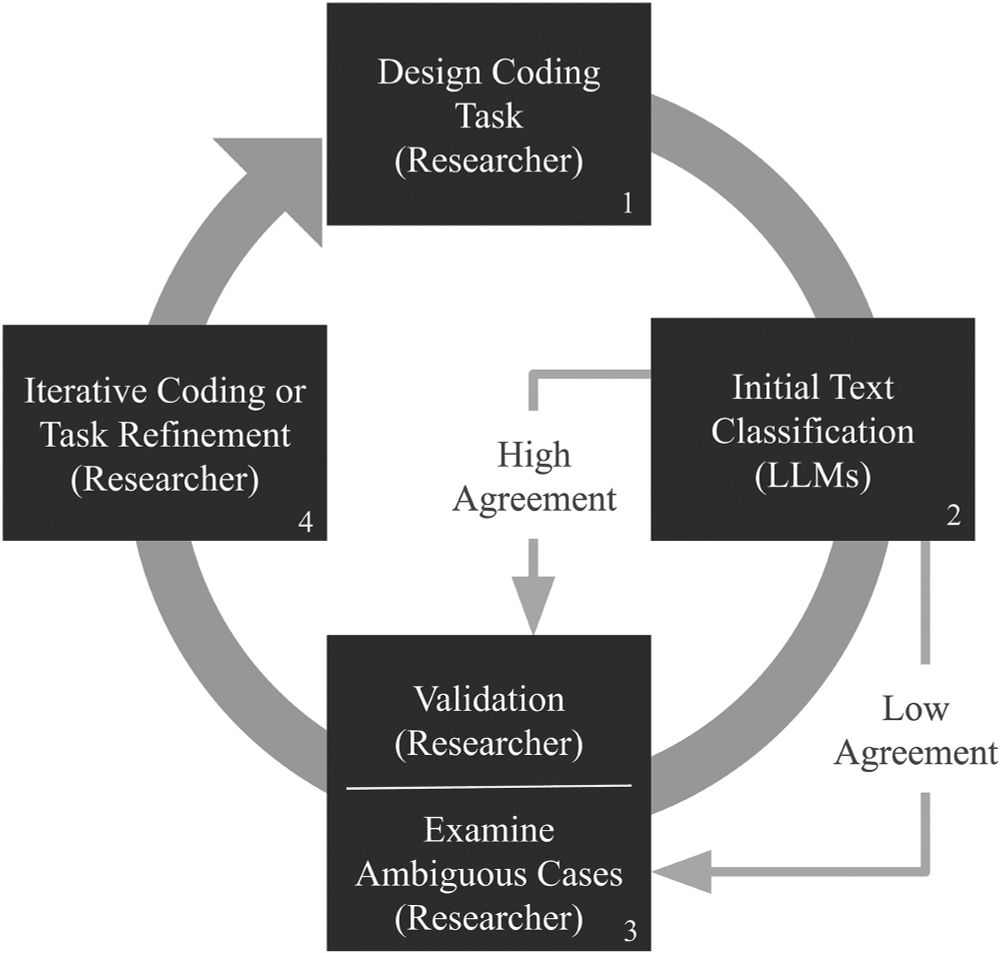

A study by Than et al. revisits an earlier SMR paper, exploring how LLMs can be used for qualitative coding tasks, providing detailed guidance on how to approach the problem journals.sagepub.com/doi/full/10....

August 1, 2025 at 2:54 PM

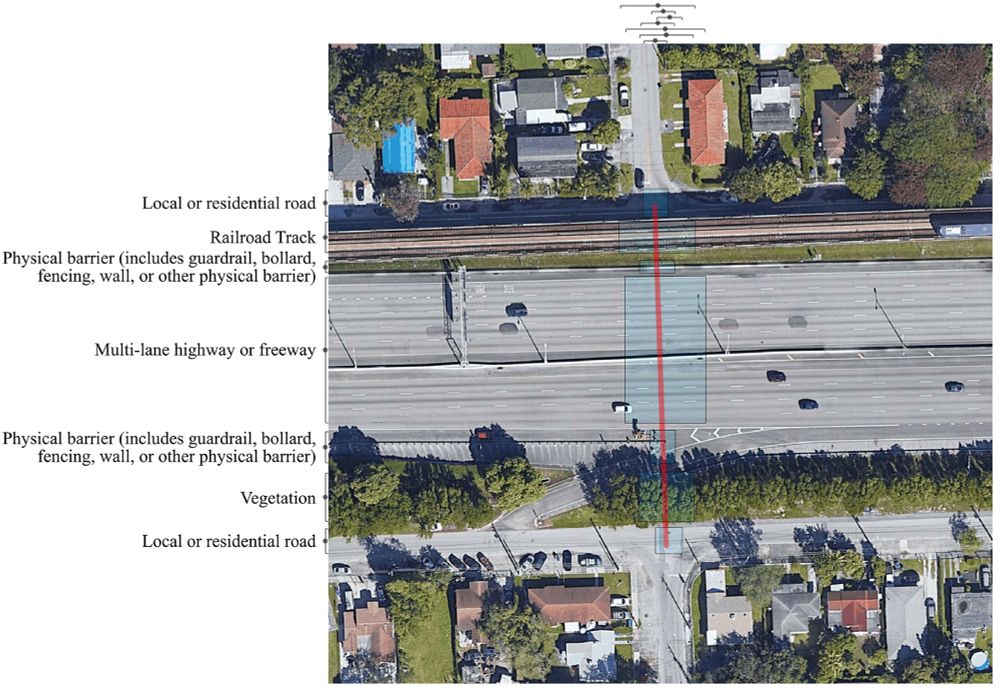

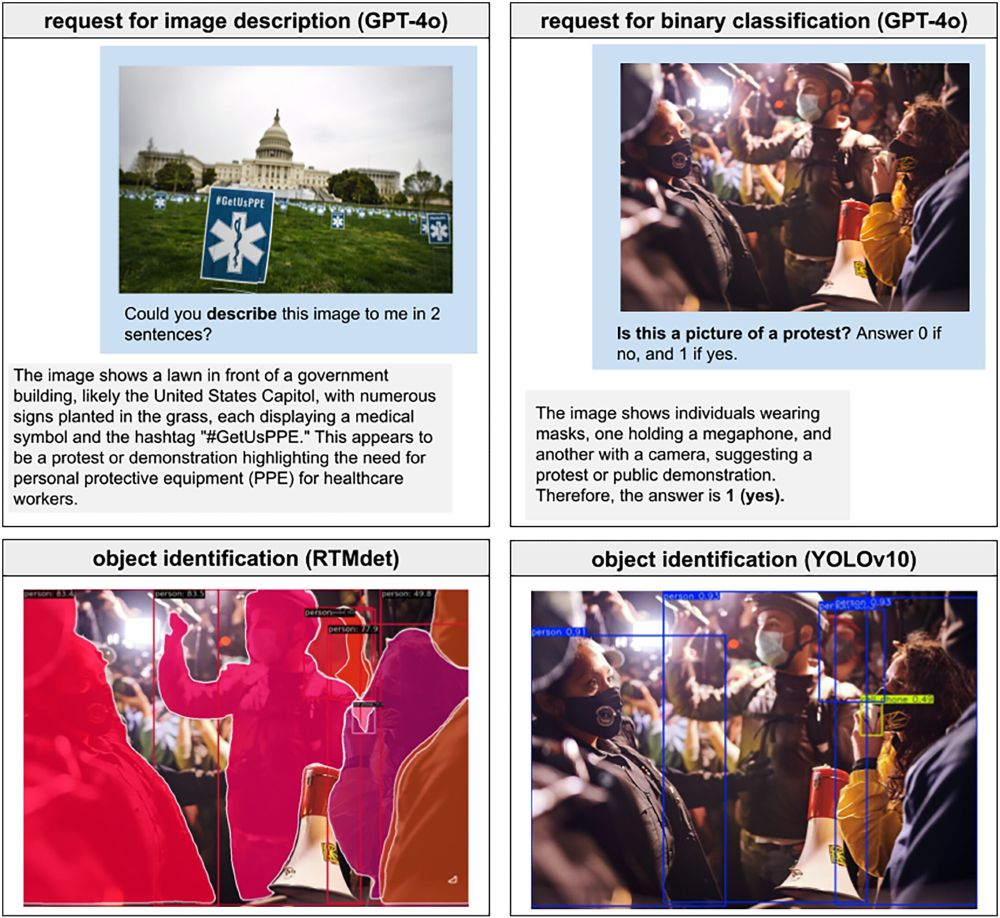

Law and Roberto showcase how vision language models like GPT-4o can extract information from satellite images, applying these techniques to study segregation in the built environment journals.sagepub.com/doi/full/10....

August 1, 2025 at 2:54 PM

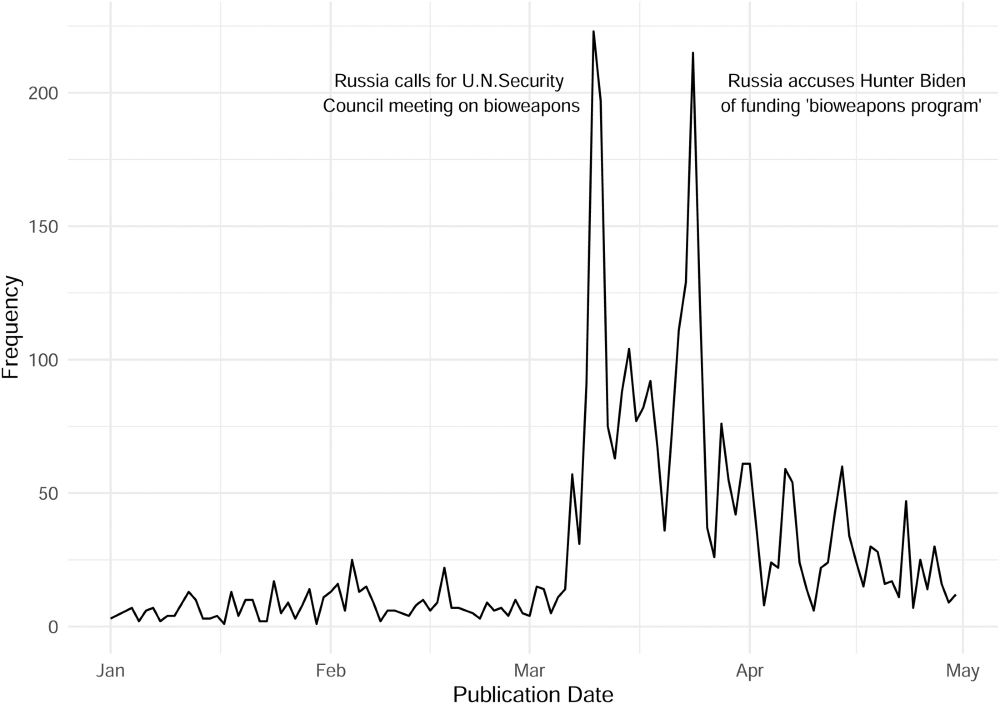

Waight and colleagues develop a pipeline for quantifying narrative similarity, combining the latest frontier LLMs with earlier techniques to study Russian influence campaigns journals.sagepub.com/doi/abs/10.1...

August 1, 2025 at 2:54 PM

Maranca and colleagues show the importance of statistical corrections when using predictions from multimodal LLMs and other computer vision models for downstream tasks, providing several applications journals.sagepub.com/doi/abs/10.1...

August 1, 2025 at 2:54 PM

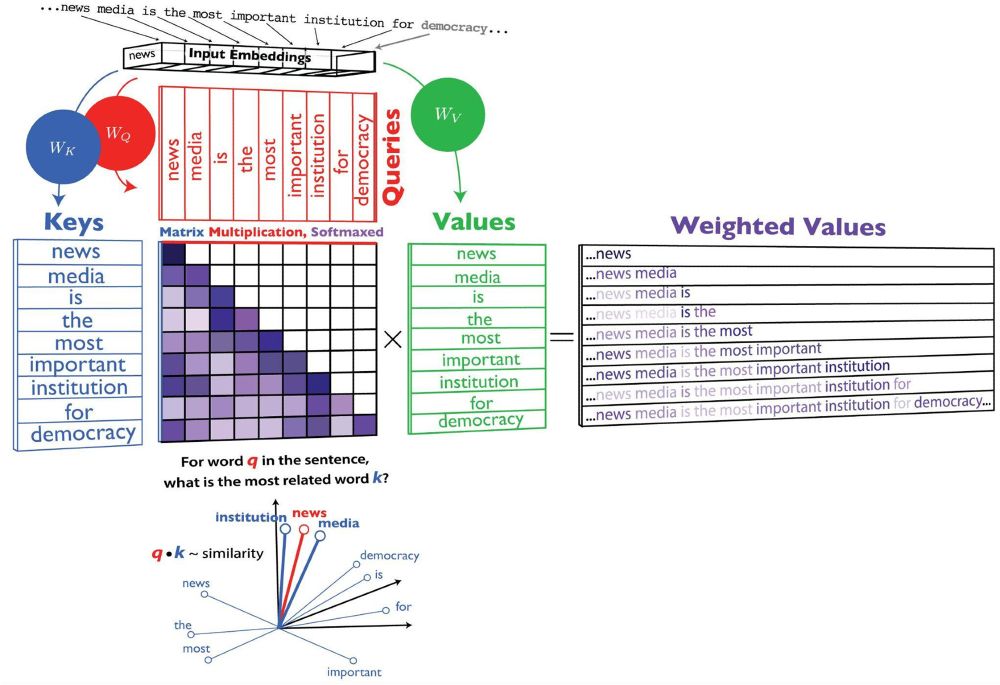

Kozlowski and Evans synthesize work using generative AI for simulation, giving us an in-depth view into how AI models work and how scholars are addressing six challenging issues journals.sagepub.com/doi/abs/10.1...

August 1, 2025 at 2:54 PM

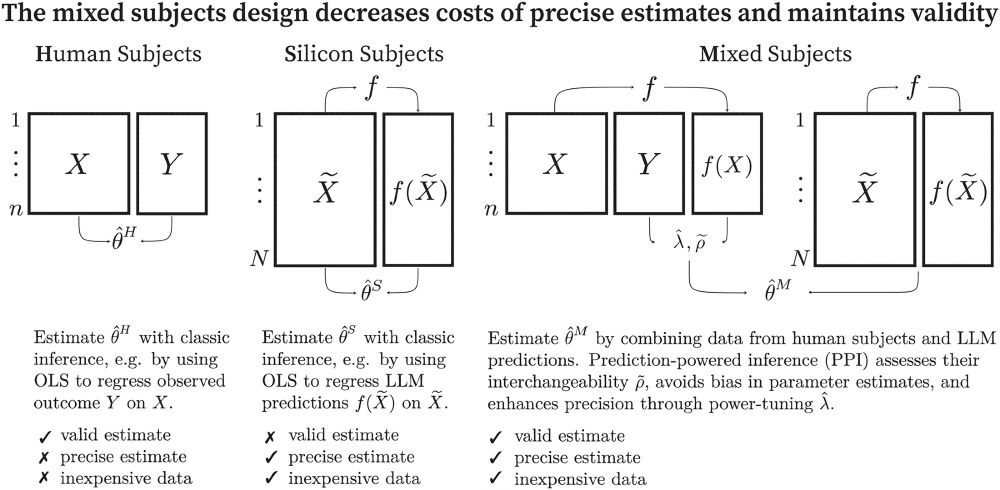

Broska, Howes, and van Loon propose the mixed-subjects approach, providing a methodology for combining estimates from human subjects and silicon samples journals.sagepub.com/doi/abs/10.1...

August 1, 2025 at 2:54 PM

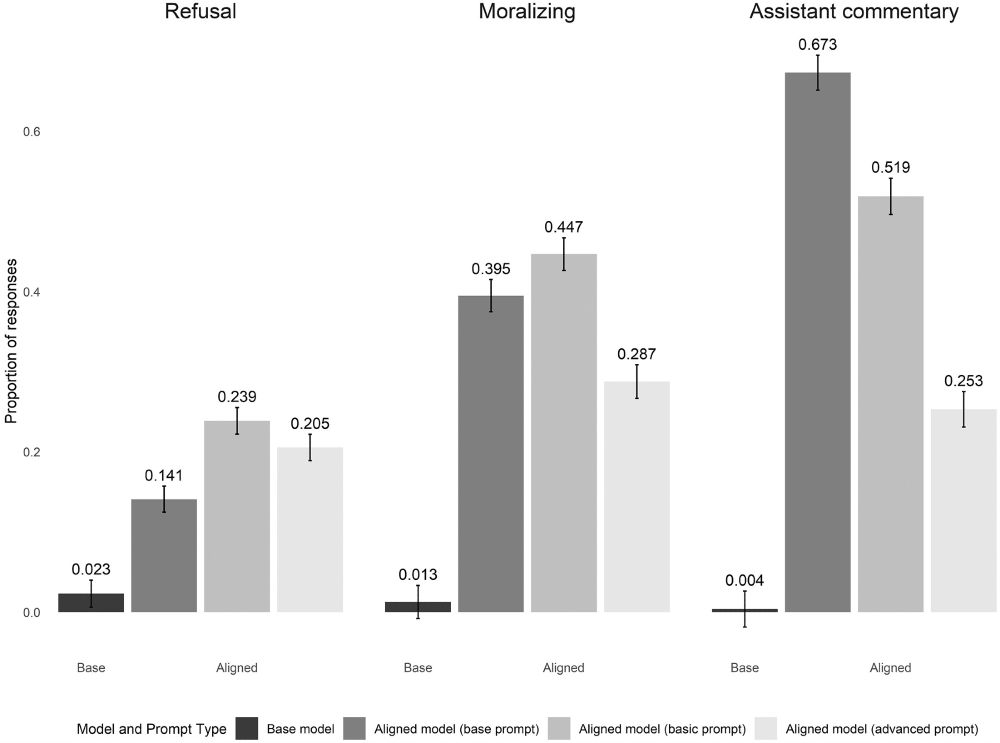

Lyman and colleagues explore the trade-offs between using instruction-tuned models and base versions of LLMs for downstream tasks, emphasizing the need to better understand model training journals.sagepub.com/doi/abs/10.1...

August 1, 2025 at 2:54 PM

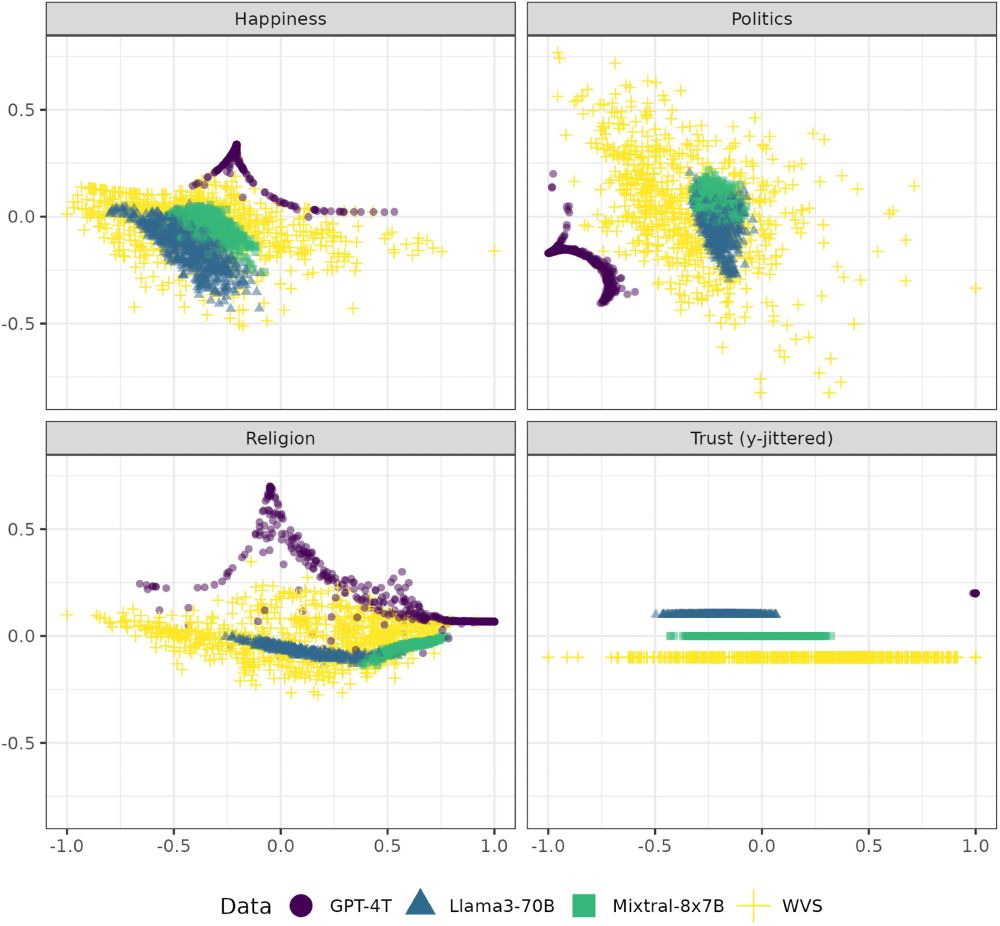

Boelaert and colleagues pose a challenge to work that uses LLMs as substitutes for humans in surveys, finding that AI models exhibit idiosyncratic biases they term “machine bias” journals.sagepub.com/doi/abs/10.1...

August 1, 2025 at 2:54 PM

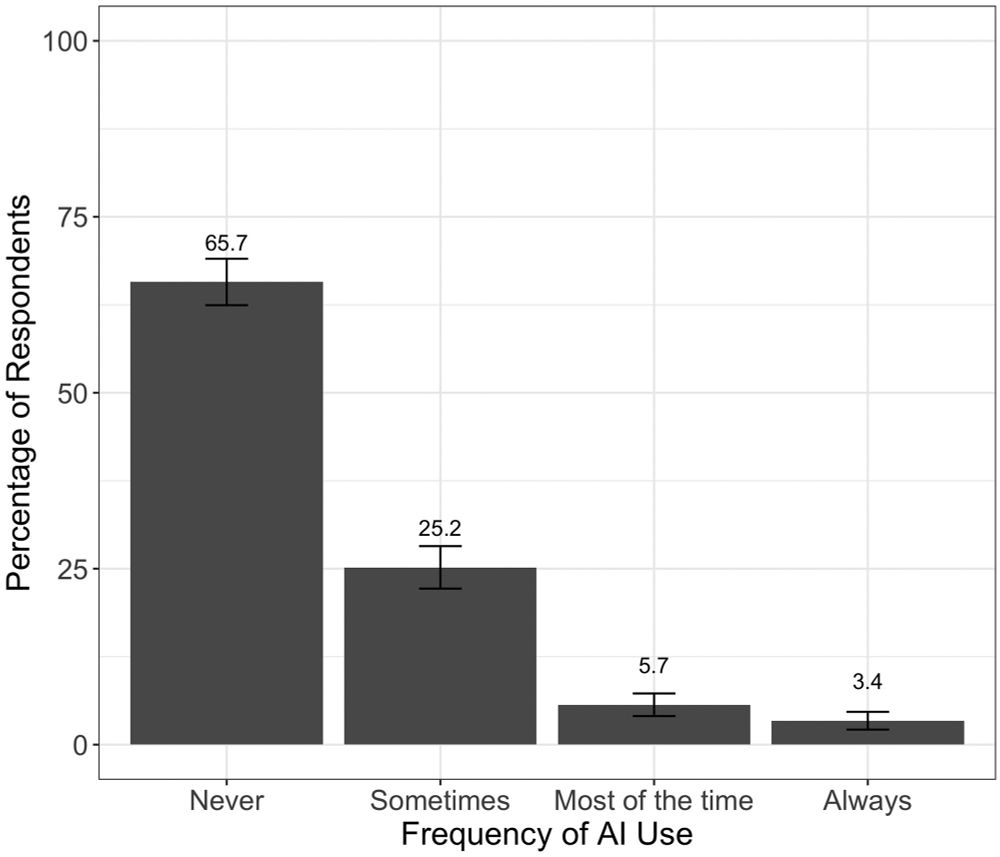

Zhang, Xu, and Alvero show how online survey participants are already using AI for open-ended responses, demonstrating how many researchers will have to grapple with the impacts of AI-generated data journals.sagepub.com/doi/abs/10.1...

August 1, 2025 at 2:54 PM

I’m delighted to share that the August 2025 special issue of Sociological Methods & Research on Generative AI is out now. My co-editor, Daniel Karell, and I put together this issue to build on the conference we organized last year.

Here's a thread on each of the ten papers:

August 1, 2025 at 2:54 PM

It was an honor to visit the University of Kansas to give the 2025 Blackmar Lecture.

I had a great time learning about the department and the legacy of Frank Blackmar, who taught the first sociology class in the US, which has continued for 135 years www.asanet.org/frank-w-blac...

May 2, 2025 at 6:10 PM

Our article on using LLMs for text classification is out now in SMR! 🤖🧑💻💬

We compare different learning regimes, from zero-shot to instruction-tuning, and share recommendations for sociologists and other social scientists interested in using these models.

doi.org/10.1177/0049...

April 24, 2025 at 2:36 PM

Hot off the press! @pardoguerra.bsky.social

April 21, 2025 at 5:51 PM

My review of Natalie-Anne Hall's recent book on Brexit supporters' post-referendum engagement on Facebook is out now in Contemporary Sociology: doi.org/10.1177/0094...

Hall's analysis explains why extremism and conspiracy theories resonate with online audiences (and some platform owners).

March 13, 2025 at 5:19 PM

My article on Generative AI and Sociology is out now in Socius!

I explore the applications of GenAI across computational, qualitative, and experimental research, and discuss important issues including bias, reliability, and interpretability.

journals.sagepub.com/doi/full/10....

September 23, 2024 at 2:31 PM

Join our CBSM small group on computational methods for a discussion of new data, methodologies, and the future of social movements research 🪧 💻📱#️⃣🤖

With @lauraknelson.bsky.social, @haphazardsoc.bsky.social, Josh Zhang, Eunkyung Song, and Danny Karell. PM me for Zoom details.

March 21, 2024 at 9:28 PM

Seeing some discussion about AI course policies. It's the first time teaching my CSS classes in a couple of years, so I came up with this.

Interested to hear any feedback / how others have addressed this in technical courses.

January 16, 2024 at 8:17 PM

Submit an abstract to the generative AI and sociology workshop, due next Friday 12/15

We also welcome submissions from other social scientists and fellow travelers

For more info visit: tinyurl.com/soc-gen-ai

December 6, 2023 at 9:56 PM
