Tobias Werner

@tfwerner.com

Postdoc at the Center for Humans and Machines (CHM/MPIB) | PhD in Economics | Affiliated with DICE/HHU & BCCP

I am on the economics job market 2024/2025.

Currently visiting the EconCS group @ Harvard.

Tfwerner.com

💡 Takeaway: Humans-in-the-loop are key.

When people are involved, even a "collusive" AI can lead to more competition and lower prices.

Full paper: arxiv.org/abs/2510.27636

Delegate Pricing Decisions to an Algorithm? Experimental Evidence

We analyze the delegation of pricing by participants, representing firms, to a collusive, self-learning algorithm in a repeated Bertrand experiment. In the baseline treatment, participants set prices ...

arxiv.org

November 3, 2025 at 11:30 AM

🔧 Why? (2/2): In RECOMMENDATION, adoption is high, but adherence is low.

Participants frequently override the AI's advice, resulting in longer price wars than in the baseline.

November 3, 2025 at 11:30 AM

🔧 Why? (1/2): In OUTSOURCING, non-adopters often use a cyclic-deviation strategy (repeatedly undercutting the AI).

This is not profitable, but it punishes adopters & discourages delegation. Our evidence suggests that this is driven by spite towards AI, not myopia.

November 3, 2025 at 11:30 AM

📉 Result 2 (Prices): Pro-competitive effects of AI!

We thought the collusive AI would raise prices.

However, by the end, prices are significantly lower in both AI treatments than in the human-only BASELINE.

November 3, 2025 at 11:30 AM

📈 Result 1 (Adoption): Participants delegate a lot.

But control matters. Adoption is significantly higher when participants can override the AI (RECOMMENDATION).

November 3, 2025 at 11:30 AM

We ran a lab experiment with 3 conditions:

1️⃣ BASELINE: Humans only.

2️⃣ OUTSOURCING: Full delegation to a collusive AI.

3️⃣ RECOMMENDATION: AI advice, but humans can override it.

November 3, 2025 at 11:30 AM

Most work on AI collusion simulates AI vs. AI and simply assumes firms use them. This overlooks the (human) decision to adopt.

So we ask: Will firms delegate to a collusive AI? And how does this strategic choice change market outcomes?

November 3, 2025 at 11:30 AM

And yes: I thought before leaving Berlin I had to go full Berlin and get a bleached buzzcut

September 1, 2025 at 1:41 PM

Absolutely! That's part of the solution, but online studies also offer key advantages, like more representative samples + larger N. Hopefully, we'll see more cross-lab collaborations.

August 6, 2025 at 9:57 AM

If you run studies online or use platforms like Prolific, MTurk, or CloudResearch, this impacts you!

We hope this paper encourages discussion, collaboration, and improved safeguards.

🔗 arxiv.org/abs/2508.01390

August 5, 2025 at 8:04 AM

At the end of the day, this isn't just a researcher's problem.

Platforms that advertise "100% human" samples must be held accountable.

Researchers need tools and transparency to protect their work, and in some cases, it may be time to go back to the physical lab!

August 5, 2025 at 8:04 AM

We propose a layered defence strategy, but let's be clear: this is already an arms race.

Some measures:

- reCAPTCHA and Cloudflare

- Multimodal instructions (images, audio)

- Input restrictions (no paste, voice answers)

- Behavioural logging

- Platform enforcement

August 5, 2025 at 8:04 AM
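
To make the "input restrictions" and "behavioural logging" layers above a bit more concrete, here is a minimal Python sketch of how logged signals could be turned into screening flags. Everything in it is an illustrative assumption rather than the paper's method: the `Response` fields, the phrase list, and the typing-speed threshold are hypothetical, and a real study would need its own validated criteria.

```python
import re
from dataclasses import dataclass

# Hypothetical telltale phrases; an assumption for illustration only.
AI_PHRASES = re.compile(r"\bas an (ai|artificial)\b|\blanguage model\b", re.IGNORECASE)


@dataclass
class Response:
    text: str              # the open-ended answer
    paste_events: int      # assumed to be counted client-side by the survey page
    seconds_typing: float  # assumed time between first and last keystroke


def screening_flags(r: Response, max_chars_per_second: float = 15.0) -> list[str]:
    """Return the names of all screening layers that this response trips."""
    flags = []
    if r.paste_events > 0:
        flags.append("paste")
    if AI_PHRASES.search(r.text):
        flags.append("ai_phrase")
    # Flag missing timing data or text produced faster than the assumed threshold.
    if r.seconds_typing <= 0 or len(r.text) / r.seconds_typing > max_chars_per_second:
        flags.append("implausible_speed")
    return flags


suspect = Response("As an artificial entity, I have no preferences.", 1, 0.5)
human = Response("I picked the lower price because I wanted to win.", 0, 20.0)
print(screening_flags(suspect))
print(screening_flags(human))
```

No single flag is conclusive on its own, which is the point of layering: a response would be reviewed or excluded only according to rules the researcher fixes in advance.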

LLM Pollution scrambles your data:

🧠 Dampens variance

📈 Inflates effects

📊 Mocks WEIRD norms

🔍 Obscures who your participants really are

… all while staying nearly undetectable!

August 5, 2025 at 8:04 AM

In a recent Prolific pilot, 45% of participants copied/pasted open-ended items or showed signs of AI-generated content, with responses starting like "As an artificial entity…"

This is contamination, not noise!

August 5, 2025 at 8:04 AM

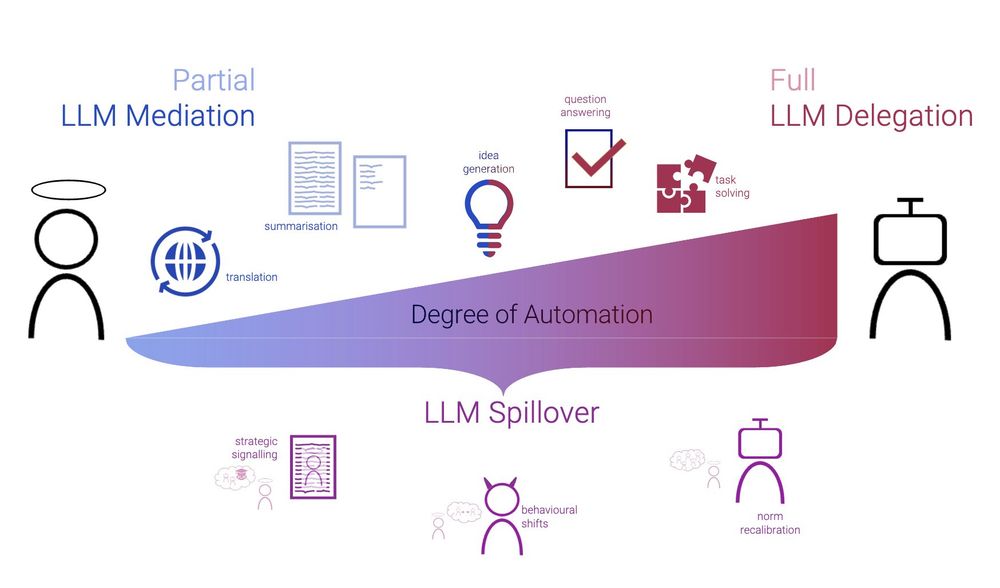

We map three invasion paths for LLMs:

1. Partial Mediation: LLM assists with rephrasing or answering.

2. Full Delegation: Agents like OpenAI Operator handle everything.

3. Spillover: Humans act differently due to bot expectations.

(See figure below.)

August 5, 2025 at 8:04 AM

This isn't sci-fi. Tools like OpenAI's Operator and open-source browser agents can now read surveys, click consent, and answer your questions, all without a human.

LLM Pollution is not hypothetical. It is happening now.

We map three variants in the paper 👇

August 5, 2025 at 8:04 AM
