5. Launch criteria should identify "final" metrics, with conversion factors from "proximal" metrics. Don't base launch decisions on statistical significance.

1. Experiments are not the primary way we learn causal relationships.

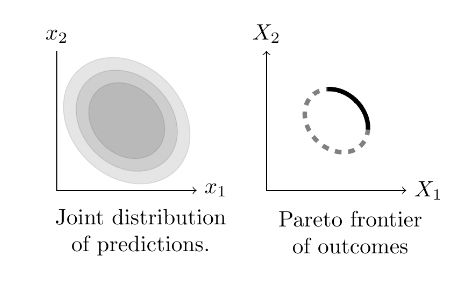

2. A simple Gaussian model gives you a robust way of thinking about challenging cases.

3. The Bayesian approach makes it easy to think about things that are confusing (multiple testing, peeking, selective reporting).
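
As a rough illustration of points 2 and 3, here is a minimal sketch of one common reading of a "simple Gaussian model": the true effect has a Normal prior, and the experiment's estimate is that true effect plus Normal noise. The function name `gaussian_posterior` and the parameters `prior_mean`, `prior_sd`, and `std_error` are assumptions for illustration, not names from the original.

```python
def gaussian_posterior(observed, std_error, prior_mean=0.0, prior_sd=1.0):
    """Posterior mean and sd of a true effect, assuming a Normal prior
    on the effect and Normal noise on the observed estimate."""
    prior_precision = 1.0 / prior_sd ** 2
    data_precision = 1.0 / std_error ** 2
    # Precisions add; the posterior mean is a precision-weighted average
    # of the prior mean and the observed estimate.
    posterior_var = 1.0 / (prior_precision + data_precision)
    posterior_mean = posterior_var * (
        prior_precision * prior_mean + data_precision * observed
    )
    return posterior_mean, posterior_var ** 0.5


# Illustrative numbers only: an observed lift of 2.0 with standard error 1.0,
# against a prior centered at 0 with standard deviation 1.0.
mean, sd = gaussian_posterior(observed=2.0, std_error=1.0,
                              prior_mean=0.0, prior_sd=1.0)
print(f"posterior mean={mean:.3f}, sd={sd:.3f}")
```

When the prior and the experiment are equally precise, as in these made-up numbers, the estimate is shrunk halfway toward the prior mean. A noisier experiment (larger `std_error`) is shrunk further, which is one way to reason about a surprising result without relying on a significance threshold.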