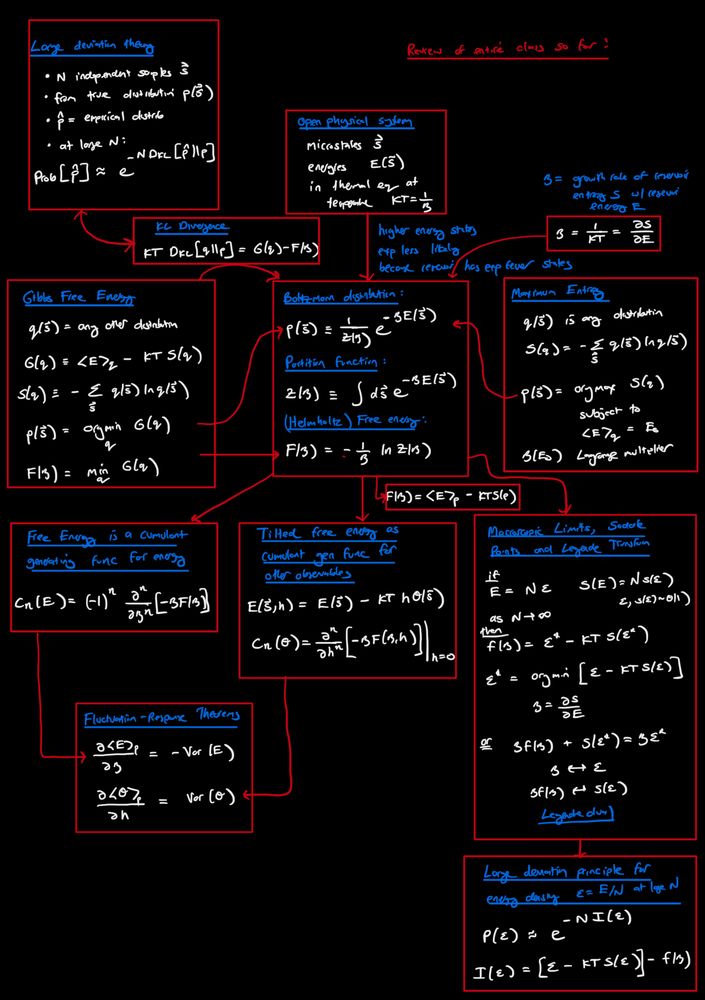

arxiv.org/abs/2509.12202

arxiv.org/abs/2412.20292

Our closed-form theory needs no training, is mechanistically interpretable, and accurately predicts diffusion model outputs, with a median r^2 of about 0.9.
