🔗 https://stojnicv.xyz

📅 October 22, 2025, 14:30 – 16:30 HST

📍 Location: Exhibit Hall I, Poster #207

Here, we show kNN classification in a few cases, depending on whether the semantic positives and negatives share the same processing parameters as the test image.

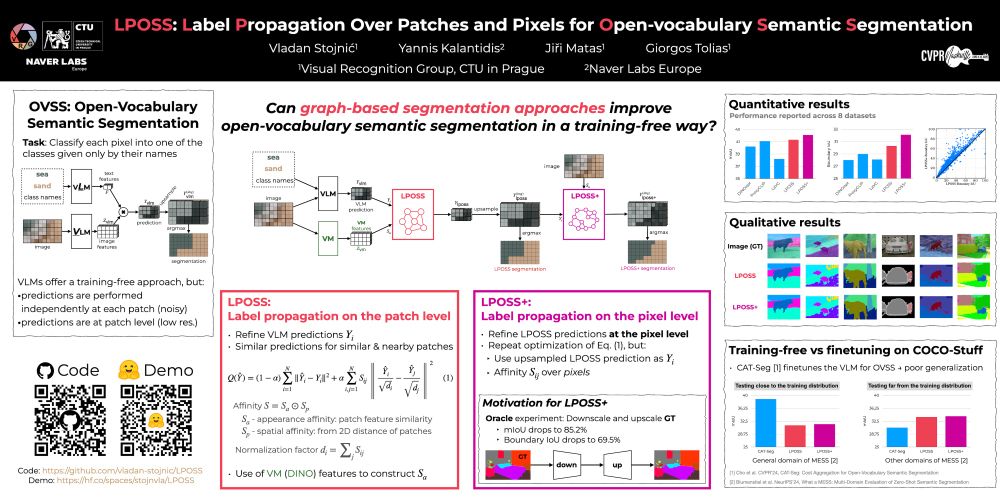

We show how graph-based label propagation can be used to improve weak, patch-level predictions from VLMs for open-vocabulary semantic segmentation.

📅 June 13, 2025, 16:00 – 18:00 CDT

📍 Location: ExHall D, Poster #421

LPOSS is a training-free method for open-vocabulary semantic segmentation using Vision-Language Models.
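
To make the idea of graph-based label propagation concrete, here is a minimal sketch of the generic technique on a toy graph. It is not the LPOSS implementation: the function names, the cosine-similarity kNN graph, and the parameters `k`, `alpha`, and `iterations` are all illustrative assumptions. Each node stands in for an image patch with a feature vector and an initial (possibly wrong) per-class score.

```python
import math


def cosine(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def propagate_labels(features, initial_scores, k=2, alpha=0.9, iterations=50):
    """Smooth per-node class scores over a kNN graph (hypothetical helper)."""
    n = len(features)

    # Build a symmetric kNN graph weighted by non-negative cosine similarity.
    weights = [[0.0] * n for _ in range(n)]
    for i in range(n):
        sims = sorted(
            ((cosine(features[i], features[j]), j) for j in range(n) if j != i),
            reverse=True,
        )
        for sim, j in sims[:k]:
            w = max(sim, 0.0)
            weights[i][j] = max(weights[i][j], w)
            weights[j][i] = max(weights[j][i], w)

    # Symmetric normalisation: S = D^{-1/2} W D^{-1/2}.
    inv_sqrt = [1.0 / math.sqrt(max(sum(row), 1e-12)) for row in weights]
    s = [[weights[i][j] * inv_sqrt[i] * inv_sqrt[j] for j in range(n)]
         for i in range(n)]

    # Iterate Z <- alpha * S Z + (1 - alpha) * Y, starting from Z = Y.
    num_classes = len(initial_scores[0])
    z = [row[:] for row in initial_scores]
    for _ in range(iterations):
        z = [
            [
                alpha * sum(s[i][j] * z[j][c] for j in range(n))
                + (1 - alpha) * initial_scores[i][c]
                for c in range(num_classes)
            ]
            for i in range(n)
        ]
    return z
```

In this sketch, a node whose initial score disagrees with its nearest neighbours in feature space is pulled toward their consensus, which is the sense in which propagation can refine weak patch-level predictions.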