https://stellalisy.com

r/AskHistorians:📚values verbosity

r/RoastMe:💥values directness

r/confession:❤️values empathy

We visualize each group’s unique preference decisions—no more one-size-fits-all. Understand your audience at a glance🏷️

📈Performance boost: +46.6% vs GPT-4o

💪Outperforms other training-based baselines w/ statistical significance

🕰️Robust to temporal shifts: trained pref models can be used out of the box!

1: 🎛️Train compact, efficient detectors for every attribute

2: 🎯Learn community-specific attribute weights during preference training

3: 🔧Add attribute embeddings to preference model for accurate & explainable predictions
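The three steps above can be sketched with a simplified linear stand-in. Hypothetical detectors score each response on a few attributes. Per-community weights are learned from (preferred, rejected) pairs with a Bradley-Terry objective. The weighted attribute scores then double as an explanation. The detector heuristics, the attribute subset, the learning rate, and the linear combination itself are all assumptions made for illustration. The thread describes trained detectors and attribute embeddings added to a neural preference model, not this exact setup.

```python
import math
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-ins for trained attribute detectors (toy heuristics only).
DETECTORS: Dict[str, Callable[[str], float]] = {
    "verbosity": lambda text: min(len(text.split()) / 100.0, 1.0),
    "directness": lambda text: 0.0 if text.lower().startswith(("well", "perhaps", "maybe")) else 1.0,
    "empathy": lambda text: 1.0 if any(w in text.lower() for w in ("sorry", "understand", "feel")) else 0.0,
}


def attribute_vector(text: str) -> Dict[str, float]:
    """Step 1: score a response with every attribute detector."""
    return {name: detect(text) for name, detect in DETECTORS.items()}


def reward(text: str, weights: Dict[str, float]) -> float:
    """Step 2: weighted sum of attribute scores using community-specific weights."""
    scores = attribute_vector(text)
    return sum(weights[name] * score for name, score in scores.items())


def prefer_prob(a: str, b: str, weights: Dict[str, float]) -> float:
    """Bradley-Terry probability that response `a` is preferred over `b`."""
    return 1.0 / (1.0 + math.exp(-(reward(a, weights) - reward(b, weights))))


def fit_weights(pairs: List[Tuple[str, str]], epochs: int = 200, lr: float = 0.5) -> Dict[str, float]:
    """Learn one community's weights by gradient ascent on the Bradley-Terry log-likelihood."""
    weights = {name: 0.0 for name in DETECTORS}
    for _ in range(epochs):
        for chosen, rejected in pairs:
            xc, xr = attribute_vector(chosen), attribute_vector(rejected)
            p = prefer_prob(chosen, rejected, weights)
            for name in weights:
                # d/dw log sigmoid(w . (xc - xr)) = (1 - p) * (xc - xr)
                weights[name] += lr * (1.0 - p) * (xc[name] - xr[name])
    return weights


def explain(text: str, weights: Dict[str, float]) -> Dict[str, float]:
    """Step 3 (explainability): each attribute's contribution to the reward."""
    return {name: weights[name] * score for name, score in attribute_vector(text).items()}


# Usage: a toy community that rewards empathetic replies.
pairs = [("I'm so sorry, I understand how you feel.", "Honestly, that was your fault.")]
weights = fit_weights(pairs)
print(weights)
print(explain(pairs[0][0], weights))
```

Each attribute's contribution is an explicit term in the reward, so `explain` shows *why* one reply outranks another. Training on pairs from a community that rewards directness instead would shift the learned weights toward that attribute.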

📜Define 19 sociolinguistic & cultural attributes from the literature

🏭Novel preference data generation pipeline to isolate attributes

Our data gen pipeline generates pairwise data on *any* decomposed dimension, w/ applications beyond preference modeling

🔍⚖️models preferences w/ 19 attribute detectors and dynamic, context-aware weights

🕶️👍uses unobtrusive signals from Reddit to avoid response bias

🧠mirrors attribute-mediated human judgment—so you know not just what it predicts, but *why*🧐

Reward models treat preference as a black box😶‍🌫️ but human brains🧠 decompose decisions into hidden attributes

We built the first system to mirror how people really make decisions in our recent COLM paper🎨PrefPalette✨

Why it matters👉🏻🧵

ALFA-trained models maintain strong performance even on completely new interactive medical tasks (MediQ-MedQA), highlighting ALFA’s potential for broader applicability in real-world clinical scenarios‼️

Removing any single attribute hurts performance‼️

Grouping general (clarity, focus, answerability) vs. clinical (medical accuracy, diagnostic relevance, avoiding DDX bias) attributes leads to drastically different outputs👩‍⚕️

Check out some cool examples!👇

Is it just good synthetic data❓ No❗️

Simply showing good examples isn't enough! Models need to learn directional differences between good and bad questions.

(But SFT alone, without DPO, doesn't work either!)
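A pairwise objective such as DPO encodes the thread's point about *directional* differences. The standard per-pair DPO loss depends only on the margin by which the policy favors the better question over the worse one, with both measured relative to a frozen reference model. A minimal sketch, assuming sequence log-probabilities are already computed. The numbers and `beta=0.1` are illustrative, not ALFA's training settings.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return x + math.log1p(math.exp(-x)) if x > 0 else math.log1p(math.exp(x))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one (chosen, rejected) pair of sequence log-probs."""
    # How much more the policy favors chosen over rejected, relative to the reference.
    margin = (policy_chosen_logp - ref_chosen_logp) - (policy_rejected_logp - ref_rejected_logp)
    # -log(sigmoid(z)) == softplus(-z)
    return softplus(-beta * margin)


# Usage: policy identical to the reference -> margin 0 -> loss = log 2.
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))  # ≈ 0.693
# Policy shifts probability toward the good question and away from the bad one.
print(dpo_loss(-10.0, -17.0, -12.0, -15.0))  # ≈ 0.513
```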

ALFA-aligned models achieve:

⭐️56.6% reduction in diagnostic errors🦾

⭐️64.4% win rate in question quality✅

⭐️Strong generalization.

(All relative to SoTA instruction-tuned baseline LLMs.)

A systematic, general question-asking framework that:

1️⃣ Decomposes the concept of good questioning into attributes📋

2️⃣ Generates targeted attribute-specific data📚

3️⃣ Teaches LLMs through preference learning🧑‍🏫
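Steps 1 and 2 can be sketched as turning one question into attribute-specific (chosen, rejected) pairs that differ along a single attribute. Step 3 would then feed these pairs to a preference-learning objective such as DPO. The sketch assumes a rewriter that makes a question better or worse along one attribute. `toy_rewrite` is a hypothetical placeholder for what would in practice be an LLM prompt, and the enhance/corrupt scheme is an illustrative assumption. The attribute names come from this thread.

```python
from dataclasses import dataclass
from typing import Callable, List

# Attributes named in this thread, split into the general vs. clinical groups.
GENERAL = ["clarity", "focus", "answerability"]
CLINICAL = ["medical accuracy", "diagnostic relevance", "avoiding DDX bias"]

# Hypothetical rewriter: (question, attribute, direction) -> rewritten question.
Rewriter = Callable[[str, str, str], str]


@dataclass
class PreferencePair:
    context: str
    attribute: str
    chosen: str
    rejected: str


def build_pairs(context: str, question: str, attributes: List[str],
                rewrite: Rewriter) -> List[PreferencePair]:
    """One (chosen, rejected) pair per attribute, varying only that attribute."""
    pairs = []
    for attr in attributes:
        chosen = rewrite(question, attr, "enhance")
        rejected = rewrite(question, attr, "corrupt")
        pairs.append(PreferencePair(context, attr, chosen, rejected))
    return pairs


def toy_rewrite(question: str, attribute: str, direction: str) -> str:
    # Placeholder: only tags the text; a real pipeline would prompt an LLM to rewrite it.
    return f"[{direction}:{attribute}] {question}"


# Usage: six attribute-specific pairs from a single follow-up question.
pairs = build_pairs("45-year-old with chest pain", "When did the pain start?",
                    GENERAL + CLINICAL, toy_rewrite)
for p in pairs:
    print(p.attribute, "|", p.chosen, ">", p.rejected)
```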

Our new framework ALFA (ALignment with Fine-grained Attributes) teaches LLMs to PROACTIVELY seek information by asking better questions, guided by **structured rewards**🏥❓

(co-led with @jiminmun.bsky.social)

👉🏻🧵

• Adding rationale generation (RG) improves diagnostic accuracy and reduces calibration error.

• Self-consistency boosts reliability (but only with RG!).

Combining both enhances performance by 22%.

Each piece matters for better LLM reasoning! 📊
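Self-consistency is usually implemented by sampling several rationale-plus-answer generations and taking a majority vote. The agreement rate can then double as a confidence signal. A minimal sketch, assuming the final answer has already been extracted from each sampled rationale as a string. The normalization and the use of vote share as confidence are illustrative choices, not necessarily how this system computes them.

```python
from collections import Counter
from typing import List, Tuple


def self_consistency(answers: List[str]) -> Tuple[str, float]:
    """Majority vote over sampled final answers; vote share is used as confidence."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    # Light normalization so trivially different strings count as the same answer.
    counts = Counter(a.strip().lower() for a in answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(answers)


# Usage: five sampled (rationale -> answer) generations for one case.
samples = ["Pneumonia", "pneumonia", "Bronchitis", "Pneumonia ", "Asthma"]
print(self_consistency(samples))  # ('pneumonia', 0.6)
```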

📉SOTA LLMs fail at interactive clinical reasoning‼️ They don’t ask questions.

👑BEST Expert improves diagnostic accuracy by 22% w/ abstention module.

🗨️ Iterative questioning helped gather crucial missing information in incomplete scenarios.

✅If confident, it provides a final answer.

❓If unsure, it asks targeted questions to gather more info.

This process mimics how clinicians manage uncertainty, reducing errors and improving safety.
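The answer-or-ask rule can be sketched as a confidence threshold inside a questioning loop with a simulated patient. Everything here is a hypothetical toy. `ToyPatient`, `ToyExpert`, the "share of key findings gathered" confidence, and the 0.75 threshold stand in for the LLM-based patient and expert systems and their abstention mechanism.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ToyPatient:
    """Hypothetical patient simulator: reveals only what it is asked about."""
    record: Dict[str, str]

    def answer(self, topic: str) -> str:
        return self.record.get(topic, "I'm not sure.")


@dataclass
class ToyExpert:
    """Hypothetical expert: confidence is the share of key findings gathered so far."""
    key_topics: List[str]
    threshold: float = 0.75
    known: Dict[str, str] = field(default_factory=dict)

    def confidence(self) -> float:
        return len(self.known) / len(self.key_topics)

    def next_question(self) -> Optional[str]:
        missing = [t for t in self.key_topics if t not in self.known]
        return missing[0] if missing else None


def consult(expert: ToyExpert, patient: ToyPatient, max_turns: int = 10) -> List[str]:
    """Ask targeted questions until confident (or out of questions/turns), then answer."""
    transcript: List[str] = []
    for _ in range(max_turns):
        if expert.confidence() >= expert.threshold:
            break  # confident enough: stop asking and commit to an answer
        topic = expert.next_question()
        if topic is None:
            break
        reply = patient.answer(topic)
        expert.known[topic] = reply
        transcript.append(f"Q: {topic}? A: {reply}")
    transcript.append(f"FINAL (confidence {expert.confidence():.2f})")
    return transcript


# Usage: the expert starts with no findings and asks until it crosses the threshold.
patient = ToyPatient({"onset": "2 days ago", "fever": "yes", "cough": "productive", "travel": "none"})
expert = ToyExpert(["onset", "fever", "cough", "travel"])
for line in consult(expert, patient):
    print(line)
```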

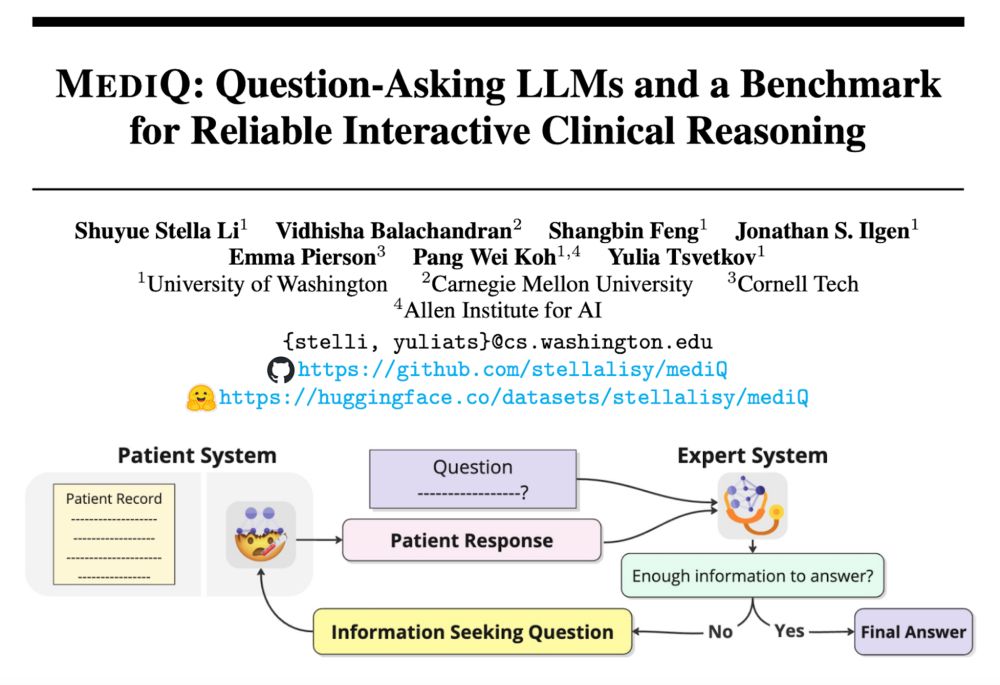

🤒Patient System: Simulates realistic patient responses with partial info.

🩺Expert System: Decides when to ask questions or answer using an abstention mechanism.

📊 Benchmark: Tests LLMs’ ability to handle iterative decision-making in medicine.
