Actually, our methodology is extremely cheap, runs mostly on CPU, and adds less than 1% overhead.

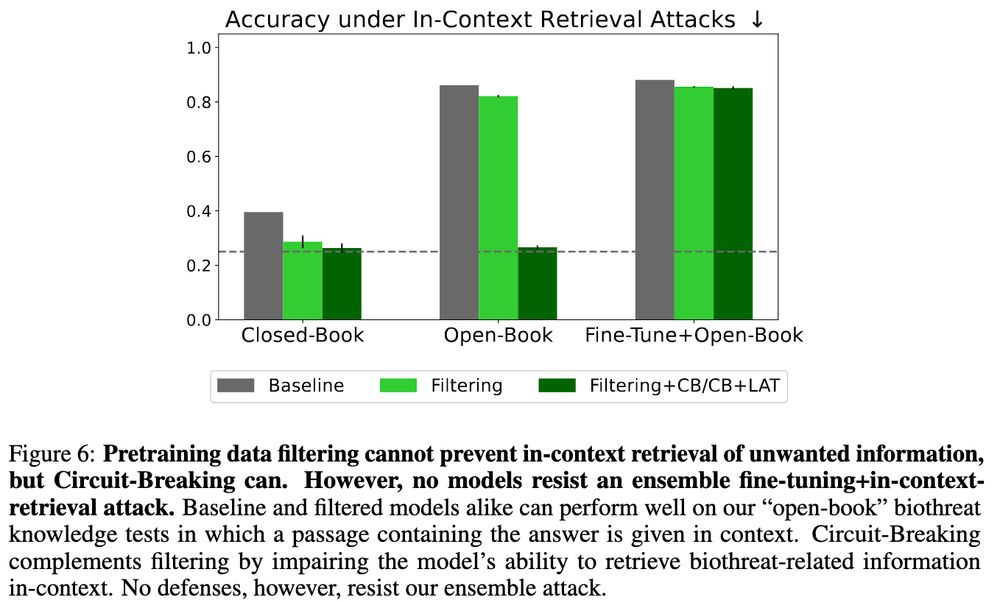

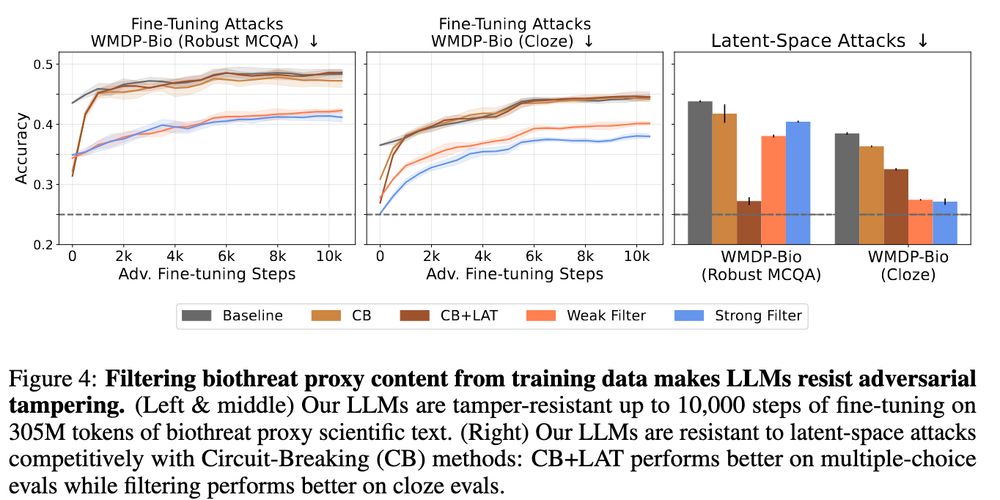

@eleutherai.bsky.social and the UK AISI joined forces to see what would happen, pretraining three 6.9B models for 500B tokens and producing 15 models in total to study.

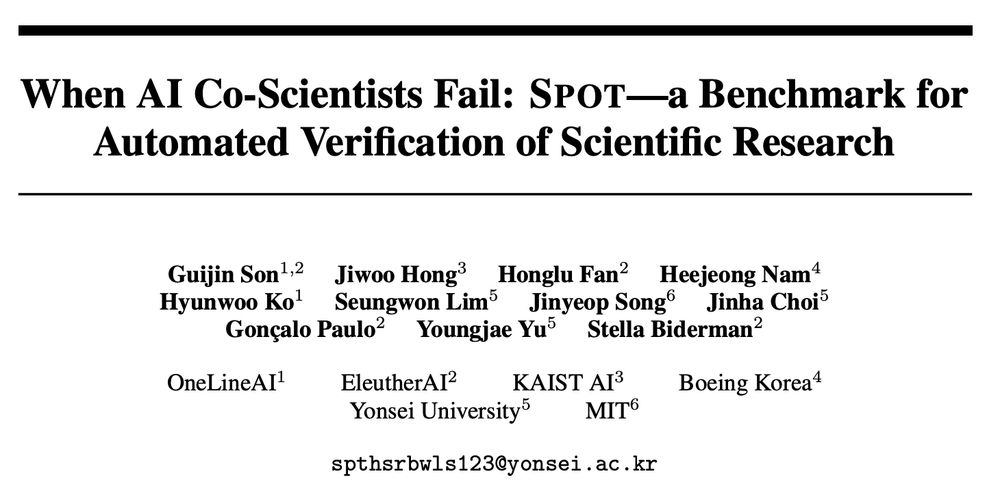

To make sure these errors are genuine, we only include those that have been acknowledged by the original authors!

For each error, we note its location and severity, along with a short human-written description.

We built SPOT, a dataset of STEM manuscripts across 10 fields annotated with real errors to find out.

(tl;dr not even close to usable) #NLProc

arxiv.org/abs/2505.11855

arxiv.org/abs/2306.17806

It's remarkable how what OpenAI considers "dangerous" changes continuously to exclude the thing they're currently selling. There was a time this was a red line.

It seems possible you could deliberately induce that, but again, is there evidence that it works well?

arxiv.org/abs/2411.19826

I sure hope there aren't multiple papers that match "J. Doe et al. NeurIPS (2023)."

arxiv.org/abs/2206.15378

Why would anyone do this?