📄 jmlr.org/papers/v26/2...

💻 github.com/stellatogrp/...

🔗 DOI: doi.org/10.1007/s10107-025-02261-w

📄 arXiv: arxiv.org/pdf/2403.033...

💻 Code: github.com/stellatogrp/...

🎥 Recording: https://euroorml.euro-online.org/

Big thanks to Dolores Romero Morales for the invitation! 🙌 #MachineLearning #Optimization #ORMS

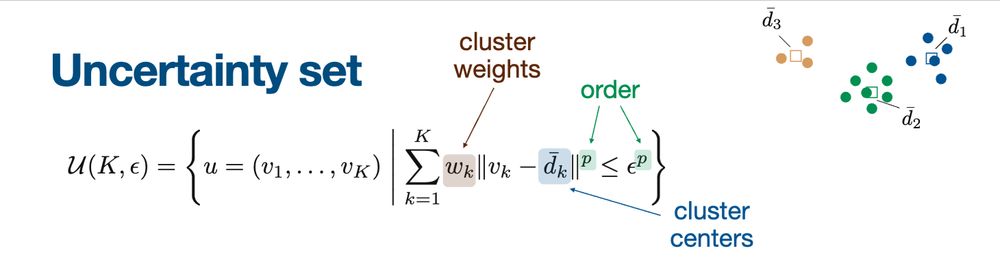

Work w/ my students Irina Wang (lead) and Cole Becker, in collab. w/ Bart Van Parys

🧵 (7/7)

📰 arXiv (longer version): https://buff.ly/3CT4aWD

👩‍💻 Code: https://buff.ly/3ATqAXh

w/ Irina Wang, Cole Becker, and Bart van Parys

A thread 🧵 (1/7)👇

Rajiv has done excellent work on learning optimization algorithms for large-scale and embedded optimization, with strong convergence and generalization guarantees.

He will soon start a postdoc at UPenn Engineering!

Missed the live sessions? Catch up on all the talks with the video recordings here: https://buff.ly/3YwomX6