They call any layer that can read a separate context plus a query a “contextual layer”.

Feed this layer’s output into a normal multilayer perceptron (MLP) and you get a “contextual block”.

For that block, the context acts exactly like a rank-1 additive patch on the

--- ...
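
The post is cut off before it names what the patch is applied to. The sketch below shows only the linear algebra behind such a patch. It assumes the contextual layer's output for the query feeds the MLP's first weight matrix W; the text itself does not say this. Call a the layer's output without context and a_ctx its output with context. The rank-1 matrix ΔW = W(a_ctx − a)aᵀ / ‖a‖² then satisfies (W + ΔW)a = W a_ctx. Under that assumption, an MLP that only sees W·z (before any bias and nonlinearity) gives the same output either way. The names `matvec` and `rank1_patch` and the toy numbers are hypothetical.

```python
import math


def matvec(W, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rank1_patch(W, a, a_ctx):
    """Return dW such that (W + dW) @ a == W @ a_ctx (up to float rounding).

    Assumption (not from the source): a and a_ctx are the contextual layer's
    outputs for the query without and with the context, and W is the MLP's
    first weight matrix. dW = (W @ (a_ctx - a)) a^T / ||a||^2 is an outer
    product of two vectors, so its rank is at most 1.
    """
    norm_sq = sum(x * x for x in a)
    if norm_sq == 0.0:
        raise ValueError("the context-free activation a must be non-zero")
    delta = [c - x for c, x in zip(a_ctx, a)]             # what the context changed
    u = matvec(W, delta)                                   # column vector W (a_ctx - a)
    return [[ui * aj / norm_sq for aj in a] for ui in u]   # u a^T / ||a||^2


# Toy numbers, chosen only for illustration.
W = [[1.0, 2.0, 0.0], [0.0, -1.0, 3.0]]
a = [1.0, 0.0, 2.0]
a_ctx = [0.5, 1.0, 1.0]
dW = rank1_patch(W, a, a_ctx)
patched = [[w + d for w, d in zip(rw, rd)] for rw, rd in zip(W, dW)]

# Patched weights applied to the context-free input reproduce the contextual output.
print(matvec(patched, a), matvec(W, a_ctx))
print(all(math.isclose(p, q) for p, q in zip(matvec(patched, a), matvec(W, a_ctx))))  # True
```

The patch depends on the context only through a_ctx. Every row of ΔW is a multiple of aᵀ, which is why it is rank 1.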

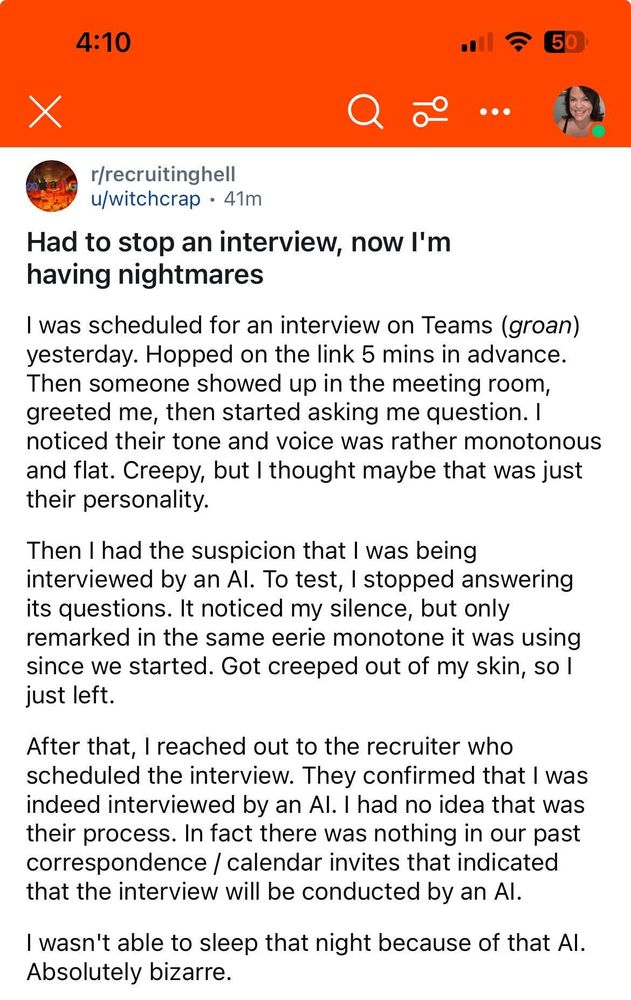

We live in a weird timeline…

👉 What’s the right generative modelling objective when data—not compute—is the bottleneck?

TL;DR:

▶️Compute-constrained? Train Autoregressive models

▶️Data-constrained? Train Diffusion models

Get ready for 🤿 1/n

--- ...

Across different unique data scales, we observe:

1️⃣ At low compute, Autoregressive models win.

2️⃣ After a certain amount of compute,

--- ...

---

paper : https://arxiv.org/abs/2507.15857
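
The thread compares the two objectives without spelling them out. This minimal sketch shows how each objective turns one training sequence into supervision. It assumes that "Diffusion" here means masked (absorbing-state) discrete diffusion over tokens with a linear masking schedule; the post does not say which variant is meant. It is not the paper's training code. `MASK`, `autoregressive_pairs`, and `masked_diffusion_sample` are hypothetical names.

```python
import random

MASK = "<mask>"  # hypothetical placeholder for the absorbing "masked" state


def autoregressive_pairs(tokens):
    """Next-token prediction: predict tokens[i] from the prefix tokens[:i].

    The factorization order is fixed (left to right), so revisiting the same
    sequence yields exactly the same (prefix, target) pairs every time.
    """
    return [(tuple(tokens[:i]), tokens[i]) for i in range(1, len(tokens))]


def masked_diffusion_sample(tokens, rng):
    """Draw one corrupted view of `tokens` for a masked-diffusion loss.

    A masking level t is drawn from (0, 1], and each token is independently
    replaced by MASK with probability t. The model must predict the original
    token at every masked position. Under the assumed linear schedule, those
    loss terms are weighted by 1 / t, which is returned alongside the view.
    """
    t = 1.0 - rng.random()  # rng.random() is in [0, 1), so t is in (0, 1]
    corrupted, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < t:
            corrupted.append(MASK)
            targets[i] = tok  # position -> token the model must recover
        else:
            corrupted.append(tok)
    return corrupted, targets, 1.0 / t


seq = ["the", "cat", "sat", "on", "the", "mat"]
print(autoregressive_pairs(seq))  # the same 5 pairs on every pass
rng = random.Random(0)
for epoch in range(3):
    corrupted, targets, weight = masked_diffusion_sample(seq, rng)
    print(epoch, corrupted, targets, round(weight, 2))
```

The difference the sketch highlights is simple. The autoregressive pairs for a sequence never change, but each call to `masked_diffusion_sample` draws a new masking level and a new set of masked positions. Repeated passes over the same data therefore pose different prediction tasks.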

Inverse Scaling in Test-Time Compute

This study shows that longer reasoning in Large Reasoning Models (LRMs) can hurt performance—revealing a surprising inverse scaling between reasoning length and accuracy. ...

🔓 Open for the community to leverage model weights & outputs

🛠️ API outputs can now be used for fine-tuning & distillation
