His thesis focused on structured visual representations:

• VidEdit - zero-shot text-to-video editing

• DiffCut - zero-shot segmentation via diffusion features

• JAFAR - high-res visual representation upsampling

- < 9h on a single A100 GPU.

- Improves across 6 segmentation benchmarks.

- Boosts performance for in-context depth prediction.

- Plug-and-play for different ViTs: DINOv2, CLIP, MAE.

- Robust in low-shot and domain-shift settings.

DIP doesn't require manually annotated segmentation masks for its post-training. Instead, it leverages Stable Diffusion (via DiffCut) alongside DINOv2R features to automatically construct in-context pseudo-tasks.

- Meta-learning inspired: Adopts episodic training principles.

- Task-aligned: Explicitly mimics downstream dense in-context tasks during post-training.

- Purpose-built: Optimizes the model for dense in-context performance.

Dense in-context scene understanding formulates dense prediction tasks as nearest-neighbor retrieval problems, using patch feature similarities between the query image and the labeled prompt images (introduced in @ibalazevic.bsky.social et al.’s HummingBird; figure below from their work).
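
To make the retrieval idea concrete, here is a minimal sketch, not the HummingBird or DIP implementation. It assumes patch features are already extracted as plain vectors, uses cosine similarity, and labels each query patch by majority vote over its k nearest prompt patches. The function names, the toy 2-D features, and the voting rule are all illustrative assumptions.

```python
import math
from collections import Counter


def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def knn_label_patches(query_feats, prompt_feats, prompt_labels, k=1):
    """Label each query patch by majority vote over its k most similar prompt patches.

    Hypothetical helper for illustration; real systems may weight the
    neighbors by similarity instead of taking a plain vote.
    """
    predictions = []
    for q in query_feats:
        # Rank all labeled prompt patches by similarity to this query patch.
        ranked = sorted(
            range(len(prompt_feats)),
            key=lambda i: cosine(q, prompt_feats[i]),
            reverse=True,
        )
        votes = Counter(prompt_labels[i] for i in ranked[:k])
        predictions.append(votes.most_common(1)[0][0])
    return predictions


# Toy data: 2-D "patch features" from a labeled prompt image (made-up values).
prompt_feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
prompt_labels = ["sky", "sky", "grass", "grass"]
query_feats = [[0.8, 0.2], [0.2, 0.8]]

pred = knn_label_patches(query_feats, prompt_feats, prompt_labels, k=3)
print(pred)  # ['sky', 'grass']
```

No weights are updated at inference time in this setup: prediction quality depends entirely on how well the patch features separate semantic regions, which is why better dense features translate directly into better in-context results.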

Introducing DIP: unsupervised post-training that enhances dense features in pretrained ViTs for dense in-context scene understanding.

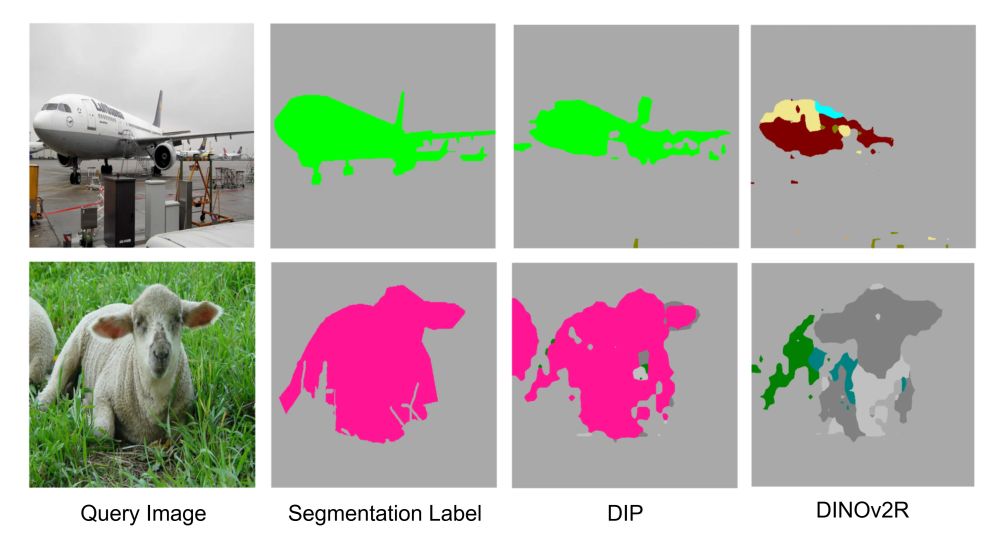

Below: Low-shot in-context semantic segmentation examples. DIP features outperform DINOv2!
