searchivarius.org/blog/curious...

5. Bonus point: of course, the complaints are often incorrect. If you push back further, the model will likely concede that it was wrong. That concession, in turn, may not mean much, because models are also clearly trained to agree with humans as much as possible.

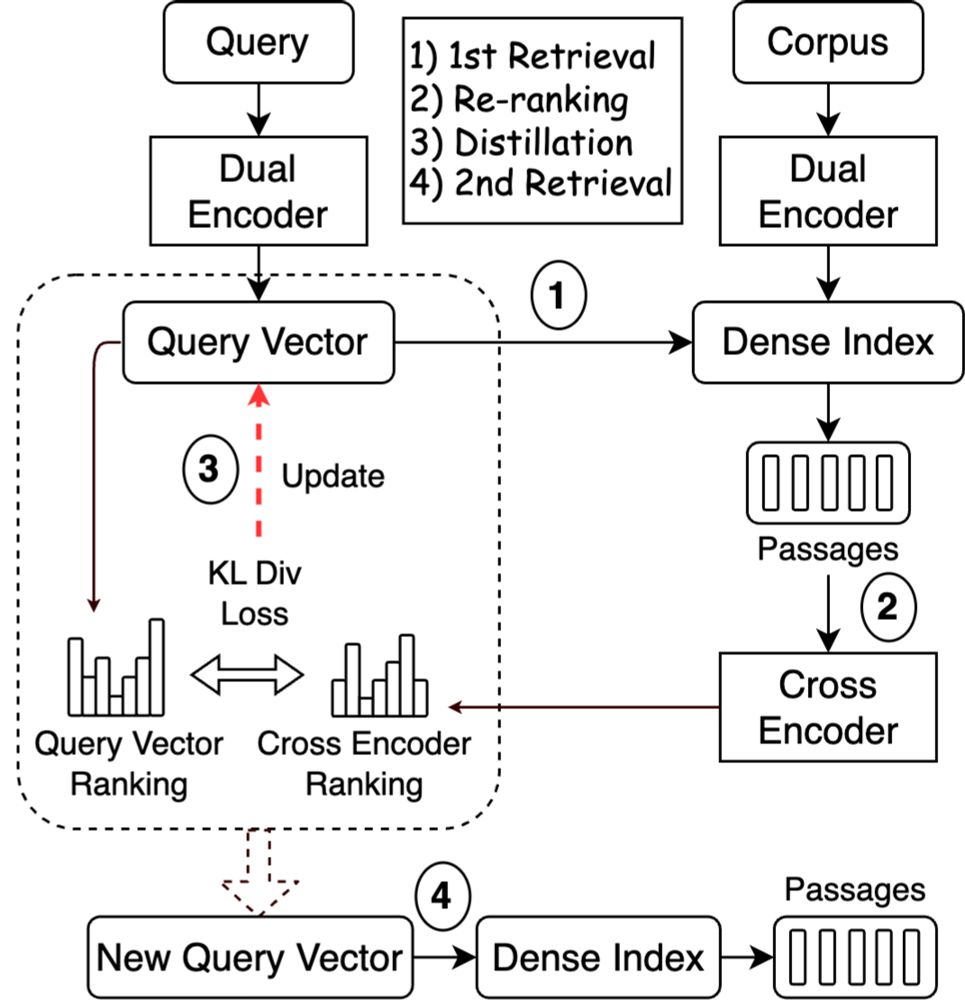

dl.acm.org/doi/abs/10.1...

research.ibm.com/publications...

x.com/srchvrs/stat...