Soroush Mirjalili

@soroushmirjalili.bsky.social

Postdoc in the Kuhl Lab at the University of Oregon, PhD from UT Austin. Episodic Memory | Computational Neuroscience | Cognitive Neuroscience | Machine Learning. 🌿 -> 🐝 -> 🐂 -> 🦆, he/him

soroushmirjalili.com

Thank you so much!!

October 15, 2025 at 6:05 PM

Thanks Ziyao!! :)

October 15, 2025 at 6:04 PM

This work was a true team effort, and I’d like to thank Wanjia Guo, Dominik Grätz, Eric Wang, Dr. Ulrich Mayr, and Dr. Brice Kuhl for their invaluable help throughout the process. I’m especially grateful to Brice for his guidance and support that extended far beyond this paper.

October 14, 2025 at 4:48 PM

These findings provide insight into how the hippocampus resolves memory interference 'one dimension at a time', demonstrating highly dynamic and adaptive processes that dramatically increase the representational distance between memories that are most at risk for interference.

October 14, 2025 at 4:48 PM

Finally, dimension-specific input-output functions in CA3/DG strikingly mirrored the sequential pattern observed in behavior: CA3/DG inverted each similarity dimension when it contributed to memory interference but preserved the dimension when it didn't contribute to interference.

October 14, 2025 at 4:48 PM

Among the 10 dimensions, the first 2 dimensions of similarity strongly predicted memory interference errors. However, their influence on behavior sharply changed with experience. Whereas one dimension drove interference earlier in learning, the other drove interference later.

October 14, 2025 at 4:48 PM

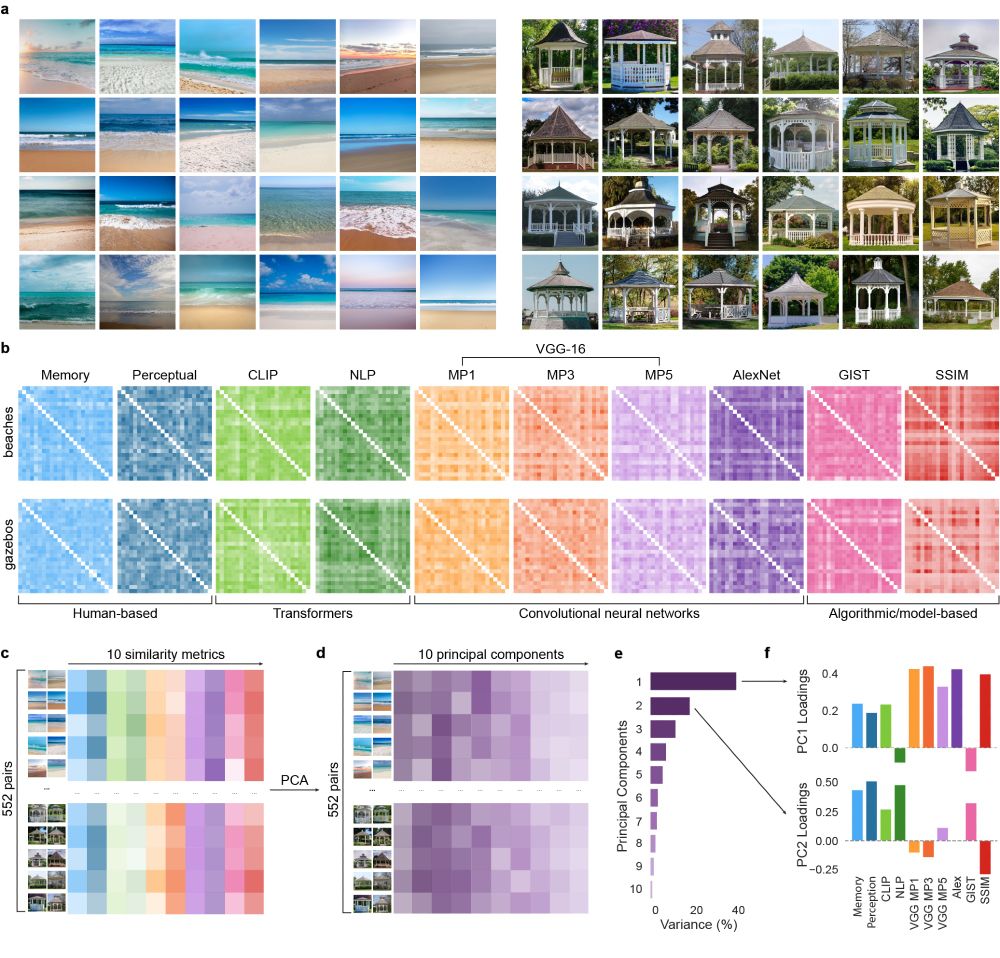

First, we generated a set of natural scene images from two visual categories and rigorously characterized similarity using a wide array of methods. We then applied PCA to these similarity matrices to identify orthogonal components (dimensions) of similarity across the 10 metrics.

October 14, 2025 at 4:48 PM
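
For readers curious about the PCA step in the post above, here is a minimal sketch of one way it could be set up. It is not the authors' pipeline. It assumes each of the 10 metrics yields a symmetric image-by-image similarity matrix, that the unique image pairs are treated as observations and the metrics as variables, and that each metric is z-scored before PCA. The function name `similarity_pca`, the image count, and the random input data are all hypothetical.

```python
import numpy as np

def similarity_pca(sim_matrices):
    """PCA across similarity metrics (illustrative sketch only).

    sim_matrices: array of shape (n_metrics, n_images, n_images),
    one symmetric similarity matrix per metric.
    """
    n_metrics, n_images, _ = sim_matrices.shape
    # Keep each unique image pair once (upper triangle, no diagonal).
    iu = np.triu_indices(n_images, k=1)
    # Rows are image pairs, columns are similarity metrics.
    X = np.stack([m[iu] for m in sim_matrices], axis=1)
    # Z-score each metric so no single metric dominates by scale (assumption).
    X = (X - X.mean(axis=0)) / X.std(axis=0)
    # PCA via SVD of the standardized data matrix.
    U, S, Vt = np.linalg.svd(X, full_matrices=False)
    scores = U * S                      # pairwise similarity along each component
    explained = S**2 / np.sum(S**2)     # fraction of variance per component
    return scores, Vt, explained

# Hypothetical stand-in data: 10 metrics, 12 images, random symmetric matrices.
rng = np.random.default_rng(0)
n_metrics, n_images = 10, 12
raw = rng.random((n_metrics, n_images, n_images))
sims = (raw + raw.transpose(0, 2, 1)) / 2

scores, loadings, explained = similarity_pca(sims)
```

Under these assumptions, each column of `scores` is one orthogonal dimension of similarity, with one value per image pair, and `explained` gives the share of variance each dimension captures.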

This is really cool!

September 22, 2025 at 11:40 PM

Congrats Ziyao!!

August 12, 2025 at 9:57 PM
