more at https://soldaini.net

OF COURSE my code is better than yours

🚗 read the full tech report: arxiv.org/abs/2501.00656

💬 try OLMo 2 Instruct 13B on the @allen_ai playground: playground.allenai.org

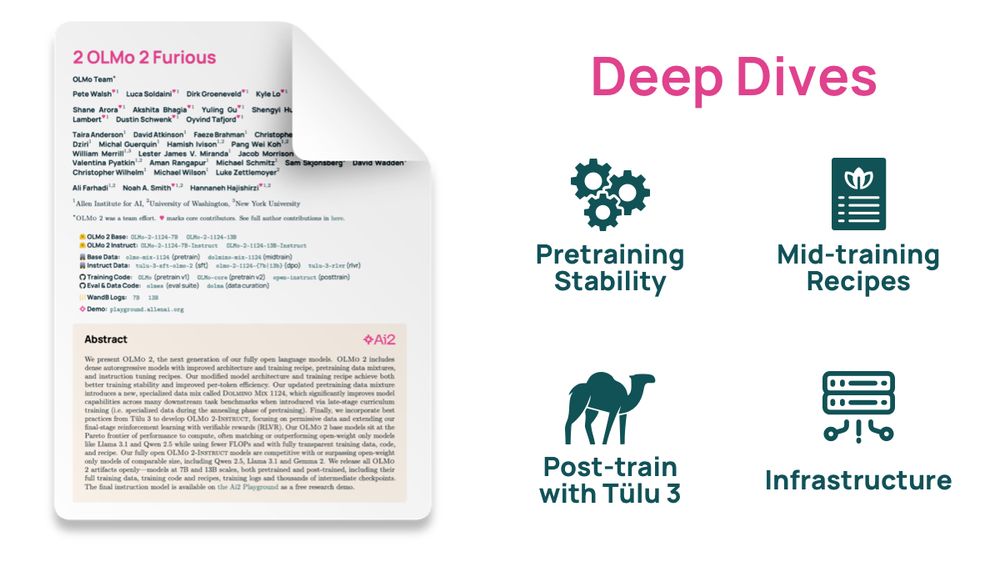

A lot of LM shops are shy when it comes to describing how their infrastructure works. Not us!

We describe our two clusters, Augusta and Jupiter, in great detail, and explain what matters in making your infra reliable.

OLMo 2 post-training is proof that our Tulu 3 recipe is easy to adapt to any model. Took the team less than 6 days to get a strong OLMo 2-Instruct checkpoint out!

LLM capabilities are unlocked at the end of pretraining by curating the right mixture to show models last. We explain how to curate the right data for this stage & measure its effects

How do you ensure that your pretrain run doesn't blow up?

We spent months perfecting our OLMo recipe. It takes many targeted mitigations and expensive experiments to get it right… well, now you don't have to!

We get in the weeds with this one, with 50+ pages on 4 crucial components of the LLM development pipeline:

Meta, Google: post Corporate Memphis, big-tech minimalism

I'm ready to give my money to the first brutalist AI endeavor 😍
