📄Paper: arxiv.org/abs/2503.09320

🌐 Website: sites.google.com/view/2handedafforder

Work done with Marin Heidinger, Vignesh Prasad & @georgiachal.bsky.social

See you in Hawaii at #ICCV2025! 🌴

4/5

No manual labeling is required. The narrations from egocentric datasets also provide free-form text supervision (e.g., "pour milk into bowl")!

3/5

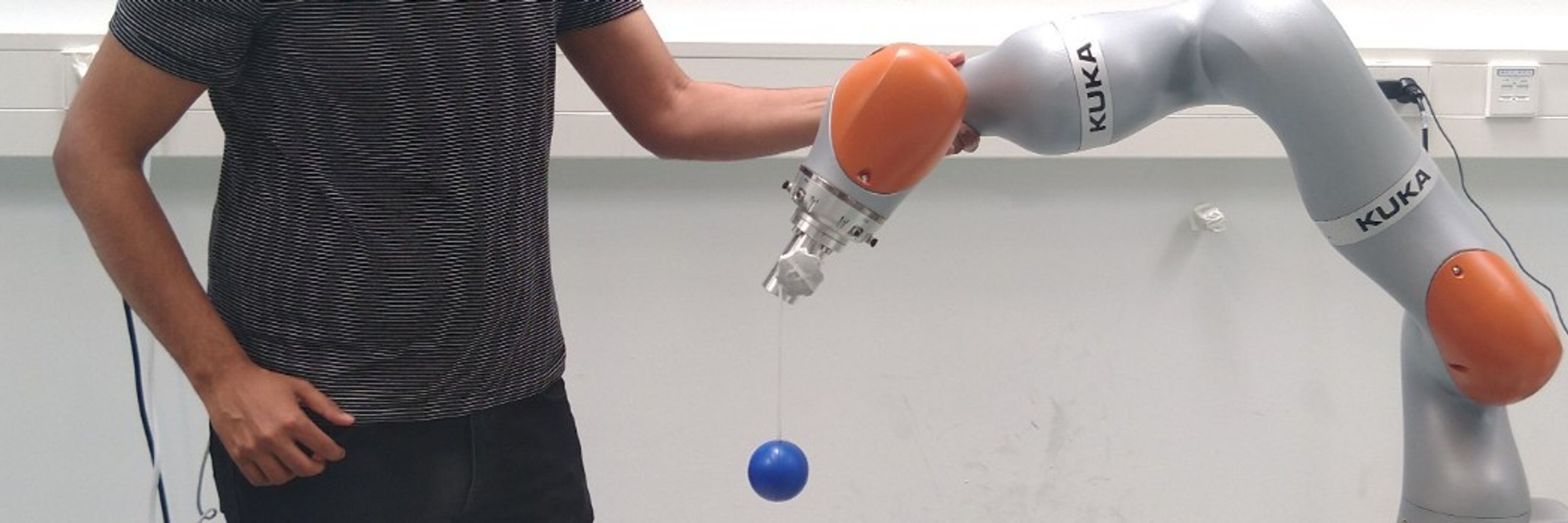

Most affordance detection methods just segment object parts & do not predict actionable regions for robots!

Our solution?

Use egocentric bimanual human videos to extract precise affordance regions considering object relationships, context, & hand coordination!

2/5

Happy to be organizing this with @georgiachal.bsky.social, Yu Xiang, @danfei.bsky.social and @galasso.bsky.social!

We’re inviting contributions in the form of:

📝 Full papers OR

📝 4-page extended abstracts

🗓️ Submission Deadline: April 30, 2025

🏆 Best Paper Award, sponsored by Meta!

🥽 Egocentric interfaces for robot learning

🧠 High-level action & scene understanding

🤝 Human-to-robot transfer

🧱 Foundation models from human activity datasets

🛠️ Egocentric world models for high-level planning & low-level manipulation