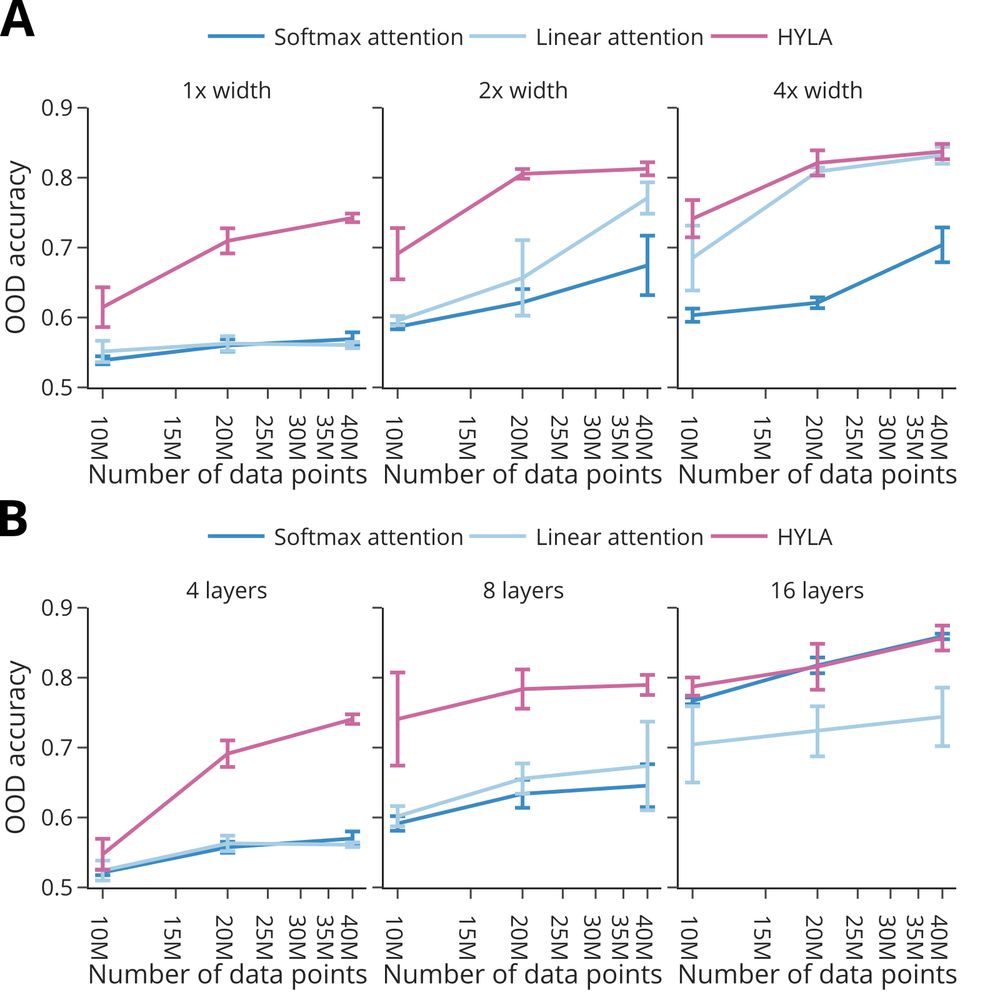

Strategically choosing the training data matters a lot.

It might be tempting to think that we need to equip neural network architectures with stronger symbolic priors to capture this compositionality, but do we?

Our #NeurIPS2025 Spotlight paper suggests that it can, given the right training distribution.

🧵 A short thread:

Come check out our Oral at #ICLR tomorrow (Apr 26th: poster at 10:00, Oral session 6C in the afternoon).

openreview.net/forum?id=V4K...

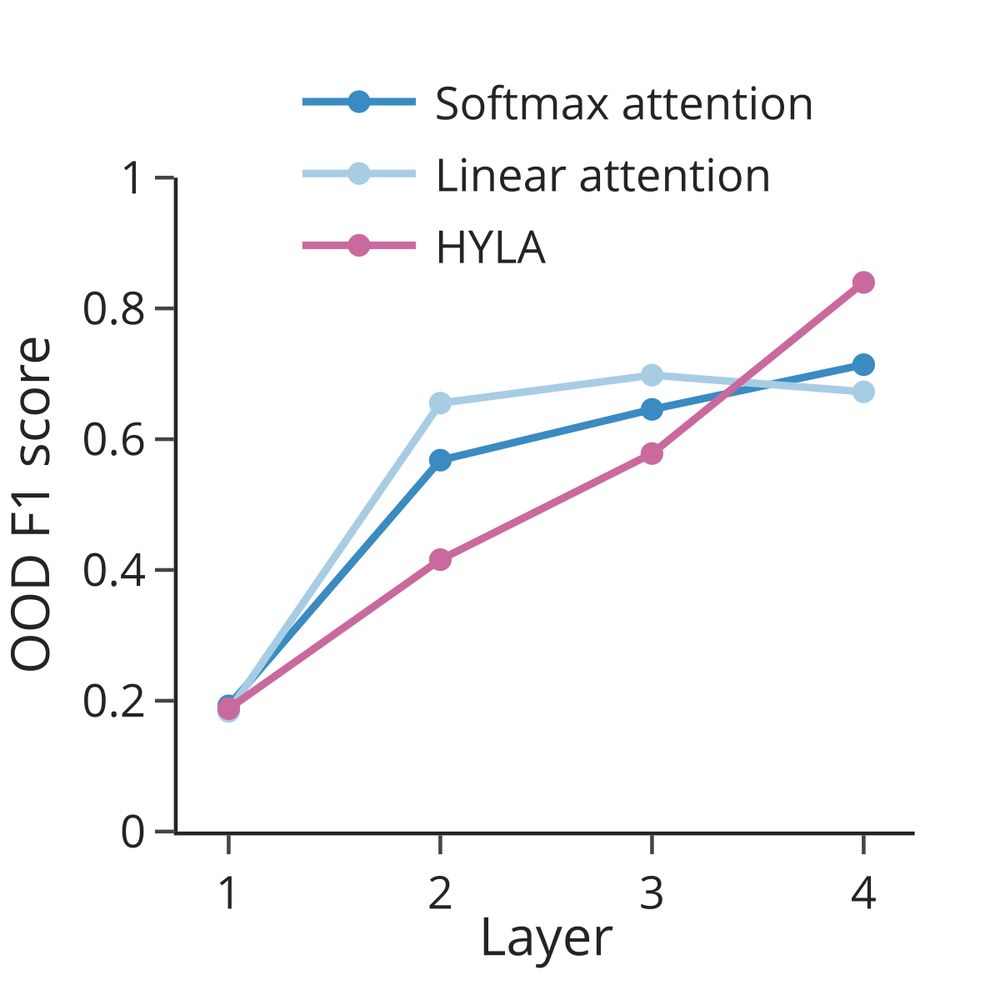

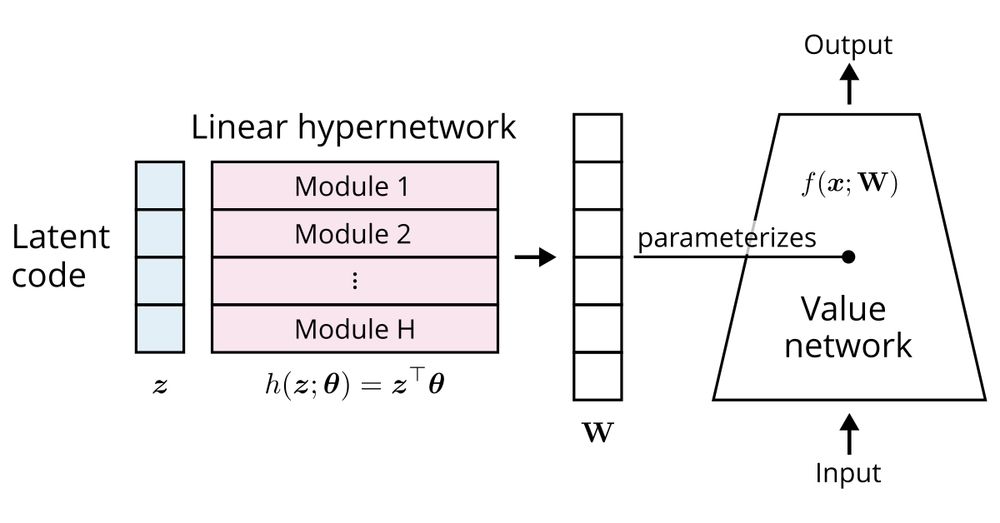

Could we improve compositionality even further?

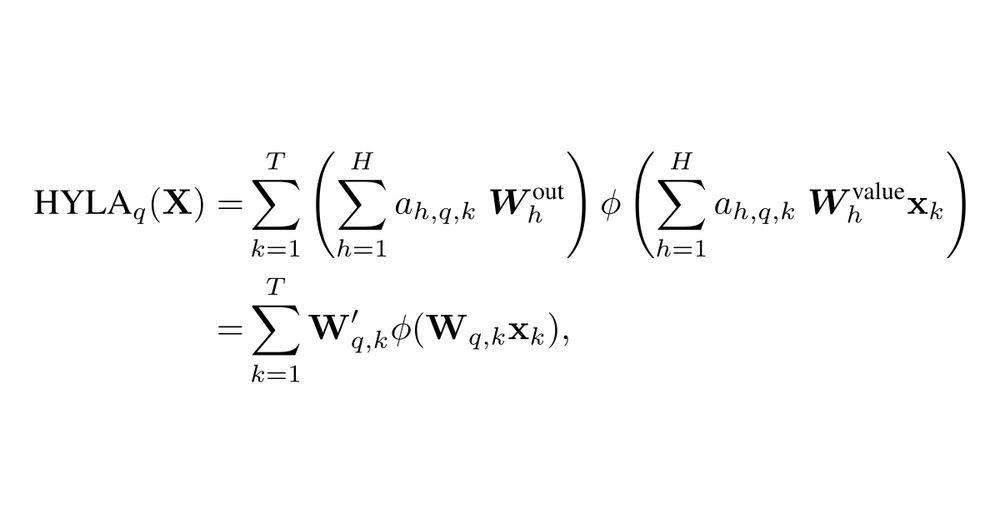

We can, for instance, make the value network nonlinear without introducing additional parameters.
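
A minimal, dependency-free sketch of one way this could look. It is our own illustration and an assumption, not necessarily the paper's exact formulation: the latent code (one attention score per head) mixes the existing per-head value matrices W^V_h into a first layer and the per-head output matrices W^O_h into a second layer, with a ReLU in between. Since only W^V_h and W^O_h are reused, no parameters are added. Function names, sizes and the example code vector are hypothetical.

```python
import random

def matvec(M, v):
    """Matrix-vector product on lists of lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def relu(v):
    return [max(0.0, t) for t in v]

def weighted_sum(mats, code):
    """Hypernetwork step: mix per-head matrices with the latent code (one weight per head)."""
    rows, cols = len(mats[0]), len(mats[0][0])
    return [[sum(c * M[r][k] for c, M in zip(code, mats)) for k in range(cols)]
            for r in range(rows)]

def nonlinear_value_network(x, code, WV, WO):
    """Hypothetical two-layer value network for a single query-key pair.
    Layer 1: sum_h a_h W^V_h (d -> d_head), followed by a ReLU.
    Layer 2: sum_h a_h W^O_h (d_head -> d).
    Only the existing per-head W^V_h and W^O_h are used."""
    hidden = relu(matvec(weighted_sum(WV, code), x))
    return matvec(weighted_sum(WO, code), hidden)

rng = random.Random(0)
d, d_head, n_heads = 4, 2, 3
WV = [[[rng.gauss(0, 1) for _ in range(d)] for _ in range(d_head)] for _ in range(n_heads)]
WO = [[[rng.gauss(0, 1) for _ in range(d_head)] for _ in range(d)] for _ in range(n_heads)]
code = [0.5, 0.3, 0.2]  # e.g. the three heads' attention scores for one query-key pair
x = [rng.gauss(0, 1) for _ in range(d)]

# Same parameter tensors as the linear version: n_heads * (d_head*d + d*d_head).
n_params = sum(len(M) * len(M[0]) for M in WV + WO)

# Any linear map satisfies f(x) + f(-x) = 0; the ReLU breaks this.
y_pos = nonlinear_value_network(x, code, WV, WO)
y_neg = nonlinear_value_network([-t for t in x], code, WV, WO)
print(n_params, [round(a + b, 3) for a, b in zip(y_pos, y_neg)])
```

Here y_pos + y_neg equals (sum_h a_h W^O_h) applied to |z|, where z is the pre-activation, which is generally nonzero. So the generated value network is no longer linear in its input, even though the parameter count matches the linear case.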

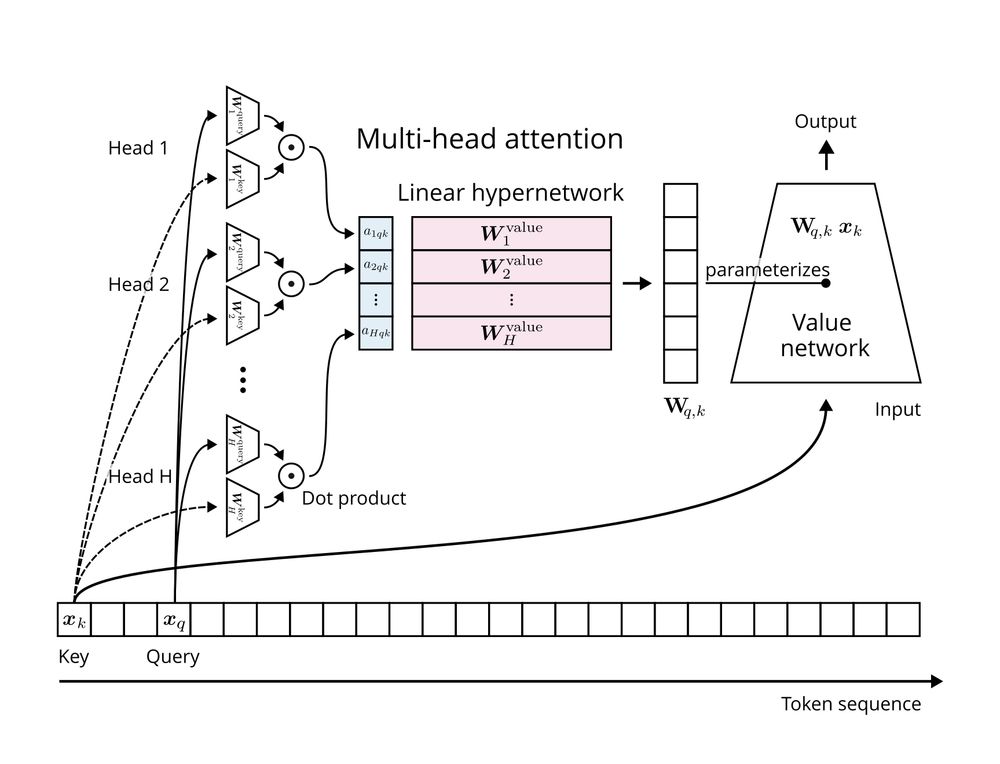

Importantly, these operations are reusable: the same hypernetwork is used across all key-query pairs.

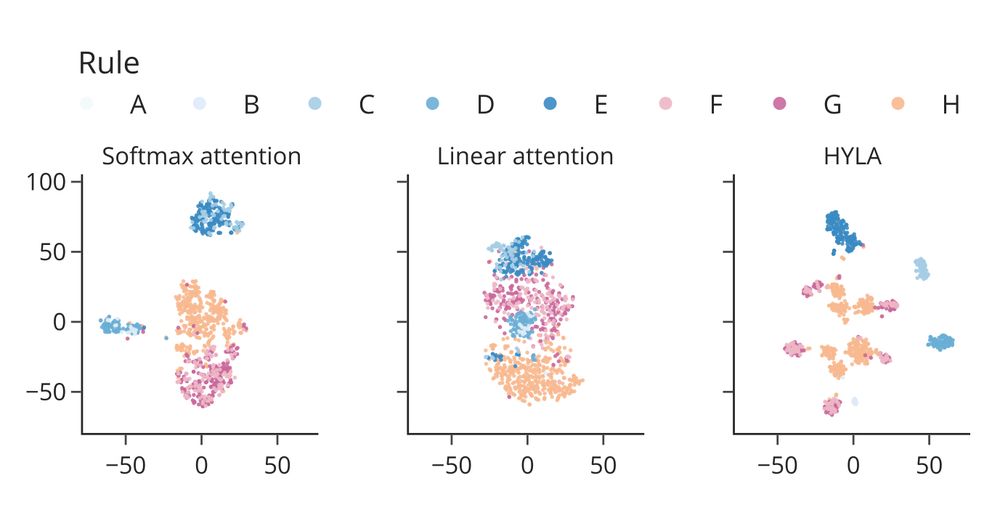

Could their reuse allow transformers to compositionally generalize?

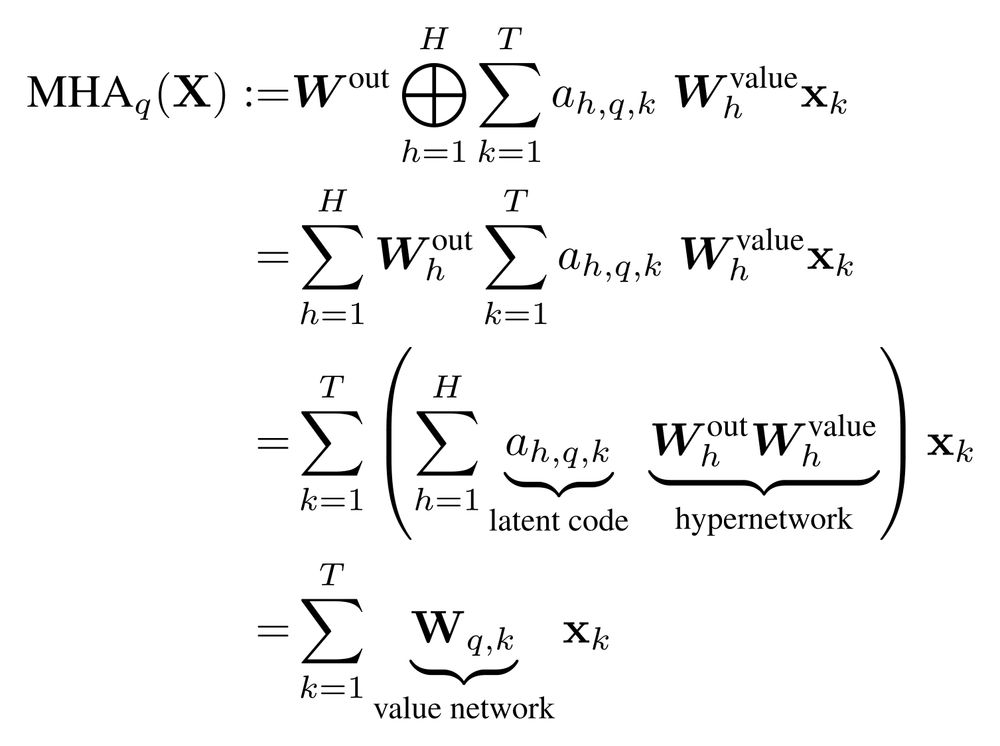

These hypernetworks are comparatively simple: both the hypernetwork and its value network are linear.
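
To spell out what "both linear" means, here is a small sketch under our own assumptions. The hypernetwork mixes fixed per-head matrices M_h (standing in for the per-head products W^O_h W^V_h) with a latent code. The result is linear in the code, and the generated value network is linear in its input. Names, sizes and values are illustrative only.

```python
import random

def hypernetwork(code, head_matrices):
    """Linear hypernetwork: W(a) = sum_h a_h M_h, with one code entry per head."""
    rows, cols = len(head_matrices[0]), len(head_matrices[0][0])
    return [[sum(a * M[r][c] for a, M in zip(code, head_matrices)) for c in range(cols)]
            for r in range(rows)]

def value_network(W, x):
    """Linear value network: one matrix-vector product with the generated weights."""
    return [sum(w * t for w, t in zip(row, x)) for row in W]

rng = random.Random(1)
d, n_heads = 3, 2
M = [[[rng.gauss(0, 1) for _ in range(d)] for _ in range(d)] for _ in range(n_heads)]
a, b = [0.7, 0.3], [0.1, 0.9]
x, y = [rng.gauss(0, 1) for _ in range(d)], [rng.gauss(0, 1) for _ in range(d)]

# Linearity of the hypernetwork in the code: W(a + b) = W(a) + W(b).
lhs = hypernetwork([p + q for p, q in zip(a, b)], M)
rhs = [[u + v for u, v in zip(ru, rv)]
       for ru, rv in zip(hypernetwork(a, M), hypernetwork(b, M))]
hyper_err = max(abs(u - v) for ru, rv in zip(lhs, rhs) for u, v in zip(ru, rv))

# Linearity of the generated value network in its input: W(a)(x + y) = W(a)x + W(a)y.
W = hypernetwork(a, M)
out_of_sum = value_network(W, [p + q for p, q in zip(x, y)])
sum_of_outs = [u + v for u, v in zip(value_network(W, x), value_network(W, y))]
value_err = max(abs(u - v) for u, v in zip(out_of_sum, sum_of_outs))
print(hyper_err, value_err)
```

Both errors should be at the level of floating-point rounding.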

So why could this matter?

It turns out that attention with multiple heads already has them built-in!
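
A dependency-free Python sketch of this claim (our own illustration, not code from the paper). It computes multi-head attention in the usual way and then regroups the same sum. For each query-key pair (i, j), the attention scores of all heads form a latent code a_ij, and a linear hypernetwork maps that code to the weights of a linear value network, W(a_ij) = sum_h a_ij[h] W^O_h W^V_h. Biases, masking and normalization layers are omitted, and the sizes and random weights are arbitrary assumptions.

```python
import math
import random

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def matmul(A, B):
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def attention_scores(X, WQ, WK, d_head):
    """One head's attention matrix: A[i][j] = softmax_j(q_i . k_j / sqrt(d_head))."""
    Q = [matvec(WQ, x) for x in X]
    K = [matvec(WK, x) for x in X]
    return [softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d_head) for k in K])
            for q in Q]

def multihead_attention(X, heads, d_head):
    """Standard form: attend per head, project each head's output with W^O_h, sum over heads."""
    out = [[0.0] * len(X[0]) for _ in X]
    for WQ, WK, WV, WO in heads:
        A = attention_scores(X, WQ, WK, d_head)
        V = [matvec(WV, x) for x in X]
        for i in range(len(X)):
            o = [sum(A[i][j] * V[j][c] for j in range(len(X))) for c in range(d_head)]
            out[i] = [a + b for a, b in zip(out[i], matvec(WO, o))]
    return out

def hypernetwork_view(X, heads, d_head):
    """Same computation regrouped: the per-pair latent code a_ij configures a linear
    value network W(a_ij) = sum_h a_ij[h] W^O_h W^V_h, applied to each key token x_j."""
    A_all = [attention_scores(X, WQ, WK, d_head) for WQ, WK, _, _ in heads]
    WOV = [matmul(WO, WV) for _, _, WV, WO in heads]  # one d x d matrix per head
    d = len(X[0])
    out = []
    for i in range(len(X)):
        y = [0.0] * d
        for j, x in enumerate(X):
            code = [A[i][j] for A in A_all]  # latent code: one score per head
            W = [[sum(code[h] * WOV[h][r][c] for h in range(len(heads))) for c in range(d)]
                 for r in range(d)]
            y = [a + b for a, b in zip(y, matvec(W, x))]
        out.append(y)
    return out

rng = random.Random(0)
d, d_head, n_heads, T = 4, 2, 3, 5
heads = [(rand_matrix(d_head, d, rng), rand_matrix(d_head, d, rng),
          rand_matrix(d_head, d, rng), rand_matrix(d, d_head, rng)) for _ in range(n_heads)]
X = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(T)]

standard = multihead_attention(X, heads, d_head)
regrouped = hypernetwork_view(X, heads, d_head)
max_diff = max(abs(a - b) for ra, rb in zip(standard, regrouped) for a, b in zip(ra, rb))
print(f"max difference: {max_diff:.2e}")
```

Both routes should agree up to floating-point rounding. The regrouping only swaps the order of the sums over heads and keys, so the same per-head matrices serve as one shared hypernetwork for every query-key pair.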

And why does attention work so much better with multiple heads?

There might be a common answer to both of these questions.