Sepideh Mamooler@ACL🇦🇹

@smamooler.bsky.social

PhD Candidate at @icepfl.bsky.social | Ex Research Intern@Google DeepMind

👩🏻💻 Working on multi-modal AI reasoning models in scientific domains

https://smamooler.github.io/

Couldn't attend @naaclmeeting.bsky.social in person as I didn't get a visa in time 🤷♀️ My colleague @mismayil.bsky.social will present PICLe on my behalf today, May 1st, at 3:15 pm in RUIDOSO. Feel free to reach out if you want to chat more!

May 1, 2025 at 8:39 AM

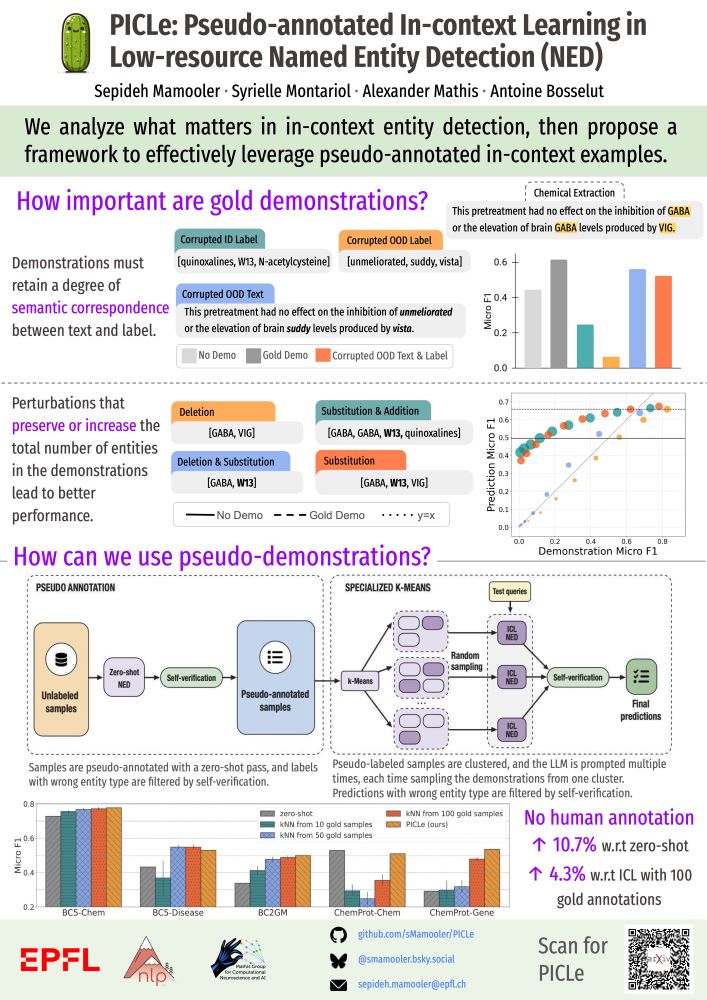

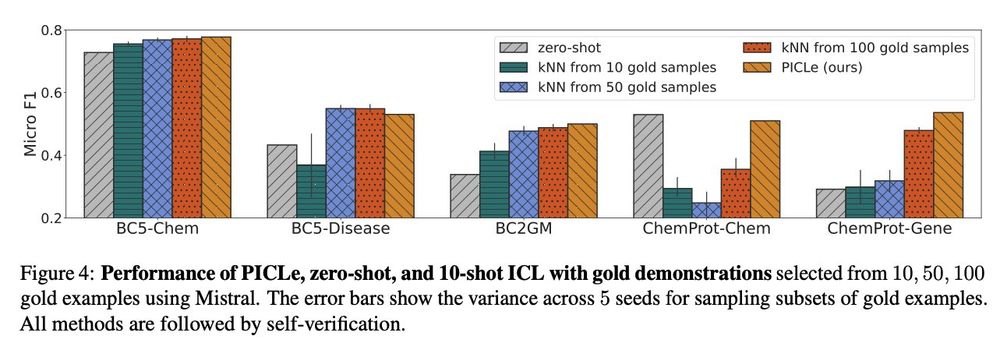

📊 We evaluate PICLe on five biomedical named entity detection (NED) datasets and find:

✨ With zero human annotations, PICLe outperforms ICL in low-resource settings, where limited gold examples can be used as in-context demonstrations!

December 17, 2024 at 2:51 PM

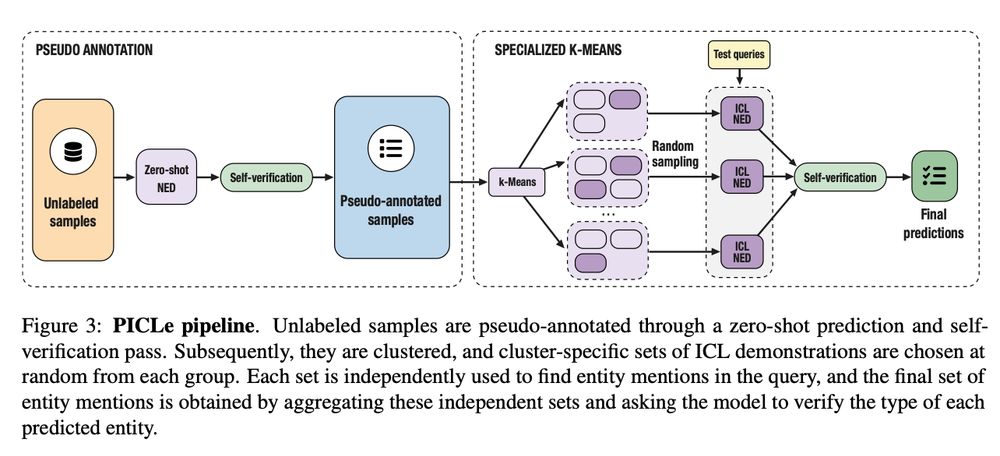

⚙️ How does PICLe work?

1️⃣ LLMs annotate demonstrations in a zero-shot first pass.

2️⃣ Synthetic demos are clustered, and in-context sets are sampled.

3️⃣ Entity mentions are predicted using each set independently.

4️⃣ Self-verification selects the final predictions.

December 17, 2024 at 2:51 PM
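
For readers who prefer code, here is a minimal sketch of how the four steps could fit together. It is not the PICLe implementation: `picle_sketch`, `predict`, `cluster_of`, `verify`, and `demos_per_set` are hypothetical names, the LLM calls are replaced by plain callables, and sampling one in-context set per cluster and verifying the union of candidates are assumptions made for illustration.

```python
import random
from collections import defaultdict
from typing import Callable, List, Sequence, Tuple

Demo = Tuple[str, List[str]]  # (sentence, entity mentions found in it)


def picle_sketch(
    unlabeled: Sequence[str],
    test_sentence: str,
    predict: Callable[[str, Sequence[Demo]], List[str]],  # stand-in for an LLM call
    cluster_of: Callable[[str], int],                     # stand-in for a clustering step
    verify: Callable[[str, str], bool],                   # stand-in for LLM self-verification
    demos_per_set: int = 2,
    seed: int = 0,
) -> List[str]:
    rng = random.Random(seed)

    # Step 1: zero-shot first pass, no demonstrations in the prompt.
    pseudo_demos = [(s, predict(s, [])) for s in unlabeled]

    # Step 2: group the pseudo-annotated demos, then sample one
    # in-context set per group (assumption: one set per cluster).
    clusters = defaultdict(list)
    for demo in pseudo_demos:
        clusters[cluster_of(demo[0])].append(demo)
    demo_sets = [
        rng.sample(members, min(demos_per_set, len(members)))
        for _, members in sorted(clusters.items())
    ]

    # Step 3: predict mentions with each in-context set independently.
    candidates: List[str] = []
    for demos in demo_sets:
        for mention in predict(test_sentence, demos):
            if mention not in candidates:
                candidates.append(mention)

    # Step 4: keep only the candidates that pass self-verification.
    return [m for m in candidates if verify(test_sentence, m)]


# Toy stand-ins so the sketch runs without any model.
def toy_predict(sentence: str, demos: Sequence[Demo]) -> List[str]:
    vocab = {"aspirin"} | {m for _, mentions in demos for m in mentions}
    words = [w.strip(".,").lower() for w in sentence.split()]
    return [w for w in words if w in vocab]


def toy_cluster(sentence: str) -> int:
    return len(sentence.split()) % 2


def toy_verify(sentence: str, mention: str) -> bool:
    return mention in sentence.lower() and len(mention) > 3
```

In a real setup, `predict` and `verify` would wrap prompts to an LLM and `cluster_of` would come from clustering sentence embeddings; the toy versions above only exist to make the control flow visible.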

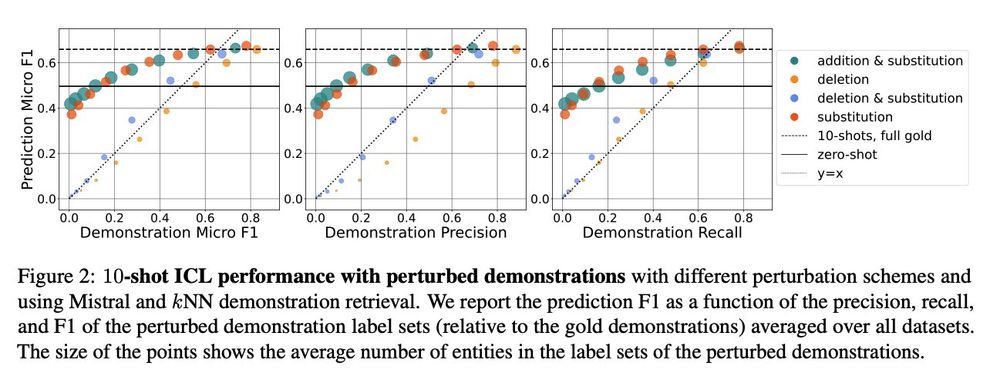

📊 Key finding: A semantic mapping between demonstration context and label is essential for in-context task transfer. BUT even weak semantic mappings can provide enough signal for effective adaptation in NED!

December 17, 2024 at 2:51 PM
