"how to track objects with SORT tracker" notebook: colab.research.google.com/github/robof...

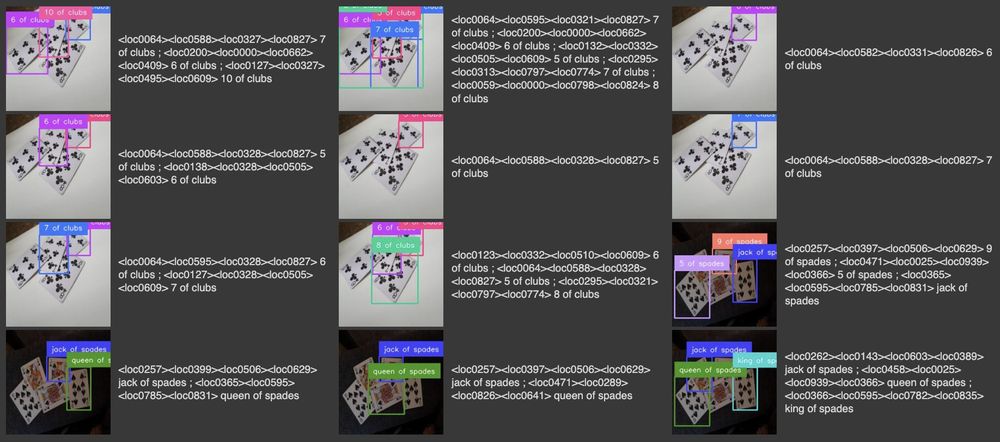

- used google/paligemma2-3b-pt-448 checkpoint

- trained on A100 with 40GB VRAM

- 1h of training

- 0.62 mAP on the validation set

colab with complete fine-tuning code: colab.research.google.com/github/robof...
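
Computing a metric like the 0.62 mAP above requires turning the model's text output back into boxes. Below is a minimal sketch that assumes PaliGemma's detection output format: each object is four `<locNNNN>` tokens (y_min, x_min, y_max, x_max, each binned into 1024 steps) followed by a class name, and objects are separated by `;`. The function name `parse_detections` and the divide-by-1024 scaling are assumptions for illustration. This is not code from the notebook.

```python
import re

# Assumed format: four location tokens, then a class name; objects separated by ";".
DETECTION = re.compile(r"<loc(\d{4})><loc(\d{4})><loc(\d{4})><loc(\d{4})>\s*([^;<]+)")


def parse_detections(text: str, width: int, height: int) -> list[tuple[float, float, float, float, str]]:
    """Convert PaliGemma-style detection text into (x_min, y_min, x_max, y_max, label) pixel boxes."""
    boxes = []
    for match in DETECTION.finditer(text):
        # Tokens are assumed to come in (y_min, x_min, y_max, x_max) order, binned into 1024 steps.
        y_min, x_min, y_max, x_max = (int(v) / 1024 for v in match.groups()[:4])
        label = match.group(5).strip()
        boxes.append((x_min * width, y_min * height, x_max * width, y_max * height, label))
    return boxes
```

The resulting pixel boxes can then be matched against ground-truth annotations with any mAP implementation.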

- used google/paligemma2-3b-pt-336 checkpoint; I tried to get it working with 224, but 336 performed a lot better

- trained on A100 with 40GB VRAM

- trained with LoRA

colab with complete fine-tuning code: colab.research.google.com/github/robof...
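
LoRA keeps each pretrained weight matrix W frozen and learns a low-rank update, so the effective weight becomes W + (alpha / r) · B·A. This is one reason fine-tuning can fit on a single 40GB A100. The back-of-the-envelope sketch below shows how few parameters LoRA trains per adapted matrix. The 2048×2048 shape and rank 8 are hypothetical values chosen for illustration and are not the settings used in the notebook.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in weight matrix.

    W stays frozen; only B (d_out x rank) and A (rank x d_in) are trained,
    and the effective weight is W + (alpha / rank) * B @ A.
    """
    return rank * (d_in + d_out)


def full_trainable_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole matrix is fine-tuned."""
    return d_in * d_out


# Hypothetical layer shape and rank, for illustration only.
d_in, d_out, rank = 2048, 2048, 8
lora = lora_trainable_params(d_in, d_out, rank)
full = full_trainable_params(d_in, d_out)
print(f"LoRA: {lora:,} vs full: {full:,} ({lora / full:.2%})")
```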

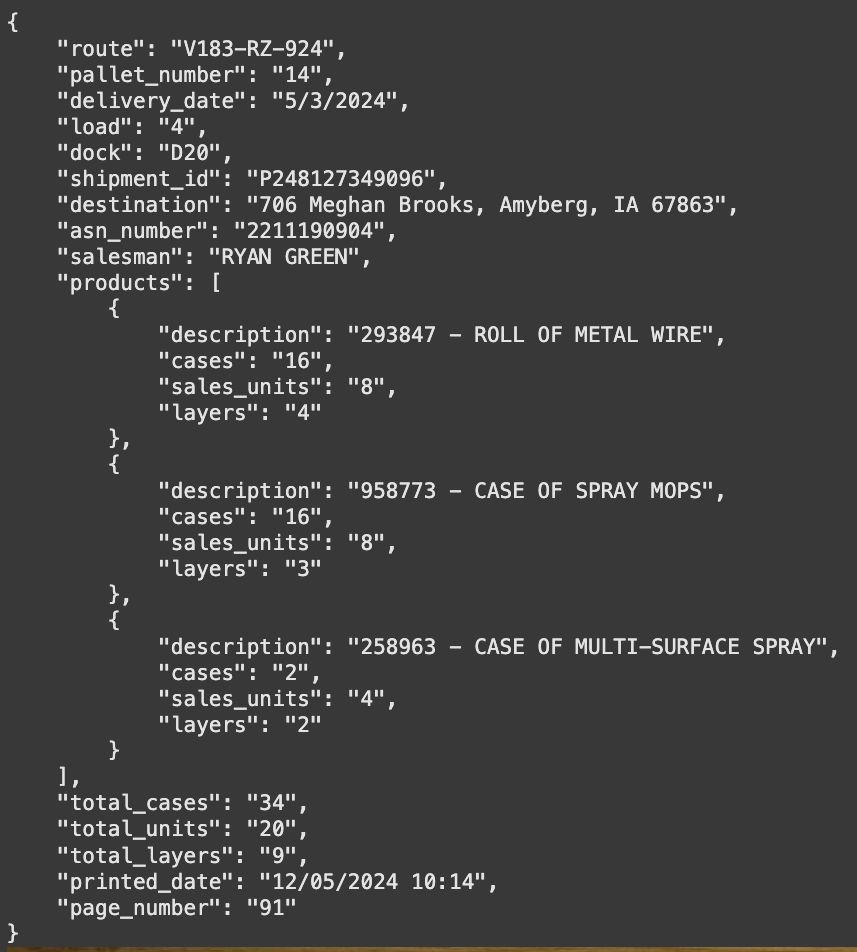

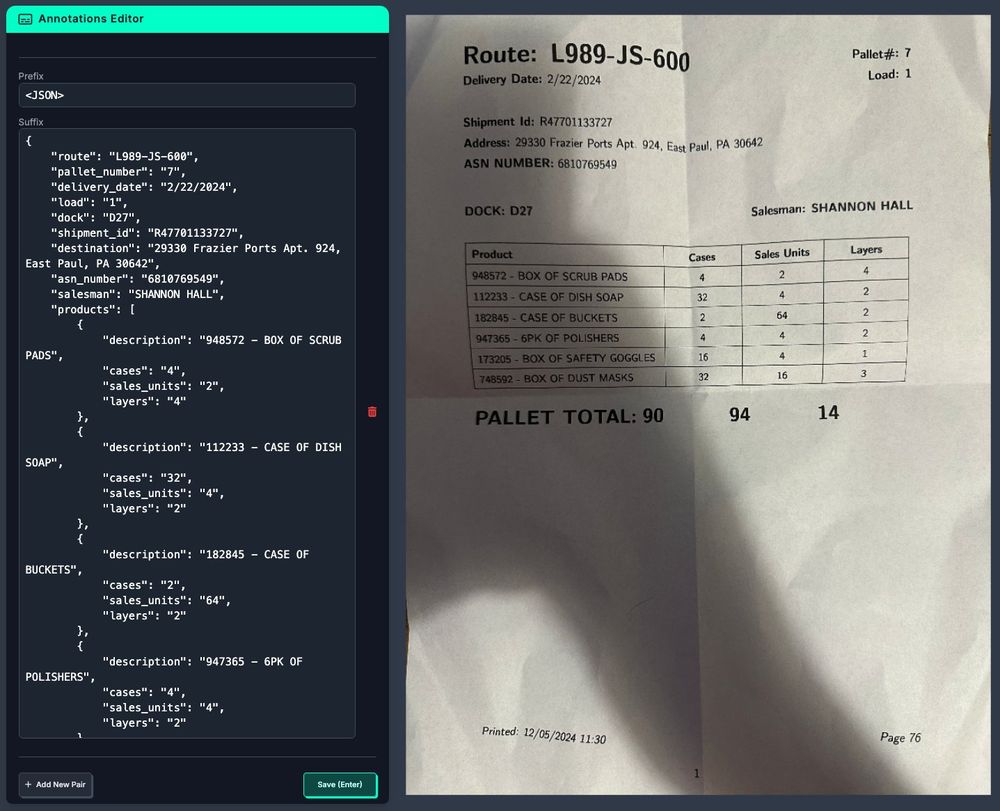

each subset contains images and an annotations.jsonl file; each line of that file is a valid JSON object with three keys: image, prefix, and suffix
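
Below is a minimal sketch of a loader that checks a subset's annotations.jsonl against the structure described above: one JSON object per line, each with `image`, `prefix`, and `suffix` keys. The helper names are hypothetical. The parser accepts any iterable of lines, so it can be tested without touching disk.

```python
import json
from typing import Iterable

REQUIRED_KEYS = {"image", "prefix", "suffix"}


def parse_annotation_lines(lines: Iterable[str]) -> list[dict]:
    """Parse JSONL lines, checking that each one is a JSON object with the three expected keys."""
    records = []
    for line_no, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue  # tolerate blank lines, such as a trailing newline
        record = json.loads(line)  # raises json.JSONDecodeError on invalid JSON
        if not isinstance(record, dict):
            raise ValueError(f"line {line_no}: expected a JSON object")
        missing = REQUIRED_KEYS.difference(record)
        if missing:
            raise ValueError(f"line {line_no}: missing keys {sorted(missing)}")
        records.append(record)
    return records


def load_annotations(path: str) -> list[dict]:
    """Read and validate one subset's annotations.jsonl file."""
    with open(path, encoding="utf-8") as f:
        return parse_annotation_lines(f)
```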

to pick the right variant, you need to take into account the vision-language task you are solving, the available hardware, the amount of data, and inference speed
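
One practical part of the hardware question is whether the weights fit on your GPU at all. The rough sketch below counts weights only, using nominal sizes of 3B, 10B, and 28B parameters. Fine-tuning adds gradients, optimizer state, and activations on top of this. The dtype table assumes how you load the model and is not a prescription.

```python
# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Memory for the model weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in [("3B", 3e9), ("10B", 10e9), ("28B", 28e9)]:
    print(name, {dtype: weight_memory_gb(params, dtype) for dtype in BYTES_PER_PARAM})
```

At bf16, the 3B weights take about 6 GB. The 28B weights take about 56 GB, which is already more than a 40GB A100 holds before any training overhead.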

we can see that PaliGemma2's object detection performance depends more on input resolution than on model size. 3B at 448 seems like the sweet spot.

compared to PG1, it performs much better; datasets with a large number of classes were hard to fine-tune with the previous version

- there are still much bigger versions of the model, both in terms of parameters and input resolution

- I only trained it for 1 hour

it looks like OCR-related benchmarks such as ST-VQA, TallyQA, and TextCaps... benefit more from increased resolution than from model size. that's why I went from 224 to 336.
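
Resolution costs compute because it changes how many image tokens the language model has to process. The small sketch below assumes a SigLIP vision encoder with 14-pixel patches, as in PaliGemma. Under that assumption, a square image becomes (resolution / 14)² tokens.

```python
def num_image_tokens(resolution: int, patch_size: int = 14) -> int:
    """Tokens a ViT emits for a square image split into non-overlapping square patches."""
    if resolution % patch_size:
        raise ValueError(f"{resolution} is not a multiple of the patch size {patch_size}")
    patches_per_side = resolution // patch_size
    return patches_per_side ** 2


for resolution in (224, 336, 448, 896):
    print(resolution, num_image_tokens(resolution))
```

Going from 224 to 336 raises the count from 256 to 576 tokens (2.25x), and 448 gives 1024. Each step up gives the model more visual detail, which matters for small text, at a higher compute cost.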

- never allow GitHub Actions to run for first-time contributors.

- always require review for new contributors.

- never run important actions automatically via bots.

- protect release actions with unique cases and selected actors.
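
A few of these rules can be partially checked in code. The crude sketch below assumes workflows live in `.github/workflows/` and flags two patterns often tied to attacks from untrusted contributors. The first is the `pull_request_target` trigger, which runs in the base repository's context with access to its secrets. The second is `${{ ... }}` interpolation of PR-controlled fields such as the branch name. The function names and the pattern list are hypothetical and far from exhaustive. The checks complement the review rules above; they do not replace them.

```python
import re
from pathlib import Path

# Hypothetical, deliberately incomplete heuristics: a match means "review this", not "exploitable".
RISKY_PATTERNS = {
    "pull_request_target trigger (runs with the base repo's secrets)":
        re.compile(r"\bpull_request_target\b"),
    "PR-controlled text interpolated into the workflow":
        re.compile(r"\$\{\{\s*github\.(?:head_ref|event\.pull_request\.(?:title|body|head\.ref))\b"),
}


def audit_workflow_text(text: str) -> list[str]:
    """Return the label of every risky pattern found in one workflow file's text."""
    return [label for label, pattern in RISKY_PATTERNS.items() if pattern.search(text)]


def audit_workflows(repo_root: str) -> dict[str, list[str]]:
    """Scan *.yml / *.yaml files in .github/workflows under repo_root and report findings per file."""
    workflow_dir = Path(repo_root) / ".github" / "workflows"
    report = {}
    for path in sorted(workflow_dir.glob("*")):
        if path.suffix not in {".yml", ".yaml"}:
            continue
        findings = audit_workflow_text(path.read_text(encoding="utf-8"))
        if findings:
            report[path.name] = findings
    return report
```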

malicious code was injected into the PyPI deployment workflow (GitHub Actions).

the source code itself wasn't infected. however, the resulting tar/wheel files were corrupted during the build process.
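
Since the source was clean but the built files were not, one useful check is to compare the Python files shipped in a release artifact with the same files in the repository. Below is a minimal sketch that assumes a pure-Python wheel whose internal layout mirrors the source tree (for example, `pkg/core.py` in both). The helper names are hypothetical. Generated files, such as a version module, will legitimately differ, and the tar (sdist) archive would need `tarfile` instead of `zipfile`.

```python
import zipfile
from pathlib import Path


def read_wheel_sources(wheel_path: str) -> dict[str, bytes]:
    """Map each .py file inside a wheel (a zip archive) to its raw bytes."""
    with zipfile.ZipFile(wheel_path) as wheel:
        return {name: wheel.read(name) for name in wheel.namelist() if name.endswith(".py")}


def read_source_tree(root: str) -> dict[str, bytes]:
    """Map each .py file under root to its raw bytes, keyed by POSIX-style relative path."""
    base = Path(root)
    return {p.relative_to(base).as_posix(): p.read_bytes() for p in base.rglob("*.py")}


def diff_artifact_against_source(artifact_files: dict[str, bytes], source_files: dict[str, bytes]) -> list[str]:
    """Return artifact paths that are missing from the source or whose bytes differ from it."""
    return sorted(name for name, data in artifact_files.items() if source_files.get(name) != data)
```

For example, `diff_artifact_against_source(read_wheel_sources("dist/<your-wheel>.whl"), read_source_tree("src"))` lists every shipped file that does not match the repository. Both paths here are placeholders.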
