Looking for a Ph.D. position for Fall 2026

Comp Psycholing & CogSci, human-like AI, rock🎸 @growai.bsky.social

Prev:

Summer Research Visit @MIT BCS (2025), Harvard Psych (2024), Undergrad @SJTU (2022-24)

Opinions are my own.

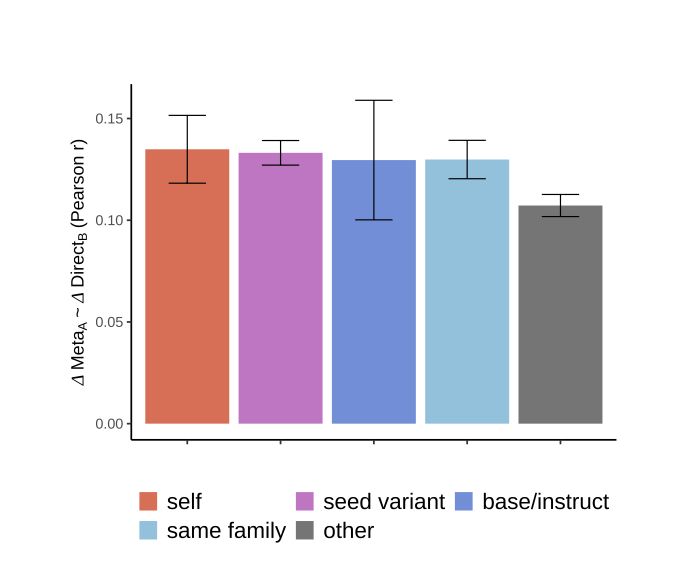

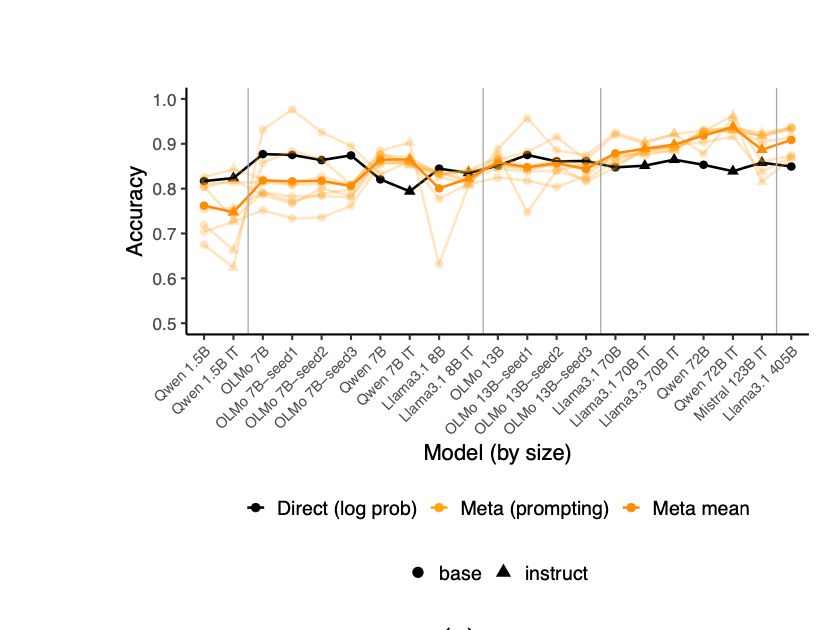

Across models and domains, we did not find evidence that LLMs have privileged access to their own predictions. 🧵(1/8)