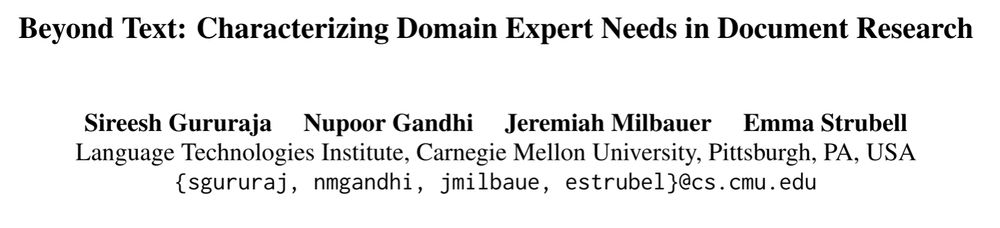

Sireesh Gururaja

@siree.sh

PhD student @ltiatcmu.bsky.social. Working on NLP that centers worker agency. Otherwise: coffee, fly fishing, and keeping peach pits around, for...some reason

https://siree.sh

Coming soon (6pm!) to the #ACL poster session: how do experts work with collections of documents, and do LLMs do those things?

tl;dr: only sometimes! While we have good tools for things like information extraction, the way that experts read documents goes deeper. Come to our poster to learn more!

July 28, 2025 at 3:26 PM

Yeah, I think I read this (and got burned) the same way :/

D&B also had additional spots that implied our original reading, like the dates page:

May 14, 2025 at 11:41 PM

Hey, if it's good enough for the guy that founded the town...

May 2, 2025 at 3:06 PM

Research going at the same pace, but!

December 20, 2024 at 5:29 PM

When I started on the ARL project that funds my PhD, the thing we were supposed to build was a "MaterialsGPT".

What is a MaterialsGPT? Where does that idea come from? I got to spend a lot of time thinking about that second question with @davidthewid.bsky.social and Lucy Suchman (!) working on this:

December 17, 2024 at 2:33 PM

Fully dislocated my shoulder going down some icy steps, so there will be no winter fishing for me this year :/ now gazing longingly at pictures of the last time I was out

December 8, 2024 at 4:05 PM

These years have also raised existential concerns about the incentives that drive the community, peer review, research under limited compute budgets, and even the place of a *CL community.

October 12, 2023 at 2:06 PM

What about LLMs? The last few years have intensified these trends: the community has grown immensely. As models grow better and NLP becomes more public-facing, failures in benchmarking have become evident. Centralization on individual models has grown.

October 12, 2023 at 2:05 PM

Neural NLP increased the sharing of toolkits and library code across labs and even across subfields, with libraries like PyTorch and TensorFlow. Pretraining extended this to the sharing of models, too, with Hugging Face being the biggest example.

October 12, 2023 at 2:05 PM

The rise of statistical NLP in the early 2000s was another such cycle. But major methodological shifts come with major cultural shifts as well. Statistical NLP introduced a culture laser-focused on benchmarks and saw the end of a small, “high trust” research community.

October 12, 2023 at 2:04 PM

Participants describe cycles of research: a breakthrough, a flurry of work exploiting the new method, then a slower wave of work exploring extensions or limitations. This pattern is not new: for instance, we heard about this with SVMs, RNNs, and BERT!

October 12, 2023 at 2:03 PM

These years have also raised existential concerns about the incentives that drive the community, peer review, research under limited compute budgets, and even the place of a *CL community.

October 12, 2023 at 2:02 PM

What about LLMs? The last few years have intensified these trends: the community has grown immensely. As models grow better and NLP becomes more public-facing, failures in benchmarking become evident. Centralization on individual models has grown.

October 12, 2023 at 2:02 PM

We conducted long-form interviews with established NLP researchers, which reveal larger trends and forces that have been shaping the NLP research community since the 1980s.

October 12, 2023 at 1:59 PM

We all know that “recently large language models have”, “large language models are”, and “large language models can.” But *why* LLMs? How did we get here? (where is “here”?) What forces are shaping NLP, and how recent are they, actually?

To appear at EMNLP 2023: arxiv.org/abs/2310.07715

October 12, 2023 at 1:59 PM
