@simonroschmann.bsky.social

PhD Student @eml-munich.bsky.social @tum.de @www.helmholtz-munich.de.

Passionate about ML research.

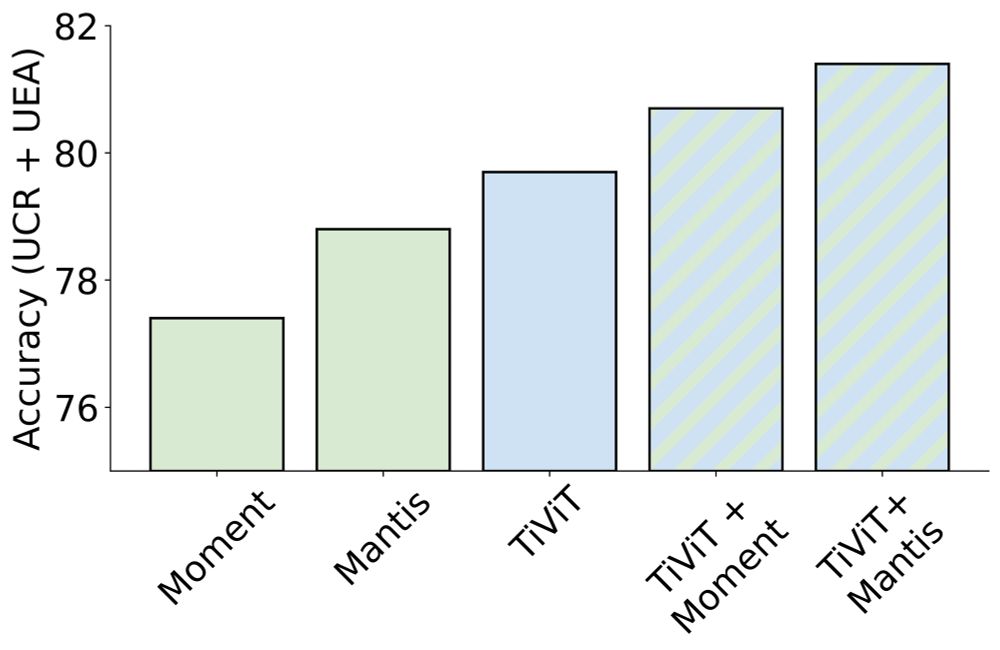

TiViT is on par with TSFMs (Mantis, Moment) on the UEA benchmark and significantly outperforms them on the UCR benchmark. The representations of TiViT and TSFMs are complementary; their combination yields SOTA classification results among foundation models.

July 3, 2025 at 7:59 AM
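Below is a minimal sketch of how two sets of frozen embeddings might be combined for a linear probe. It assumes simple concatenation, and the fusion used in the preprint may differ. The features are synthetic toy data, not TiViT, Mantis, or Moment outputs. A ridge classifier on one-hot targets stands in for whatever linear classifier the authors train. `fuse`, `fit_ridge_classifier`, `make_toy_data`, and `evaluate` are hypothetical names. Each toy "model" sees only one of two latent signals, so either block alone is limited while the concatenation recovers the label.

```python
import numpy as np


def fuse(blocks):
    """Hypothetical fusion: concatenate per-model embeddings along the feature axis."""
    return np.concatenate(blocks, axis=1)


def _design(x, mean, std):
    # Standardize with training statistics and append a bias column.
    return np.hstack([(x - mean) / std, np.ones((len(x), 1))])


def fit_ridge_classifier(x, y, num_classes, lam=1e-2):
    """Linear classifier: closed-form ridge regression onto one-hot targets."""
    mean, std = x.mean(axis=0), x.std(axis=0) + 1e-8
    xb = _design(x, mean, std)
    targets = np.eye(num_classes)[y]
    # The bias column is regularized too, which is harmless here and keeps the code short.
    weights = np.linalg.solve(xb.T @ xb + lam * np.eye(xb.shape[1]), xb.T @ targets)
    return mean, std, weights


def predict(model, x):
    mean, std, weights = model
    return np.argmax(_design(x, mean, std) @ weights, axis=1)


def make_toy_data(n, rng):
    # Synthetic stand-ins, NOT real TiViT/TSFM embeddings: each "model" sees one
    # latent signal plus a noise feature, and the label depends on both signals.
    s1, s2 = rng.normal(size=n), rng.normal(size=n)
    y = (s1 + s2 > 0).astype(int)
    tivit = np.column_stack([s1, rng.normal(size=n)])
    tsfm = np.column_stack([s2, rng.normal(size=n)])
    return tivit, tsfm, y


def evaluate(train_blocks, y_train, test_blocks, y_test):
    model = fit_ridge_classifier(fuse(train_blocks), y_train, num_classes=2)
    return float(np.mean(predict(model, fuse(test_blocks)) == y_test))


rng = np.random.default_rng(0)
tivit_tr, tsfm_tr, y_tr = make_toy_data(2000, rng)
tivit_te, tsfm_te, y_te = make_toy_data(2000, rng)

acc_tivit = evaluate([tivit_tr], y_tr, [tivit_te], y_te)
acc_tsfm = evaluate([tsfm_tr], y_tr, [tsfm_te], y_te)
acc_both = evaluate([tivit_tr, tsfm_tr], y_tr, [tivit_te, tsfm_te], y_te)
print(f"TiViT-like only: {acc_tivit:.3f}  TSFM-like only: {acc_tsfm:.3f}  combined: {acc_both:.3f}")
```

With this construction each single block should land near 75% test accuracy, because the label depends on both signals. The concatenated features should come close to perfect. This only illustrates what "complementary" representations mean for a linear classifier.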

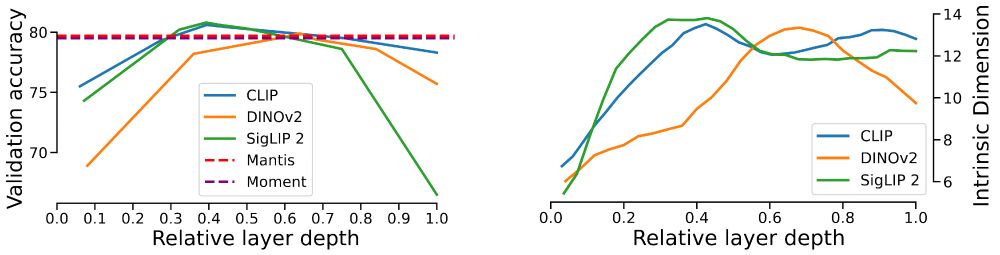

We further explore the structure of TiViT representations and find that intermediate layers with high intrinsic dimension are the most effective for time series classification.

July 3, 2025 at 7:59 AM
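To make "intrinsic dimension" concrete, here is a sketch of the TwoNN estimator (Facco et al., 2017) applied per layer. TwoNN is assumed for illustration, and the estimator used in the preprint may differ. `two_nn_intrinsic_dimension`, `most_intrinsic_layer`, and `embed` are hypothetical helpers. The "layers" are synthetic point clouds standing in for averaged ViT hidden states.

```python
import numpy as np


def two_nn_intrinsic_dimension(x):
    """TwoNN intrinsic-dimension estimate (maximum-likelihood form).

    For each point, mu = r2 / r1 is the ratio of its second- to first-nearest-neighbor
    distance. Under local uniformity log(mu) is exponential with rate d, so the
    maximum-likelihood estimate is d = N / sum(log mu).
    """
    x = np.asarray(x, dtype=float)
    sq = np.sum(x * x, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (x @ x.T)  # squared pairwise distances
    d2 = np.maximum(d2, 1e-12)  # guard against tiny negatives from round-off
    np.fill_diagonal(d2, np.inf)  # a point is not its own neighbor
    nearest = np.partition(d2, 1, axis=1)[:, :2]  # two smallest squared distances per row
    log_mu = 0.5 * np.log(nearest[:, 1] / nearest[:, 0])  # 0.5 undoes the squaring
    return len(x) / np.sum(log_mu)


def most_intrinsic_layer(layer_features):
    """Hypothetical helper: index of the layer whose features have the highest estimated ID."""
    return int(np.argmax([two_nn_intrinsic_dimension(f) for f in layer_features]))


def embed(latent, ambient_dim, rng):
    # Place latent points on a random linear subspace of a higher-dimensional space.
    q, _ = np.linalg.qr(rng.normal(size=(ambient_dim, latent.shape[1])))
    return latent @ q.T


rng = np.random.default_rng(0)
# Synthetic "layers": a 2-D and an 8-D manifold, both embedded in 64 dimensions.
layer_a = embed(rng.uniform(size=(500, 2)), 64, rng)
layer_b = embed(rng.uniform(size=(500, 8)), 64, rng)
print(two_nn_intrinsic_dimension(layer_a), two_nn_intrinsic_dimension(layer_b))
print(most_intrinsic_layer([layer_a, layer_b]))
```

Picking the layer with the highest estimated ID is a heuristic suggested by the finding above, not a documented selection rule. The estimate for `layer_a` should sit close to 2. Boundary effects and the small sample tend to pull the 8-D estimate somewhat below 8.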

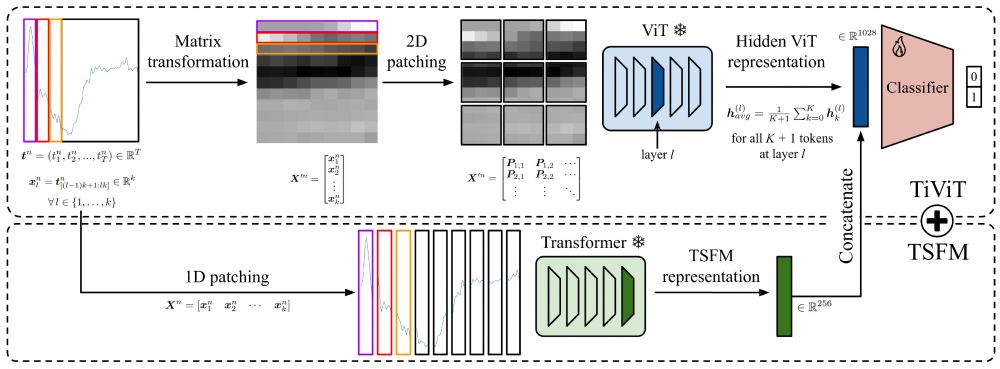

Our Time Vision Transformer (TiViT) converts a time series into a grayscale image, applies 2D patching, and uses a pretrained, frozen ViT for feature extraction. We average the token representations from a specific hidden layer and train only a linear classifier.

July 3, 2025 at 7:59 AM
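A minimal NumPy sketch of the first steps of this pipeline follows, under stated assumptions. The series is folded into rows of a hypothetical `segment_len`, padded with its last value, and min-max scaled to form the grayscale image. `patchify` mimics the non-overlapping 2D patching a ViT applies, and `pool_hidden_layer` averages the tokens of one layer. All three function names are hypothetical, and the frozen ViT itself is omitted. In practice the image would presumably be resized to the model's input resolution and repeated across three channels. Hidden states could then be obtained from a Hugging Face `transformers` vision model called with `output_hidden_states=True`.

```python
import numpy as np


def series_to_grayscale(series, segment_len):
    """Fold a 1-D series into a 2-D grayscale image (assumed construction).

    The series is padded with its last value to a multiple of `segment_len`,
    split into consecutive segments that become image rows, and min-max scaled to [0, 1].
    """
    series = np.asarray(series, dtype=float)
    pad = (-len(series)) % segment_len
    series = np.pad(series, (0, pad), mode="edge")
    image = series.reshape(-1, segment_len)
    span = image.max() - image.min()
    return (image - image.min()) / span if span > 0 else np.zeros_like(image)


def patchify(image, patch):
    """Split an (H, W) image into non-overlapping patch x patch tiles, zero-padding the edges."""
    h, w = image.shape
    image = np.pad(image, ((0, (-h) % patch), (0, (-w) % patch)))
    gh, gw = image.shape[0] // patch, image.shape[1] // patch
    tiles = image.reshape(gh, patch, gw, patch).transpose(0, 2, 1, 3)
    return tiles.reshape(gh * gw, patch * patch)  # one flattened row per patch, row-major order


def pool_hidden_layer(hidden_states, layer):
    """Average the token representations of one layer: (layers, tokens, dim) -> (dim,)."""
    return hidden_states[layer].mean(axis=0)


series = np.sin(np.linspace(0, 8 * np.pi, 100))
image = series_to_grayscale(series, segment_len=16)  # segment_len is a hypothetical choice
patches = patchify(image, patch=4)
print(image.shape, patches.shape)  # (7, 16) (8, 16)
```

The 100-step series is padded to 112 values, giving 7 rows of 16. The 4x4 patching then pads the height to 8, yielding 2 x 4 = 8 patches of 16 pixels each.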

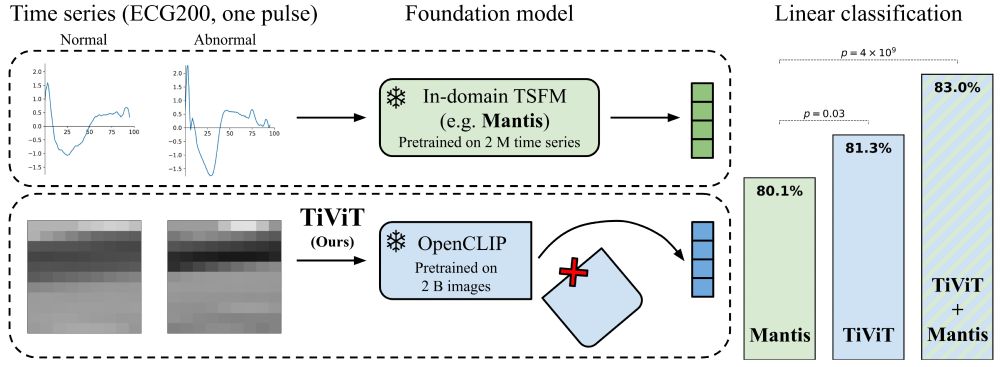

How can we circumvent data scarcity in the time series domain?

We propose leveraging pretrained ViTs (e.g., CLIP, DINOv2) for time series classification and show that they outperform time series foundation models (TSFMs).

📄 Preprint: arxiv.org/abs/2506.08641

💻 Code: github.com/ExplainableM...

July 3, 2025 at 7:59 AM
