📂 Codebase (part of ESPnet): github.com/espnet/espnet

📖 README & User Guide: github.com/espnet/espne...

🎥 Demo Video: www.youtube.com/watch?v=kI_D...

@ltiatcmu.bsky.social, Sony Japan, and Hugging Face (@shinjiw.bsky.social @pengyf.bsky.social @jiatongs.bsky.social @wanchichen.bsky.social @shikharb.bsky.social @emonosuke.bsky.social @cromz22.bsky.social @reach-vb.hf.co @wavlab.bsky.social).

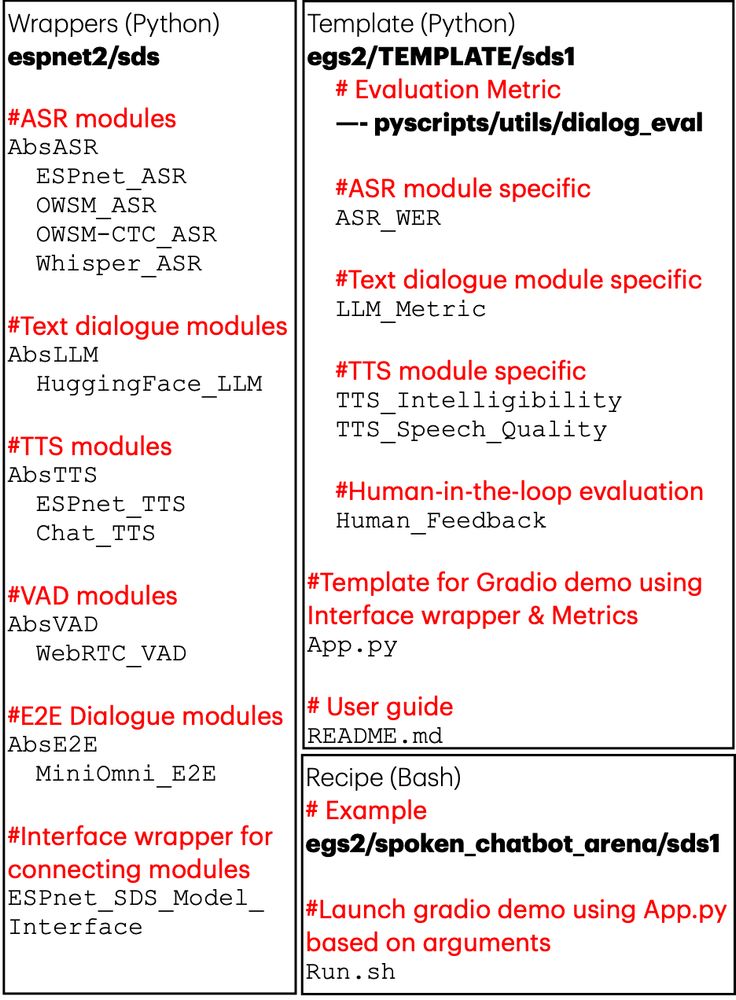

✅ Unified Web UI with support for both cascaded & E2E models

✅ Real-time evaluation of latency, semantic coherence, audio quality & more

✅ Mechanism for collecting user feedback

✅ Open source, with modular code that makes it easy to incorporate new systems!

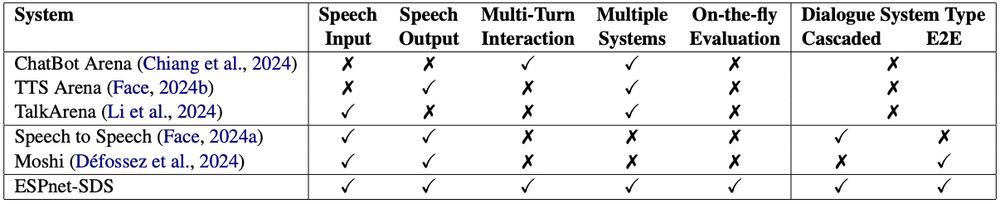

But evaluating and comparing them is challenging:

❌ No standardized interface—different frontends & backends

❌ Complex and inconsistent evaluation metrics

ESPnet-SDS aims to fix this!

along with co-authors at @ltiatcmu.bsky.social (@shinjiw.bsky.social @wavlab.bsky.social).

(9/9)

We’re open-sourcing our evaluation platform: github.com/espnet/espne...!

(8/9)

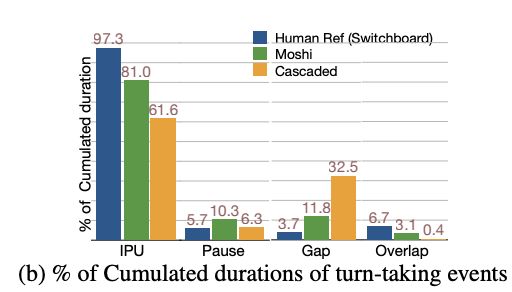

❌ Both systems fail to speak up when they should and do not give the user enough cues when they want to keep the conversational floor.

❌ Moshi interrupts too aggressively.

❌ Both systems rarely backchannel.

❌ User interruptions are poorly managed.

(7/9)

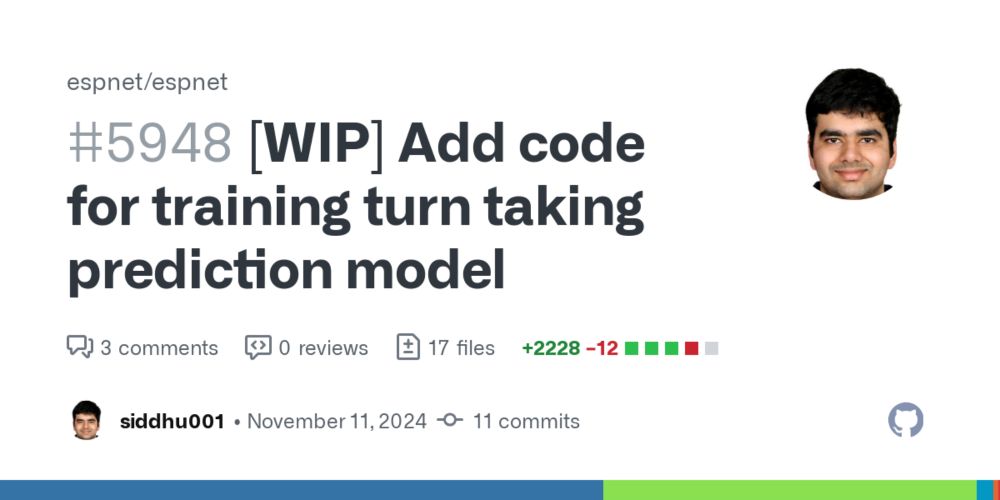

Strong out-of-distribution (OOD) generalization makes our model a reliable proxy for human judgment!

No need for costly human judgments: our model automatically judges the timing of turn-taking events!

(6/9)

Moshi generates overlapping speech—but is it helpful or disruptive to the natural flow of the conversation? 🤔

(5/9)

Moshi: small gaps and some overlap, but less overlap than in natural dialogue.

Cascaded: higher latency, minimal overlap.

(4/9)

Recent audio foundation models (FMs) claim conversational abilities, but there has been limited effort to evaluate their turn-taking capabilities.

(3/9)

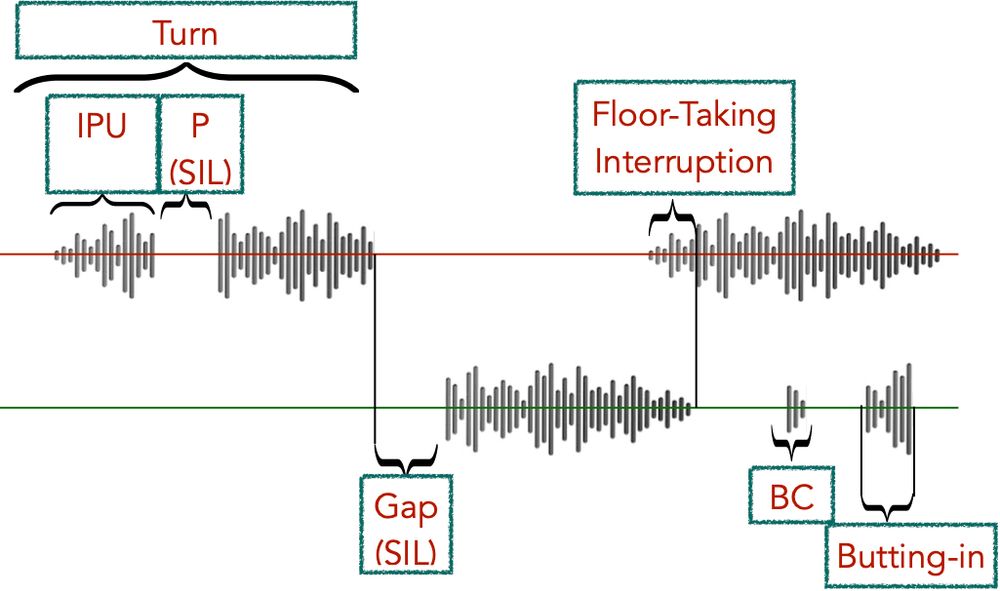

In human dialogue, we listen, speak, and backchannel in real-time.

Similarly, an AI should know when to listen, speak, backchannel, and interrupt; signal to the user when it wants to keep the conversational floor; and handle user interruptions.

(2/9)
